I am trying to parallelize some code used in the Gauss-Seidel algorithm to approximate the solution of a Linear Equation System.

In brief, for an NxN matrix, during one iteration, I am doing sqrt(N) sessions of parallel computation, one by one. During one session of parallel computation, I distribute the task of calculating sqrt(N) values from a vector among the available workers.

The code involved in a parallel computation session is this:

future_results(1:num_workers) = parallel.FevalFuture;

for i = 1:num_workers

start_itv = buck_bound+1 + (i - 1) * worker_length;

end_itv = min(buck_bound+1 + i * worker_length - 1, ends_of_buckets(current_bucket));

future_results(i) = parfeval(p, @hybrid_parallel_function, 3, A, b, x, x_last, buck_bound, n, start_itv, end_itv);

end

for i = 1:num_workers

[~, arr, start_itv, end_itv] = fetchNext(future_results(i));

x(start_itv:end_itv) = arr;

end

The function called by parfeval is this:

function [x_par, start_itv, end_itv] = hybrid_parallel_function (A, b, x, x_last, buck_bound, n, start_itv, end_itv)

x_par = zeros(end_itv - start_itv + 1, 1);

for i = start_itv:end_itv

x_par(i-start_itv+1) = b(i);

x_par(i-start_itv+1) = x_par(i-start_itv+1) - A(i, 1:buck_bound) * x(1:buck_bound);

x_par(i-start_itv+1) = x_par(i-start_itv+1) - A(i, buck_bound+1:i-1) * x_last(buck_bound+1:i-1);

x_par(i-start_itv+1) = x_par(i-start_itv+1) - A(i, i+1:n) * x_last(i+1:n);

x_par(i-start_itv+1) = x_par(i-start_itv+1) / A(i, i);

end

end

The entire code can be found here: https://pastebin.com/hRQ5Ugqz

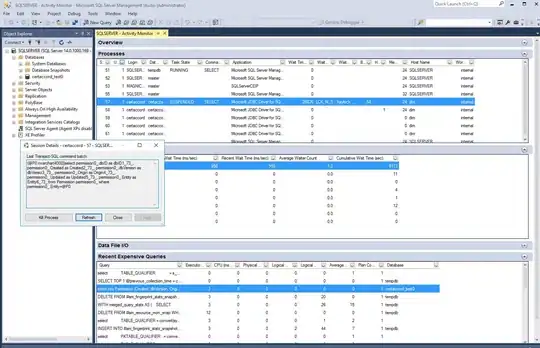

The matlab profiler for a 1000x1000 matrix. The parallel code is between 20 to 135 times slower than its serial counterpart, depending on the chosen coefficient matrix (and still much faster than spmd).

The parfeval computation might be lazily split between the lines 50 and 57? Still, I cannot explain to myself why there is this major overhead. It seems to have something to do with the number of times parfeval is called: I did lower the execution time by lowering the parfeval calls.

Is there something that can be further optimized? Do I have to resort to writing the code in C++?

Please help. Thank you very much!