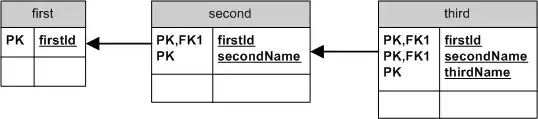

I'm using `LambdaLR` to schedule the learning rate:

    import torch
    import torch.nn as nn
    import matplotlib.pyplot as plt

    model = torch.nn.Linear(2, 1)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

    lambda1 = lambda epoch: 0.99 ** epoch
    scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1, last_epoch=-1)

    lrs = []
    for i in range(2001):
        optimizer.step()
        lrs.append(optimizer.param_groups[0]["lr"])
        scheduler.step()

    plt.plot(lrs)

I'm trying to set a minimum learning rate so that it doesn't decay all the way toward 0. How can I do that?
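
For reference, here is a rough sketch of the behaviour I'm after, not necessarily the right way to do it. Since `LambdaLR` sets the learning rate to the initial `lr` times whatever the lambda returns, clamping the multiplier at `min_lr / base_lr` should keep the learning rate from dropping below `min_lr`. The helper name `floored_decay` and the value `min_lr = 0.001` are made up for illustration, and the list comprehension only simulates the schedule in plain Python rather than calling the scheduler.

```python
def floored_decay(base_lr, min_lr, gamma=0.99):
    """Build an lr_lambda whose multiplier never drops below min_lr / base_lr.

    Hypothetical helper for illustration; not part of PyTorch.
    """
    # LambdaLR multiplies the initial lr by the returned factor,
    # so the floor has to be expressed as a ratio, not an absolute lr.
    floor = min_lr / base_lr
    return lambda epoch: max(gamma ** epoch, floor)


base_lr = 0.01   # same initial lr as the optimizer in the question
min_lr = 0.001   # assumed example value for the desired minimum
lr_lambda = floored_decay(base_lr, min_lr)

# Simulate the schedule: lr at each epoch = base_lr * lr_lambda(epoch)
lrs = [base_lr * lr_lambda(epoch) for epoch in range(2001)]
```

If this is sound, passing `lr_lambda=lr_lambda` to `LambdaLR` in place of `lambda1` should produce the same curve, flattening out at `min_lr` instead of continuing to shrink.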