I'm trying to parallelize this processing on a Dask cluster. Here's my attempt.

Setting up the cluster:

```python
from dask_gateway import Gateway

gateway = Gateway(
    address="http://traefik-pangeo-dask-gateway/services/dask-gateway",
    public_address="https://pangeo.aer-gitlab.com/services/dask-gateway",
    auth="jupyterhub",
)

options = gateway.cluster_options()
options  # displays the options widget in the notebook

cluster = gateway.new_cluster(cluster_options=options)
cluster.adapt(minimum=90, maximum=100)
client = cluster.get_client()

cluster  # displays the cluster widget
client
```

I then have a function that loads files from S3, processes them, and uploads the result to a different S3 bucket.

The function processes GOES data, selects a specific region from it, saves that region to a NetCDF file, and uploads the file to S3:

```python
import pandas as pd
import s3fs
import xarray as xr

# `fs` (an authenticated s3fs.S3FileSystem for the output bucket) and
# `bucket` (the output bucket prefix) are defined elsewhere in my notebook.


def get_records(rec):
    # rec[0] and rec[1] are the x/y location; rec[-1] is a timestamp string
    stamp = rec[-1]
    yr, mo, da, hr, mn = stamp[0:4], stamp[4:6], stamp[6:8], stamp[9:11], stamp[11:13]

    ps = s3fs.S3FileSystem(anon=True)

    # the GOES bucket is organized by day of year, zero-padded to 3 digits
    dy = pd.Period(f"{yr}-{mo}-{da}", freq="D").dayofyear
    print(dy)
    dy = f"{dy:03d}"
    hr = f"{int(hr):02d}"  # hr is sliced from a string, so convert before zero-padding

    cc = [7, 8, 9, 10, 11, 12, 13, 14, 15, 16]  # look at the IR channels only for now

    def select_box(ds):
        # keep only pixels within +/-0.005 of the record's (x, y) location
        return ds.where(
            (ds.x >= rec[0] - 0.005) & (ds.x <= rec[0] + 0.005)
            & (ds.y >= rec[1] - 0.005) & (ds.y <= rec[1] + 0.005),
            drop=True,
        )

    per_channel = []
    # this loop is for the 10 different channels
    for c in cc:
        ch = f"{c:02d}"
        files = ps.glob(f"s3://noaa-goes16/ABI-L1b-RadF/{yr}/{dy}/{hr}/OR_ABI-L1b-RadF-M3C{ch}*")

        # opening the last 2 time slices returned by the glob
        F1 = xr.open_dataset(ps.open(files[-2]))[["Rad"]]
        F2 = xr.open_dataset(ps.open(files[-1]))[["Rad"]]

        # concatenating the 2 time slices together
        per_channel.append(xr.concat([select_box(F1), select_box(F2)], dim="time"))

    # concatenating the different channels
    T = xr.concat(per_channel, dim="channel")

    # saving into a NetCDF file and storing it on S3
    path = stamp + ".nc"
    T.to_netcdf(path)
    fs.put(path, bucket + path)
```
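The date handling at the top of `get_records` can also be done with the standard library alone. This is only an illustrative sketch, not part of my original code: it assumes `rec[-1]` looks like `YYYYMMDD_HHMM` (inferred from the slice positions above, with the character at index 8 skipped), and `goes_glob` is a hypothetical helper name. It builds the same S3 glob pattern that gets passed to `ps.glob`, using `%j` for the zero-padded day of year.

```python
from datetime import datetime


def goes_glob(stamp: str, channel: int) -> str:
    """Hypothetical helper: build the GOES-16 ABI L1b full-disk glob pattern.

    Assumes `stamp` looks like 'YYYYMMDD_HHMM'; the character at index 8 is
    treated as a separator and ignored, matching the slices in get_records.
    """
    when = datetime.strptime(stamp[0:8] + stamp[9:13], "%Y%m%d%H%M")
    # %j is the zero-padded day of year (001-366), %H the zero-padded hour
    return (
        "s3://noaa-goes16/ABI-L1b-RadF/"
        f"{when:%Y}/{when:%j}/{when:%H}/"
        f"OR_ABI-L1b-RadF-M3C{channel:02d}*"
    )
```

For example, `goes_glob("20190115_1230", 7)` gives `s3://noaa-goes16/ABI-L1b-RadF/2019/015/12/OR_ABI-L1b-RadF-M3C07*`.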

I use Dask to run these in parallel. The cluster processes 50 files at a time; after each batch I restart the client to clear the workers' memory, then run the next 50 files:

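```python
import dask

# process the records in batches of 50, restarting the workers between batches
for j in range(0, len(records), 50):
    # one delayed task per record in this batch
    # (slicing avoids an IndexError on the final, shorter batch)
    tasks = [dask.delayed(get_records)(rec) for rec in records[j:j + 50]]
    results = dask.compute(*tasks)
    # clear the workers' memory before the next batch
    client.restart()
```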
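The batching can also be written as a small generator. This is a hypothetical helper (`batches` is my own name, not a Dask API). Slicing, rather than indexing `records[i]` up to `j + 50`, keeps the final batch from running past the end of `records` when its length is not a multiple of 50.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most `size` items.

    Hypothetical helper: slicing never raises IndexError, so the last batch
    is simply shorter when len(items) is not a multiple of size.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])
```

It would slot into the loop as `for batch in batches(records, 50):`, building the delayed tasks from `batch`.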

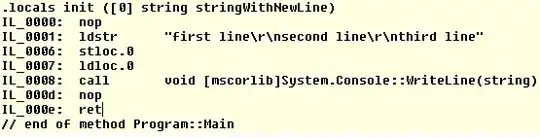

The problem is that the cluster processes files for a while (I have 10 workers running), but then the workers stop processing data one by one and sit idle, even though they still have memory available. They process 20-30 files and then do nothing. When I tried submitting only 20 files at once, they stopped after 10-12 files. The screenshot above shows some workers sitting idle despite having free memory. The strange part is that I ran the same code a couple of weeks ago and it worked perfectly. I don't know what the problem is now.