AutoML Tables can export a model as a TensorFlow SavedModel (.pb format). You can do this by sending a request with curl. See Exporting Models in AutoML Tables for detailed instructions on how to use models.export.

NOTE: The exported model used for testing is from the AutoML Tables quickstart.

JSON request, saved as request.json (use tf_saved_model to export a TensorFlow model in SavedModel format):

    {
      "outputConfig": {
        "modelFormat": "tf_saved_model",
        "gcsDestination": {
          "outputUriPrefix": "your-gcs-destination"
        }
      }
    }
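If you would rather generate request.json from a script than edit it by hand, the same body can be produced with a few lines of Python. This is only a minimal sketch: the helper names `build_export_body` and `write_request_file` and the example bucket path are hypothetical, and only the field names come from the request above.

```python
import json


def build_export_body(gcs_prefix: str) -> dict:
    """Return a models.export request body for a TensorFlow SavedModel export."""
    return {
        "outputConfig": {
            "modelFormat": "tf_saved_model",
            "gcsDestination": {"outputUriPrefix": gcs_prefix},
        }
    }


def write_request_file(gcs_prefix: str, path: str = "request.json") -> str:
    """Write the body to `path` so curl can send it with `-d @request.json`."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(build_export_body(gcs_prefix), f, indent=2)
    return path


# Example (hypothetical bucket path):
# write_request_file("gs://example-bucket/exported-model/")
```

Calling `write_request_file` with your own Cloud Storage prefix should leave a request.json in the current directory with the same structure as the hand-written one.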

Curl command (assuming that the model is in us-central1):

    curl -X POST \
      -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
      -H "Content-Type: application/json; charset=utf-8" \
      -d @request.json \
      https://automl.googleapis.com/v1beta1/projects/your-project-id/locations/us-central1/models/your-model-id:export
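For anyone who wants to issue the same call from Python instead of curl, here is a rough equivalent using only the standard library. Treat it as a sketch under assumptions: the function name `build_export_request` and its parameters are made up for illustration, the access token is assumed to be obtained separately (for example from the gcloud command used above), and the function only builds the request without sending it.

```python
import json
import urllib.request


def build_export_request(project_id, model_id, gcs_prefix, access_token,
                         location="us-central1"):
    """Build (but do not send) a POST request mirroring the curl command."""
    url = (
        "https://automl.googleapis.com/v1beta1/"
        f"projects/{project_id}/locations/{location}/models/{model_id}:export"
    )
    body = {
        "outputConfig": {
            "modelFormat": "tf_saved_model",
            "gcsDestination": {"outputUriPrefix": gcs_prefix},
        }
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


# To actually send it (requires a valid token and network access):
# with urllib.request.urlopen(build_export_request(...)) as resp:
#     print(resp.read().decode("utf-8"))
```

Keeping the request construction separate from the network call makes it easy to inspect the URL and headers before anything is sent.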

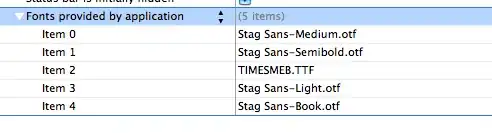

Exported model: