I want to run machine learning algorithms from the scikit-learn library on all my cores, using the Dask and joblib libraries.

My code using `joblib.parallel_backend` with Dask:

    # Fire up the Joblib backend with Dask:
    with joblib.parallel_backend('dask'):
        model_RFE = RFE(estimator=DecisionTreeClassifier(), n_features_to_select=5)
        fit_RFE = model_RFE.fit(X_values, Y_values)

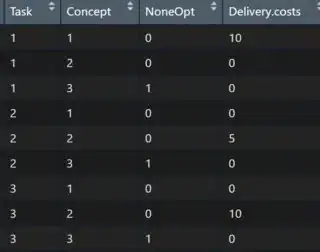

Unfortunately, when I look at my task manager I can see all my workers chilling and doing nothing, while only one new Python process is doing all the work:

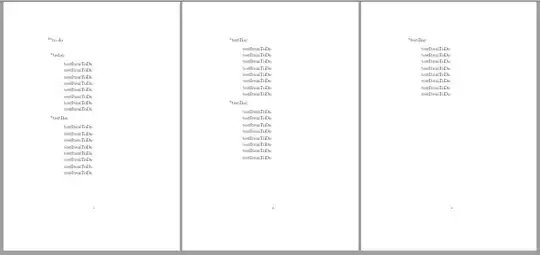

Even in the Dask visualization for my Client I see the workers doing nothing:

- Can you please tell me what I am doing wrong?

- Is it my code (whole code below)?

- I really just want to run ML in parallel for a speedup. If I don't need to use `joblib`, I would welcome any other ideas.

My whole code, an attempt at following this tutorial from the docs:

    import pandas as pd
    import dask.dataframe as df
    from dask.distributed import Client
    import sklearn
    from sklearn.feature_selection import RFE
    from sklearn.tree import DecisionTreeClassifier
    import joblib

    # Create a cluster on the local PC
    client = Client(n_workers=4, threads_per_worker=1, memory_limit='4GB')
    client

    # Read data from .csv
    dataframe_lazy = df.read_csv(path, engine='c', low_memory=False)
    dataframe = dataframe_lazy.compute()

    # Get my X and Y values and release the original DF from memory
    X_values = dataframe.drop(columns=['Id', 'Target'])
    Y_values = dataframe['Target']
    del dataframe

    # Prepare data
    X_values.fillna(0, inplace=True)

    # Fire up the Joblib backend with Dask:
    with joblib.parallel_backend('dask'):
        model_RFE = RFE(estimator=DecisionTreeClassifier(), n_features_to_select=5)
        fit_RFE = model_RFE.fit(X_values, Y_values)
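One diagnostic I could run, as a rough sketch and not part of my original attempt: as far as I understand, `joblib.parallel_backend` only affects code that itself dispatches work through joblib, and in scikit-learn that usually goes together with an `n_jobs` parameter on the estimator. The check below only inspects constructor parameters. The helper name `exposes_n_jobs` is made up for illustration, and `RFECV` and `RandomForestClassifier` are included purely for comparison.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, RFECV
from sklearn.tree import DecisionTreeClassifier


def exposes_n_jobs(estimator):
    """Return True if the estimator itself has an `n_jobs` parameter.

    Hypothetical helper: it only looks at the estimator's own constructor
    parameters (deep=False), not at any nested estimator.
    """
    return "n_jobs" in estimator.get_params(deep=False)


# The first two are the estimators from my code; the last two are
# comparison points only.
candidates = {
    "DecisionTreeClassifier": DecisionTreeClassifier(),
    "RFE": RFE(estimator=DecisionTreeClassifier(), n_features_to_select=5),
    "RFECV": RFECV(estimator=DecisionTreeClassifier()),
    "RandomForestClassifier": RandomForestClassifier(),
}

n_jobs_support = {name: exposes_n_jobs(est) for name, est in candidates.items()}

for name, supported in n_jobs_support.items():
    print(f"{name}: n_jobs parameter present = {supported}")
```

If neither `RFE` nor `DecisionTreeClassifier` exposes `n_jobs`, that might explain why the Dask workers receive nothing to do, but I am not sure this is the whole story.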