I am using a strand to serialize certain processing within some objects. However, when the object dies, the strand element somehow refuses to go away. Like a soul in purgatory, it lingers in memory and causes memory usage to increase over days. I have managed to replicate the problem in sample code.

I am creating 5 families, each with one parent and one child. The parent object contains the child and a strand object that ensures the processing happens serially. Each family is issued 3 processing tasks, and they run in the right order irrespective of which thread they run on. I am taking heap snapshots in VC++ before and after the objects are created and processed. The snapshot comparison shows that the strand alone lives on even after the Parent and Child objects have been destroyed.

How do I ensure the strand object is destroyed? Unlike the sample program, my application runs for years without shutdown, so I will be stuck with millions of zombie strand objects within a month.

```cpp
#include <boost/thread.hpp>
#include <boost/shared_ptr.hpp>
#include <boost/make_shared.hpp>
#include <boost/enable_shared_from_this.hpp>
#include <boost/noncopyable.hpp>
#include <boost/bind.hpp>
#include <boost/asio/io_service.hpp>
#include <boost/asio/strand.hpp>
#include <iostream>

boost::mutex mtx; // serializes access to std::cout

class Child : public boost::noncopyable, public boost::enable_shared_from_this<Child>
{
    int _id;

public:
    explicit Child(int id) : _id(id) {}

    void process(int order)
    {
        // Simulate two seconds of work for this task.
        boost::this_thread::sleep_for(boost::chrono::seconds(2));

        boost::lock_guard<boost::mutex> lock(mtx);
        // std::dec restores decimal output after printing the thread id in hex.
        std::cout << "Family " << _id << " processing order " << order
                  << " in thread " << std::hex << boost::this_thread::get_id()
                  << std::dec << std::endl;
    }
};

class Parent : public boost::noncopyable, public boost::enable_shared_from_this<Parent>
{
    boost::shared_ptr<Child> _child;
    boost::asio::io_service::strand _strand; // serializes this family's tasks

public:
    Parent(boost::asio::io_service& ioS, int id) : _strand(ioS)
    {
        _child = boost::make_shared<Child>(id);
    }

    void process()
    {
        // Each bound handler holds its own copy of _child.
        for (int order = 1; order <= 3; order++)
        {
            _strand.post(boost::bind(&Child::process, _child, order));
        }
    }
};

int main(int argc, char* argv[])
{
    boost::asio::io_service ioS;
    boost::thread_group threadPool;
    boost::asio::io_service::work work(ioS); // keeps run() from returning while idle

    int noOfCores = boost::thread::hardware_concurrency();
    for (int i = 0; i < noOfCores; i++)
    {
        threadPool.create_thread(boost::bind(&boost::asio::io_service::run, &ioS));
    }

    std::cout << "Take the first snapshot" << std::endl;
    boost::this_thread::sleep_for(boost::chrono::seconds(10));

    std::cout << "Creating families" << std::endl;
    for (int family = 1; family <= 5; family++)
    {
        auto obj = boost::make_shared<Parent>(ioS, family);
        obj->process();
    }

    std::cout << "Take the second snapshot after all orders are processed" << std::endl;
    boost::this_thread::sleep_for(boost::chrono::seconds(60));

    // Stop the io_service and wait for the pool threads so that no thread
    // is still inside run() when ioS is destroyed.
    ioS.stop();
    threadPool.join_all();
    return 0;
}
```

The output looks like this:

```
Take the first snapshot
Creating families
Take the second snapshot after all orders are processed
Family 3 processing order 1 in thread 50c8
Family 1 processing order 1 in thread 5e38
Family 4 processing order 1 in thread a0c
Family 5 processing order 1 in thread 47e8
Family 2 processing order 1 in thread 5f94
Family 3 processing order 2 in thread 46ac
Family 2 processing order 2 in thread 47e8
Family 5 processing order 2 in thread a0c
Family 1 processing order 2 in thread 50c8
Family 4 processing order 2 in thread 5e38
Family 2 processing order 3 in thread 47e8
Family 4 processing order 3 in thread 5e38
Family 1 processing order 3 in thread 50c8
Family 5 processing order 3 in thread a0c
Family 3 processing order 3 in thread 46ac
```

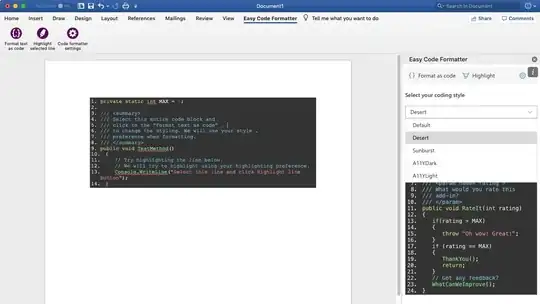

I took the first heap snapshot before creating families. I took the second snapshot few seconds after all the 15 lines were printed (5 familes X 3 tasks). The heap comparison shows the following:

All the Parent and Child objects have gone away, but all the 5 strand objects lives on...

Edit: For those unfamiliar with shared_ptr: not every object dies at the end of each loop iteration. The Parent held by `obj` is released there, but a copy of the Child's shared_ptr has been bound into each of the 3 queued tasks, so the Child lives a charmed life until all the tasks for its family have completed. Once the last of those references is released, the Child object is destroyed.