Hello, and thanks in advance for all answers. I really appreciate the community's help.

Here is my dataframe, loaded from a CSV of data scraped from classified ads for cars:

|   | Unnamed: 0 | NameYear | Price | CarParams | url |
|---|---|---|---|---|---|
| 0 | 0 | BMW 7 серия, 2007 | 520 000 ₽ | 187 000 км, AT (445 л.с.), седан, задний, бензин | https://www.avito.ru/moskva/avtomobili/bmw_7_s... |
| 1 | 1 | BMW X3, 2021 | от 4 810 000 ₽\n4 960 000 ₽ без скидки | 2.0 AT (190 л.с.), внедорожник, полный, дизель | https://www.avito.ru/moskva/avtomobili/bmw_x3_... |
| 2 | 2 | BMW 2 серия Gran Coupe, 2021 | 2 560 000 ₽ | 1.5 AMT (140 л.с.), седан, передний, бензин | https://www.avito.ru/moskva/avtomobili/bmw_2_s... |
| 3 | 3 | BMW X5, 2021 | от 9 259 800 ₽\n9 974 800 ₽ без скидки | 3.0 AT (400 л.с.), внедорожник, полный, дизель | https://www.avito.ru/moskva/avtomobili/bmw_x5_... |
| 4 | 4 | BMW X1, 2021 | от 3 130 000 ₽\n3 220 000 ₽ без скидки | 2.0 AT (192 л.с.), внедорожник, полный, бензин | https://www.avito.ru/moskva/avtomobili/bmw_x1_... |

- THE TASK: I want to know whether there are duplicate rows, i.e. whether the SAME car advertisement appears more than once. The url column is probably the most reliable key because it should be unique per ad, whereas CarParams or NameYear can legitimately repeat across different ads. So I am checking nunique and duplicated on the url column.
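For readers who cannot see the screenshot, the check described above can be sketched roughly as follows. The small dataframe and the example.com urls are hypothetical stand-ins for the scraped CSV, not my real data; only the `nunique` and `duplicated` calls matter.

```python
import pandas as pd

# Hypothetical stand-in for the scraped data (not the real ads).
df = pd.DataFrame({
    "NameYear": ["BMW X3, 2021", "BMW X5, 2021", "BMW X3, 2021", "BMW X1, 2021"],
    "url": [
        "https://example.com/ad/1",
        "https://example.com/ad/2",
        "https://example.com/ad/1",  # same ad scraped a second time
        "https://example.com/ad/3",
    ],
})

n_rows = len(df)
n_unique_urls = df["url"].nunique()   # number of distinct urls
dup_mask = df["url"].duplicated()     # default keep="first": True only for later repeats
n_repeats = int(dup_mask.sum())

print(f"{n_rows} rows, {n_unique_urls} unique urls, {n_repeats} repeated rows")
```

If the number of unique urls is smaller than the number of rows, at least one url occurs more than once.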

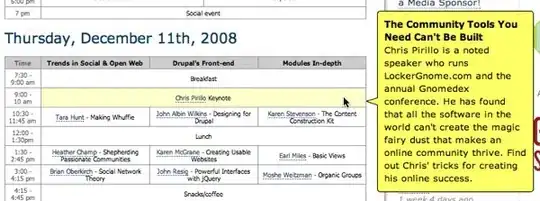

Screenshot to visually inspect the result of duplicated:

- THE ISSUE: On visual inspection (sorry for the unprofessional jargon), the urls returned by duplicated are not the SAME as one another, but I expected to see exactly identical urls so that I could check for repeated data. I also tried setting keep=False.
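One possible reading, offered as an assumption since it depends on what the screenshot actually shows: with the default `keep="first"`, `duplicated` marks only the later copies, so the filtered rows are each a repeat of a different ad and will not match each other. A minimal sketch of a way to see the matching rows side by side, using `keep=False` plus a sort on url (the data below is hypothetical):

```python
import pandas as pd

# Hypothetical urls; two ads each appear twice, one appears once.
df = pd.DataFrame({
    "url": [
        "https://example.com/ad/2",
        "https://example.com/ad/1",
        "https://example.com/ad/3",
        "https://example.com/ad/1",
        "https://example.com/ad/2",
    ],
})

# Show full strings, so long urls are not cut off with "..." when printed.
pd.set_option("display.max_colwidth", None)

# keep=False marks every member of a duplicate group, not just the later copies.
dupes = df[df["url"].duplicated(keep=False)].sort_values("url")

# How many times each repeated url occurs.
group_sizes = dupes.groupby("url").size()

print(dupes)
print(group_sizes)
```

Sorting puts identical urls on adjacent rows, and lifting the column-width limit helps rule out the case where urls only look different or identical because the printed values are truncated.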