Original question (see the update in the next section)

I would like to download files produced by multiple jobs into a single folder on Azure Pipelines. Here is a sketch of what I'd like to accomplish:

    jobs:
    - job: job1
      pool: {vmImage: 'Ubuntu-16.04'}
      steps:
      - bash: |
          printf "Hello from job1\n" > $(Pipeline.Workspace)/file.1
      - task: PublishPipelineArtifact@1
        inputs:
          targetPath: $(Pipeline.Workspace)/file.1

    - job: job2
      pool: {vmImage: 'Ubuntu-16.04'}
      steps:
      - bash: |
          printf "Hello from job2\n" > $(Pipeline.Workspace)/file.2
      - task: PublishPipelineArtifact@1
        inputs:
          targetPath: $(Pipeline.Workspace)/file.2

    - job: check_prev_jobs
      dependsOn: [job1, job2]  # i.e. all other jobs
      pool: {vmImage: 'Ubuntu-16.04'}
      steps:
      - bash: |
          mkdir -p $(Pipeline.Workspace)/previous_artifacts
      - task: DownloadPipelineArtifact@2
        inputs:
          source: current
          path: $(Pipeline.Workspace)/previous_artifacts

The goal is for the directory $(Pipeline.Workspace)/previous_artifacts to contain only file.1 and file.2 directly, rather than subdirectories job1 and job2 that contain file.1 and file.2 respectively.

Thanks!

Update

Using @Yujun Ding-MSFT's answer, I created the following azure-pipelines.yml file:

    stages:
    - stage: generate
      jobs:
      - job: Job_1
        displayName: job1
        pool:
          vmImage: ubuntu-20.04
        variables:
          JOB_NAME: $(Agent.JobName)
          DIR: $(Pipeline.Workspace)/$(JOB_NAME)
        steps:
        - checkout: self
        - bash: |
            mkdir -p $DIR
            cd $DIR
            printf "Time from job1\n" > $JOB_NAME.time
            printf "Hash from job1\n" > $JOB_NAME.hash
            printf "Raw from job1\n" > $JOB_NAME.raw
            printf "Nonsense from job1\n" > $JOB_NAME.nonesense
          displayName: Generate files
        - task: PublishPipelineArtifact@1
          displayName: Publish Pipeline Artifact
          inputs:
            path: $(DIR)
            artifactName: job1

      - job: Job_2
        displayName: job2
        pool:
          vmImage: ubuntu-20.04
        variables:
          JOB_NAME: $(Agent.JobName)
          DIR: $(Pipeline.Workspace)/$(JOB_NAME)
        steps:
        - checkout: self
        - bash: |
            mkdir -p $DIR
            cd $DIR
            printf "Time from job2\n" > $JOB_NAME.time
            printf "Hash from job2\n" > $JOB_NAME.hash
            printf "Raw from job2\n" > $JOB_NAME.raw
            printf "Nonsense from job2\n" > $JOB_NAME.nonesense
          displayName: Generate files
        - task: PublishPipelineArtifact@1
          displayName: Publish Pipeline Artifact copy
          inputs:
            path: $(DIR)
            artifactName: job2

    - stage: analyze
      jobs:
      - job: download_display
        displayName: Download and display
        pool:
          vmImage: ubuntu-20.04
        variables:
          DIR: $(Pipeline.Workspace)/artifacts
        steps:
        - checkout: self
        - bash: |
            mkdir -p $DIR
        - task: DownloadPipelineArtifact@2
          displayName: Download Pipeline Artifact
          inputs:
            path: $(DIR)
            patterns: '**/*.time'
        - bash: |
            ls -lR $DIR
            cd $DIR
          displayName: Check dir content

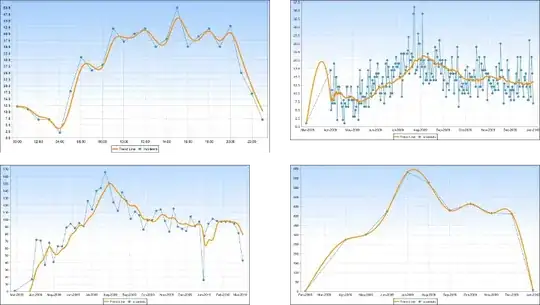

However, as shown on the screenshot below, I still get each .time file in a separate job-related directory:

Unfortunately, it seems that what I want may not be possible with Pipeline Artifacts, as explained in this Microsoft discussion. That would be a bummer, given that Build Artifacts are deprecated at this point.
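
For what it's worth, one possible workaround is to leave the download task as it is and flatten the directory afterwards in an extra bash step. The following is only a minimal sketch under my own assumptions: `flatten_artifacts` is a hypothetical helper name (not part of Azure Pipelines), it assumes file names are unique across artifacts, and it relies on `find` and `mv -n` as available on the Ubuntu agents.

```shell
#!/usr/bin/env bash

# Hypothetical helper (not an Azure Pipelines feature): move every file found
# in subdirectories of "$1" up into "$1" itself, then drop the emptied
# per-artifact directories.
flatten_artifacts() {
  local dir="$1"
  # -mindepth 2 skips files that already sit directly in "$dir".
  # mv -n refuses to overwrite, so files with clashing names stay where they are.
  find "$dir" -mindepth 2 -type f -exec mv -n {} "$dir"/ \;
  # Remove the directories that are now empty (children before parents).
  find "$dir" -mindepth 1 -type d -empty -delete
}

# In the analyze stage above this would be called as:
#   flatten_artifacts "$DIR"
```

Placed in a `bash:` step right after DownloadPipelineArtifact@2, this should leave the .time files directly in $DIR. If two artifacts contain a file with the same name, the second one is not moved and its directory is kept, so nothing gets overwritten silently.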