I know that in convolution layers the kernel size needs to be a multiple of the stride, or else it produces artifacts in the gradient calculations, such as the checkerboard problem. Does the same apply to pooling layers? I read somewhere that max pooling can cause similar problems. Take this line in the discriminator, for example:

self.downsample = nn.AvgPool2d(3, stride=2, padding=1, count_include_pad=False)
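To make the concern concrete, here is a minimal 1D sketch of what I mean by uneven overlap. It is my own illustration, not code from MUNIT, and the helper name `coverage_counts` is made up. It counts how many pooling (or convolution) windows touch each input position. In the backward pass each input position receives gradient from every window that covers it, so an alternating count is what I would expect to show up as a checkerboard-like pattern. It ignores the per-window divisor that `count_include_pad=False` changes at the borders.

```python
def coverage_counts(length, kernel, stride, padding=0):
    """Count how many sliding windows cover each input position (1D)."""
    counts = [0] * length
    # Standard output-size formula for a strided window with zero padding.
    n_out = (length + 2 * padding - kernel) // stride + 1
    for o in range(n_out):
        start = o * stride - padding  # window start in input coordinates
        for k in range(kernel):
            i = start + k
            if 0 <= i < length:  # skip positions that fall in the padding
                counts[i] += 1
    return counts


# Kernel 3, stride 2, padding 1 (same settings as the AvgPool2d line above)
print(coverage_counts(8, kernel=3, stride=2, padding=1))
# [1, 2, 1, 2, 1, 2, 1, 1]

# Kernel 4, stride 2, padding 1 (same settings as my stride-2 convolutions)
print(coverage_counts(8, kernel=4, stride=2, padding=1))
# [1, 2, 2, 2, 2, 2, 2, 1]
```

With kernel 3 and stride 2 the coverage alternates between 1 and 2, while with kernel 4 and stride 2 it is uniform away from the borders. That is why I suspect the pooling layer rather than the convolutions.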

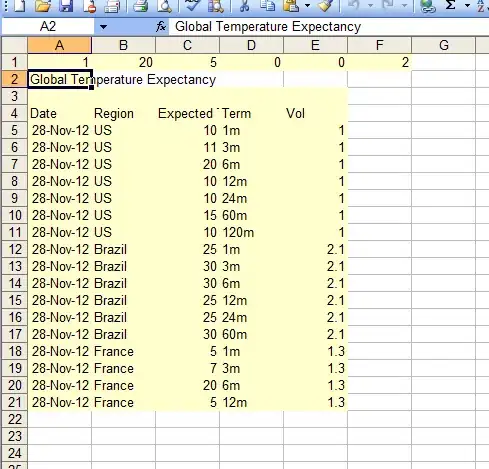

I have a model (MUNIT) with it, and this is the image it produced:

It looks like the checkerboard problem, or at least a gradient problem, but I checked my convolution layers and didn't find the error described above. They all have either a kernel size of 4 with stride 2, or an odd kernel size with stride 1.