The solution you are asking for is too complex to be solved by one function or particular algorithm. In fact, the problem could be broken down into smaller steps, each with their own algorithms and solutions. Instead of offering you a free, complete, copy-paste solution, I'll give you a general outline of the problem and post part of the solution I'd design. These are the steps I propose:

Identify and extract all the arrow blobs from the image, and process them one by one.

Find the end-points of each arrow, that is, its starting and ending points (the "tail" and the "tip").

Undo the rotation, so you have straightened arrows always, no matter their angle.

After this, the arrows will always point in one direction. This normalization lends itself easily to classification.

After processing, you can pass the image to a k-NN classifier, a Support Vector Machine or even (if you are willing to call the "big guns" on this problem) a CNN (in which case you probably won't need to undo the rotation, as long as you have enough training samples). You don't even have to compute features, as passing the raw image to an SVM would probably be enough. However, you need more than one training sample for each arrow class.

Alright, let's see. First, let's extract each arrow from the input. This is done using cv2.findContours, and this part is very straightforward:

# Imports:
import cv2
import math
import numpy as np

# Image path:
path = "D://opencvImages//"
fileName = "arrows.png"

# Reading an image in default mode:
inputImage = cv2.imread(path + fileName)

# Grayscale conversion:
grayscaleImage = cv2.cvtColor(inputImage, cv2.COLOR_BGR2GRAY)
# Invert the image (findContours looks for white blobs on a black background):
grayscaleImage = 255 - grayscaleImage

# Find the big contours/blobs on the binary image:
contours, hierarchy = cv2.findContours(grayscaleImage, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_SIMPLE)

Now, let's check out the contours and process them one by one. Let's compute a (non-rotated) bounding box of the arrow and crop that sub-image. Note that some noise could show up as small blobs, and we don't want to process those, so I apply an area filter to skip blobs of small area. Like this:

# Process each contour 1-1:
for i, c in enumerate(contours):
    # Approximate the contour to a polygon:
    contoursPoly = cv2.approxPolyDP(c, 3, True)
    # Convert the polygon to a bounding rectangle:
    boundRect = cv2.boundingRect(contoursPoly)
    # Get the bounding rect's data:
    rectX = boundRect[0]
    rectY = boundRect[1]
    rectWidth = boundRect[2]
    rectHeight = boundRect[3]
    # Get the rect's area:
    rectArea = rectWidth * rectHeight
    minBlobArea = 100

We set a minBlobArea threshold and only process contours whose bounding-box area is above it. For those, we crop the image:

    # Check if blob is above min area:
    if rectArea > minBlobArea:
        # Crop the roi:
        croppedImg = grayscaleImage[rectY:rectY + rectHeight, rectX:rectX + rectWidth]
        # Extend the borders for the skeleton:
        borderSize = 5
        croppedImg = cv2.copyMakeBorder(croppedImg, borderSize, borderSize, borderSize, borderSize, cv2.BORDER_CONSTANT)
        # Store a deep copy of the crop for results:
        grayscaleImageCopy = cv2.cvtColor(croppedImg, cv2.COLOR_GRAY2BGR)
        # Compute the skeleton (ximgproc ships with opencv-contrib;
        # thinning type 1 selects the Guo-Hall variant):
        skeleton = cv2.ximgproc.thinning(croppedImg, None, 1)

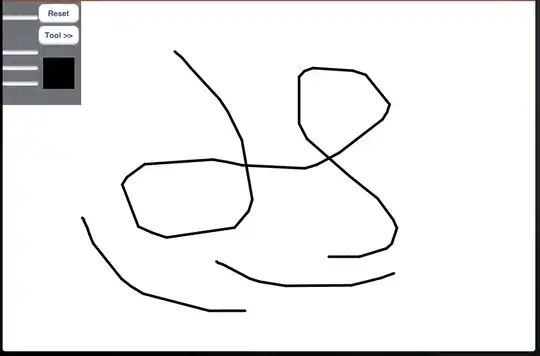

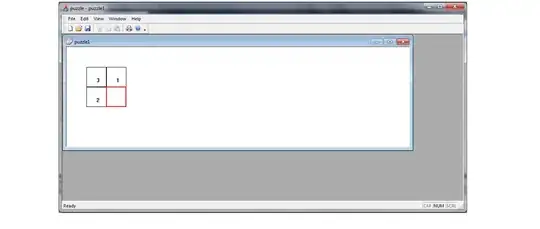

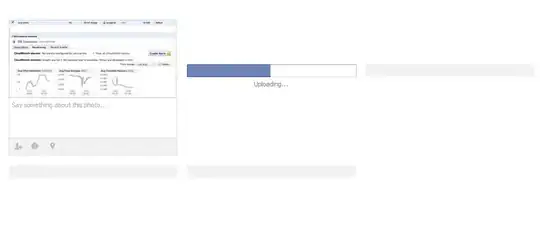

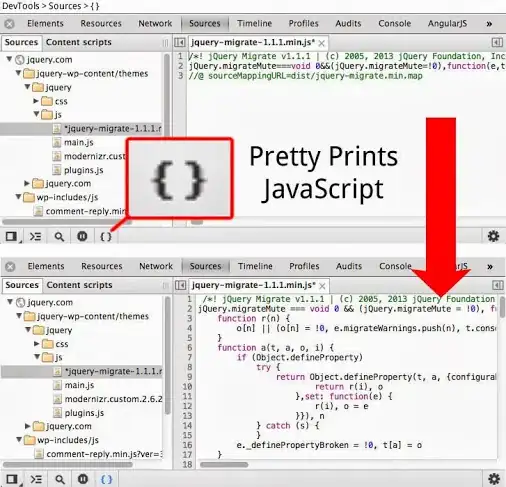

A couple of things are going on here. After I crop the ROI of the current arrow, I extend the borders of that image. I store a deep copy of this image for further processing and, lastly, I compute the skeleton. The border is extended before skeletonizing because the algorithm produces artifacts if the contour is too close to the image limits; padding the image in all directions prevents them. The skeleton is needed for the way I find the starting and ending points of the arrow. More on this later. This is the first arrow, cropped and padded:

This is the skeleton:

Note that the "thickness" of the contour is normalized to 1 pixel. That's cool, because that's what I need for the following processing step: finding the start/end points. This is done by applying a convolution with a kernel designed to identify one-pixel-wide end-points on a binary image. Refer to this post for the specifics. We will prepare the kernel and use cv2.filter2D to get the convolution:

        # Threshold the image so that white (skeleton) pixels get a value
        # of 10 and black (background) pixels a value of 0:
        _, binaryImage = cv2.threshold(skeleton, 128, 10, cv2.THRESH_BINARY)
        # Set the end-points kernel:
        h = np.array([[1, 1, 1],
                      [1, 10, 1],
                      [1, 1, 1]])
        # Convolve the image with the kernel:
        imgFiltered = cv2.filter2D(binaryImage, -1, h)
        # Extract only the end-point pixels, those with
        # an intensity value of 110:
        binaryImage = np.where(imgFiltered == 110, 255, 0)
        # np.where returns a wider integer array,
        # so convert back to 8-bit uint:
        binaryImage = binaryImage.astype(np.uint8)
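To see why 110 is the value to look for, here is a small NumPy-only sketch of the same filtering on a toy skeleton. The helper name `endpoint_response`, the toy line and the zero padding are assumptions for illustration, not part of the pipeline above. A skeleton pixel contributes 10 × 10 = 100 at its own position, and each of its 8-connected skeleton neighbours adds 10, so a pixel with exactly one neighbour scores 110 and an interior pixel with two neighbours scores 120.

```python
import numpy as np

def endpoint_response(skeleton01):
    """Correlate a 0/1 skeleton (scaled to 0/10) with the end-point kernel.

    Zero padding is an assumption here; it matches a crop that already has
    a black border around the skeleton.
    """
    img = skeleton01.astype(np.int32) * 10
    kernel = np.array([[1, 1, 1],
                       [1, 10, 1],
                       [1, 1, 1]], dtype=np.int32)
    rows, cols = img.shape
    padded = np.pad(img, 1, mode="constant")
    response = np.zeros_like(img)
    # Sum the shifted, weighted copies of the image (the kernel is symmetric,
    # so correlation and convolution give the same result).
    for dy in range(3):
        for dx in range(3):
            response += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return response

# Hypothetical toy skeleton: a horizontal, one-pixel-wide line.
toy = np.zeros((5, 7), dtype=np.uint8)
toy[2, 1:6] = 1
response = endpoint_response(toy)
endpoints = np.argwhere(response == 110)  # (row, col) of each end-point
print(endpoints)
```

Background pixels can never reach 110, because without the centre weight the most they can collect is 8 × 10 = 80.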

After the convolution, all end-points have a value of 110. Setting these pixels to 255, while the rest are set to black, yields the following image (after proper conversion):

Those tiny pixels correspond to the "tail" and "tip" of the arrow. Notice there's more than one point per "arrow section". This is because the ends of the arrow do not terminate perfectly in one pixel. In the case of the tip, for example, there will be more end-points than in the tail. This is a characteristic we will exploit later. Now, pay attention to this: there are multiple end-points, but we only need a starting point and an ending point. I'm going to use K-means to group the points in two clusters.

Using K-means will also let me identify which end-points belong to the tail and which to the tip, so I'll always know the direction of the arrow. Let's roll:

        # Find the X, Y location of all the end-point pixels:
        Y, X = binaryImage.nonzero()
        # K-means with two clusters needs at least two points:
        if len(X) >= 2:
            # Reshape the arrays for K-means:
            Y = Y.reshape(-1, 1)
            X = X.reshape(-1, 1)
            Z = np.hstack((X, Y))
            # K-means operates on 32-bit float data:
            floatPoints = np.float32(Z)
            # Set the convergence criteria and call K-means:
            criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 10, 1.0)
            _, label, center = cv2.kmeans(floatPoints, 2, None, criteria, 10, cv2.KMEANS_RANDOM_CENTERS)

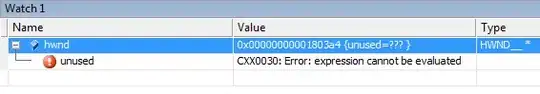

Be careful with the data types. If I print the label and center matrices, I get this (for the first arrow):

Center:

[[ 6. 102. ]

[104. 20.5]]

Labels:

[[1]

[1]

[0]]

center tells me the center (x, y) of each cluster, that is, the two points I was originally looking for. label tells me which cluster each original data point falls in. As you can see, there were originally 3 points. Two of those points (the ones belonging to the tip of the arrow) are assigned to cluster 1, while the remaining end-point (the arrow tail) is assigned to cluster 0. In the center matrix the centers are ordered by cluster number: the first center is that of cluster 0, while the second is that of cluster 1. Using this info I can easily look for the cluster that groups the majority of points. That will be the tip of the arrow, while the remaining one will be the tail:

            # Set the cluster count, find the points belonging
            # to cluster 0 and cluster 1:
            cluster1Count = np.count_nonzero(label)
            cluster0Count = np.shape(label)[0] - cluster1Count
            # Look for the cluster with the max number of points.
            # That cluster will be the tip of the arrow:
            maxCluster = 0
            if cluster1Count > cluster0Count:
                maxCluster = 1
            # Check out the centers of each cluster:
            matRows, matCols = center.shape
            # We need at least 2 cluster centers (one per row) for this operation:
            if matRows >= 2:
                # Store the ordered end-points here:
                orderedPoints = [None] * 2
                # Let's identify and draw the two end-points
                # of the arrow:
                for b in range(matRows):
                    # Get cluster center:
                    pointX = int(center[b][0])
                    pointY = int(center[b][1])
                    # Get the "tip"
                    if b == maxCluster:
                        color = (0, 0, 255)
                        orderedPoints[0] = (pointX, pointY)
                    # Get the "tail"
                    else:
                        color = (255, 0, 0)
                        orderedPoints[1] = (pointX, pointY)
                    # Draw it:
                    cv2.circle(grayscaleImageCopy, (pointX, pointY), 3, color, -1)
                    cv2.imshow("End Points", grayscaleImageCopy)
                    cv2.waitKey(0)
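The majority vote can also be tried in isolation, without running K-means. The sketch below feeds in the center and label values printed earlier for the first arrow; the helper name `tip_and_tail` is hypothetical, and `np.bincount` is simply another way of counting the members of each cluster.

```python
import numpy as np

def tip_and_tail(centers, labels):
    """Return (tip, tail) as integer (x, y) tuples.

    The cluster holding the most end-points is taken as the tip.
    On a tie, np.argmax picks cluster 0, which is an arbitrary choice.
    """
    counts = np.bincount(labels.ravel(), minlength=2)
    tip_cluster = int(np.argmax(counts))
    tail_cluster = 1 - tip_cluster
    tip = tuple(int(v) for v in centers[tip_cluster])
    tail = tuple(int(v) for v in centers[tail_cluster])
    return tip, tail

# Values copied from the printed output for the first arrow:
centers = np.array([[6.0, 102.0], [104.0, 20.5]], dtype=np.float32)
labels = np.array([[1], [1], [0]], dtype=np.int32)

tip, tail = tip_and_tail(centers, labels)
print("tip:", tip, "tail:", tail)
```

Keep in mind that the tie case (for example, one end-point found at each end) gives no real information about the direction, so it may be worth skipping such arrows instead of guessing.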

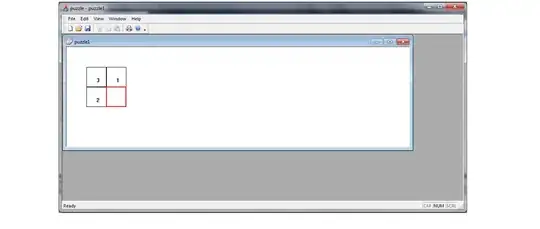

This is the result; the end-point at the tip of the arrow will always be in red and the end-point at the tail in blue:

Now that we know the direction of the arrow, let's compute the angle. I will measure this angle from 0 to 360 degrees. The angle will always be the one between the horizon line and the tip. So, we manually compute the angle:

                # Store the tail (p0) and tip (p1) points:
                p0x = orderedPoints[1][0]
                p0y = orderedPoints[1][1]
                p1x = orderedPoints[0][0]
                p1y = orderedPoints[0][1]
                # Compute the sides of the triangle (image y grows downward,
                # so the vertical side is flipped):
                adjacentSide = p1x - p0x
                oppositeSide = p0y - p1y
                # Compute the angle alpha in [0, 360). atan2 resolves the quadrant
                # and also handles vertical arrows (adjacentSide == 0):
                alpha = math.degrees(math.atan2(oppositeSide, adjacentSide)) % 360
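As a sanity check of the angle convention, here is a minimal stand-alone sketch. The helper name `arrow_angle` and the sample points are hypothetical. It uses `math.atan2`, which picks the quadrant from the signs of both sides and does not divide by zero for vertical arrows.

```python
import math

def arrow_angle(tail, tip):
    """Angle in [0, 360) from tail to tip, measured counter-clockwise
    from the horizontal, for (x, y) points in image coordinates."""
    adjacent = tip[0] - tail[0]
    opposite = tail[1] - tip[1]  # flipped: image rows grow downward
    return math.degrees(math.atan2(opposite, adjacent)) % 360.0

# Hypothetical (tail, tip) pairs covering the axes and one diagonal:
samples = {
    "right": ((0, 0), (10, 0)),
    "up": ((0, 10), (0, 0)),
    "left": ((10, 0), (0, 0)),
    "down": ((0, 0), (0, 10)),
    "down-left": ((10, 0), (0, 10)),
}
for name, (tail, tip) in samples.items():
    print(name, arrow_angle(tail, tip))
```

An arrow pointing down and to the left falls in the third quadrant, so its angle lands between 180 and 270 degrees.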

Now you have the angle, and this angle is always measured between the same references. That's cool, because we can undo the rotation of the original image as follows:

                # Deep copy for rotation (if needed):
                rotatedImg = croppedImg.copy()
                # Undo rotation while padding output image:
                rotatedImg = rotateBound(rotatedImg, alpha)
                cv2.imshow("rotatedImg", rotatedImg)
                cv2.waitKey(0)
            else:
                print("K-Means did not return enough points, skipping...")
        else:
            print("Did not find enough end points on image, skipping...")

This yields the following result:

The arrow will always point to the right regardless of its original angle. Use this as normalization for a batch of training images, if you want to classify each arrow in its own class.

Now, you noticed that I used a function to rotate the image: rotateBound. This function is taken from here. It correctly pads the image after rotation, so you do not end up with a rotated image that is cropped incorrectly.

This is the definition and implementation of rotateBound:

def rotateBound(image, angle):
    # grab the dimensions of the image and then determine the
    # center
    (h, w) = image.shape[:2]
    (cX, cY) = (w // 2, h // 2)
    # grab the rotation matrix (applying the negative of the
    # angle to rotate clockwise), then grab the sine and cosine
    # (i.e., the rotation components of the matrix)
    M = cv2.getRotationMatrix2D((cX, cY), -angle, 1.0)
    cos = np.abs(M[0, 0])
    sin = np.abs(M[0, 1])
    # compute the new bounding dimensions of the image
    nW = int((h * sin) + (w * cos))
    nH = int((h * cos) + (w * sin))
    # adjust the rotation matrix to take into account translation
    M[0, 2] += (nW / 2) - cX
    M[1, 2] += (nH / 2) - cY
    # perform the actual rotation and return the image
    return cv2.warpAffine(image, M, (nW, nH))
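The padding logic boils down to the size of the axis-aligned box that encloses the rotated image. A minimal sketch of just that arithmetic, with no OpenCV involved (the helper name `rotated_bounds` and the sample sizes are hypothetical):

```python
import math

def rotated_bounds(width, height, angle_degrees):
    """Size of the axis-aligned box that encloses a width x height image
    after rotating it by angle_degrees about its center."""
    theta = math.radians(angle_degrees)
    cos = abs(math.cos(theta))
    sin = abs(math.sin(theta))
    new_width = int((height * sin) + (width * cos))
    new_height = int((height * cos) + (width * sin))
    return new_width, new_height

print(rotated_bounds(100, 40, 0))    # no rotation keeps the size
print(rotated_bounds(100, 40, 90))   # a quarter turn swaps width and height
print(rotated_bounds(100, 100, 45))  # a diagonal turn needs a larger canvas
```

Because only the absolute values of the sine and cosine are used, the canvas size is the same for clockwise and counter-clockwise rotations.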

These are results for the rest of your arrows. The tip (always in red), the tail (always in blue) and their "projective normalization" - always pointing to the right:

What remains is to collect samples of your different arrow classes, set up a classifier, train it with your samples and test it with the straightened image coming from the last processing block we examined.
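To give an idea of how little is needed once the arrows are normalized, here is a toy nearest-neighbour sketch on raw pixels. It is only a stand-in for a real k-NN or SVM: the helper names, the 5x5 "images" and the class names are all made up, and it assumes every sample has already been resized to the same dimensions.

```python
import numpy as np

def flatten_images(images):
    """Stack equally sized grayscale images into one row per sample."""
    return np.stack([np.asarray(img, dtype=np.float32).ravel() for img in images])

def predict_1nn(train_images, train_labels, query_image):
    """Return the label of the training image closest to the query
    (Euclidean distance on raw pixel values)."""
    train = flatten_images(train_images)
    query = np.asarray(query_image, dtype=np.float32).ravel()
    distances = np.linalg.norm(train - query, axis=1)
    return train_labels[int(np.argmin(distances))]

# Hypothetical 5x5 training samples for two made-up classes:
thin = np.zeros((5, 5), dtype=np.uint8)
thin[2, :] = 255
thick = np.zeros((5, 5), dtype=np.uint8)
thick[1:4, :] = 255

# A slightly damaged "thin" sample as the query:
query = thin.copy()
query[2, 0] = 0

prediction = predict_1nn([thin, thick], ["thin", "thick"], query)
print(prediction)
```

With real data you would want several samples per class and a proper train/test split, as mentioned in the outline.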

Some remarks: Some arrows, like the one that is not filled, failed the end-point identification part and thus did not yield enough points for clustering. Those arrows are skipped by the algorithm. The problem is tougher than initially thought, right? I recommend doing some research on the topic because, no matter how "easy" the task seems, in the end it will be performed by an automated "smart" system. And those systems aren't really that smart at the end of the day.