I want to project an image from a spherical projection to a cubemap. From what I understood from studying the maths, I need to create a (theta, phi) distribution for each pixel and then convert it into the Cartesian system to get a normalized pixel map.
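The (theta, phi) to Cartesian conversion described above can be sketched as follows. This assumes the convention the code below appears to use: theta is the azimuth measured from +X towards +Y, and phi is the polar angle measured from +Z. The function name `spherical_to_cartesian` is hypothetical.

```python
import numpy as np

def spherical_to_cartesian(theta, phi, r=1.0):
    """Convert (theta, phi) angles to Cartesian coordinates.

    Assumed convention: theta is the azimuth from +X towards +Y,
    phi is the polar angle from +Z. Works on scalars or whole grids.
    """
    x = r * np.cos(theta) * np.sin(phi)
    y = r * np.sin(theta) * np.sin(phi)
    z = r * np.cos(phi)
    # last axis holds (x, y, z)
    return np.stack((x, y, z), axis=-1)

# theta = 0, phi = pi/2 points along +X, i.e. the center of the X-positive face
print(np.round(spherical_to_cartesian(0.0, np.pi / 2), 6))
```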

I used the following code to do so:

```python
import numpy as np

theta = 0
phi = np.pi / 2
squareLength = 2048

# theta/phi distribution for the X-positive face
t = np.linspace(theta + np.pi / 4, theta - np.pi / 4, squareLength)
p = np.linspace(phi + np.pi / 4, phi - np.pi / 4, squareLength)
x, y = np.meshgrid(t, p)

# converting into the Cartesian system for the X-positive face
# (r is the distance from the sphere center to the cube plane; X is constantly 0.5)
X = np.full_like(y, 0.5)
r = X / (np.cos(x) * np.sin(y))
Y = r * np.sin(x) * np.sin(y)
Z = r * np.cos(y)
XYZ = np.stack((X, Y, Z), axis=2)

# shifting pixels from the negative side
XYZ = XYZ + [0, 0.5, 0.5]

# since I want to project onto the X-positive face, my maps should be
x_map = -XYZ[:, :, 1] * squareLength
y_map = XYZ[:, :, 2] * squareLength
```
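The projection step in the code above scales each direction by r = 0.5 / (cos θ sin φ) so that its X component lands on the plane X = 0.5. A standalone sketch of just that ray/plane intersection, using the same axis convention (the function name `project_to_x_face` is hypothetical), shows for example that on the equator the face coordinate is Y = 0.5·tan θ:

```python
import numpy as np

def project_to_x_face(theta, phi, half_size=0.5):
    """Intersect rays from the sphere center with the plane X = half_size.

    Same convention as the code above:
    X = r cos(theta) sin(phi), Y = r sin(theta) sin(phi), Z = r cos(phi),
    with r chosen so that X equals half_size. Only meaningful where
    cos(theta) * sin(phi) > 0, i.e. for rays pointing towards +X.
    """
    r = half_size / (np.cos(theta) * np.sin(phi))
    X = np.full_like(r, half_size)
    Y = r * np.sin(theta) * np.sin(phi)
    Z = r * np.cos(phi)
    return X, Y, Z

# On the equator (phi = pi/2), Y reduces to half_size * tan(theta)
theta = np.linspace(-np.pi / 4, np.pi / 4, 5)
X, Y, Z = project_to_x_face(theta, np.full_like(theta, np.pi / 2))
print(np.allclose(Y, 0.5 * np.tan(theta)))  # True
```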

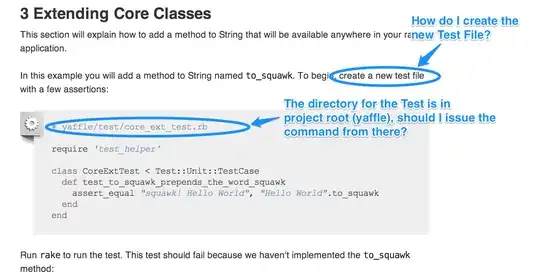

The map created above should give me the desired result with cv2.remap(), but it does not. I then tried looping through the pixels and implementing my own remap without interpolation or extrapolation. By trial and error, I arrived at the following loop, which gives me the correct result:

```python
for i in range(2048):
    for j in range(2048):
        try:
            image[int(y_map[i, j]), int(x_map[i, j])] = im[i, j]
        except IndexError:
            # skip positions past the image bounds (negative indices still wrap)
            pass
```

This is the reverse of what cv2.remap() does, which is defined as `dst(x,y) = src(mapx(x,y), mapy(x,y))`.
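To make the difference concrete: `cv2.remap()` pulls (gathers) each output pixel from the source position stored in the maps, while the loop above pushes (scatters) each source pixel to the position stored in the maps. Below is a minimal NumPy sketch of both directions with nearest-neighbour rounding and no interpolation. The function names are hypothetical, this is not OpenCV's implementation, and unlike the loop it rounds instead of truncating and skips all out-of-range positions.

```python
import numpy as np

def gather_remap(src, map_x, map_y):
    """Backward mapping, as in cv2.remap: dst[y, x] = src[map_y[y, x], map_x[y, x]].

    map_x / map_y have the shape of the *output* and hold source coordinates.
    Nearest-neighbour sampling; out-of-range samples are left as 0.
    """
    xs = np.rint(map_x).astype(int)
    ys = np.rint(map_y).astype(int)
    valid = (xs >= 0) & (xs < src.shape[1]) & (ys >= 0) & (ys < src.shape[0])
    dst = np.zeros(map_x.shape + src.shape[2:], dtype=src.dtype)
    dst[valid] = src[ys[valid], xs[valid]]
    return dst

def scatter_remap(src, map_x, map_y, out_shape):
    """Forward mapping, like the loop above: dst[map_y[i, j], map_x[i, j]] = src[i, j].

    map_x / map_y have the shape of the *source* and hold destination coordinates.
    """
    xs = np.rint(map_x).astype(int)
    ys = np.rint(map_y).astype(int)
    valid = (xs >= 0) & (xs < out_shape[1]) & (ys >= 0) & (ys < out_shape[0])
    dst = np.zeros(tuple(out_shape) + src.shape[2:], dtype=src.dtype)
    dst[ys[valid], xs[valid]] = src[valid]
    return dst

src = np.arange(12).reshape(3, 4)
rows, cols = np.indices(src.shape)
# pushing every pixel one column to the right (scatter form)...
fwd = scatter_remap(src, cols + 1, rows, src.shape)
# ...is the same as reading one column to the left (gather form)
bwd = gather_remap(src, cols - 1, rows)
print(np.array_equal(fwd, bwd))  # True
```

In other words, a map that works with the scatter loop describes the forward transform; `cv2.remap()` needs the maps of the inverse transform, indexed by destination pixel.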

I don't understand whether I did the math wrong, or whether there is a way to convert x_map and y_map into the correct form so that cv2.remap() gives the desired result.
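For reference, one common way to get maps in the form `cv2.remap()` expects is to start from the destination grid: for every pixel of the cube face, compute its 3D direction, convert that back to (theta, phi), and then to a pixel position in the spherical source image. The sketch below assumes the source is an equirectangular image whose columns span theta in [-π, π] and whose rows span phi in [0, π], with the same axis convention as the code above. The function name and the face orientation are assumptions and may need flipping for a given dataset.

```python
import numpy as np

def x_face_inverse_maps(face_size, src_width, src_height):
    """Build (map_x, map_y) for the X-positive cube face.

    Assumptions (adjust to your data):
    - source is equirectangular: columns span theta in [-pi, pi],
      rows span phi in [0, pi] (phi = 0 at the top row);
    - axes: X = cos(theta) sin(phi), Y = sin(theta) sin(phi), Z = cos(phi);
    - on the face, columns run from Y = +0.5 to -0.5, rows from Z = +0.5 to -0.5.
    """
    # Cartesian coordinates of every destination pixel on the plane X = 0.5
    Y, Z = np.meshgrid(np.linspace(0.5, -0.5, face_size),
                       np.linspace(0.5, -0.5, face_size))
    X = np.full_like(Y, 0.5)

    # back to spherical angles
    theta = np.arctan2(Y, X)
    phi = np.arccos(Z / np.sqrt(X**2 + Y**2 + Z**2))

    # angles -> source pixel coordinates, indexed by destination pixel
    map_x = (theta + np.pi) / (2 * np.pi) * (src_width - 1)
    map_y = phi / np.pi * (src_height - 1)
    # cv2.remap accepts float32 maps (CV_32FC1)
    return map_x.astype(np.float32), map_y.astype(np.float32)

map_x, map_y = x_face_inverse_maps(2048, 4096, 2048)
print(map_x.shape, map_x.dtype)
```

The resulting maps would then be passed as `cv2.remap(im, map_x, map_y, cv2.INTER_LINEAR)`, where OpenCV does the interpolation that the loop skips.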

DESIRED RESULT (produced by the loop above, without interpolation)