I am trying to load a table from Sqlserver database to Bigquery which is of size 27gb, 218million rows and 28columns.

The source table doesn't have any column that contain unique values inorder for Spark to partition the incoming data evenly. So I applied row_number() to the data I am reading as below:

spark = SparkSession.builder.appName('Read_from_Source').getOrCreate()

dataframe = spark.read.format('jdbc').option('url', URL).option('driver', 'con.microsoft.sqlserver.jdbc.SQLServerDriver).

option('user', user).

option('password', password).

option('dbtable', f'select *, row_number() over(ORDER BY (SELECT 1)) as row_num from {tablename}) as temp_load').

option('partitionColumn',row_num).

option('numPartitions', 400).

option('lowerBound', 1).

option('upperBound', countOfNumOfRows).

load()

dataframe.write.format('bigquery').option('table','tablename').mode('overwrite').save()

I gave the below configuration to the job:

> spark.submit.deployMode = Cluster

> spark.executor.instances=4

> spark.execuor.memory=2g spark.executor.cores=4 spark.driver.memory=2g

> spark.network.timeout=240

When I submit the job, it starts well but after some time if fails with the an exception connection reset which can be seen below.

Caused by: java.net.SocketException: Connection reset

at java.net.SocketInputStream.read(SocketInputStream.java:210)

at java.net.SocketInputStream.read(SocketInputStream.java:141)

at com.microsoft.sqlserver.jdbc.TDSChannel.read(IOBuffer.java:1981)

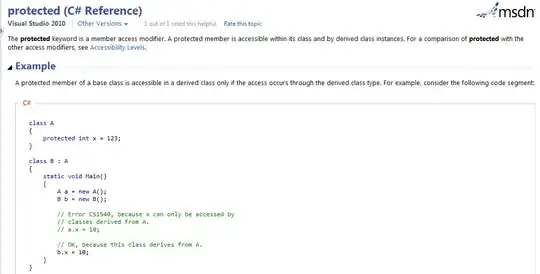

The executors and the stage could be seen below. Executors enter image description here

Stages of my job (There is only one stage since it is just read and load):

I thought the connection was breaking/interrupting while the data is being read and hence the connection reset exception. So I tried to increase spark.network.timeout to 100000 and increased the numPartitions from 200 to 400. The error is still the same.

I also increased the executor instances to 6 (I have 2 worker nodes) and executor memory to 6gb, driver memory to 4gb. I still see the same issue.

I am applying partitioning here while reading the data in order to avoid data skew. The memory given is also very high.

Edit1: There is no data going to driver here since the given lines is the only code I am running.

Is there anything wrong with the way I framed the code ? Can anyone suggest me a fix to this exception ?