I have a dataset with 5 classes. The number of images in each class is listed below:

Class 1: 6427 images

Class 2: 12678 images

Class 3: 9936 images

Class 4: 26077 images

Class 5: 1635 images

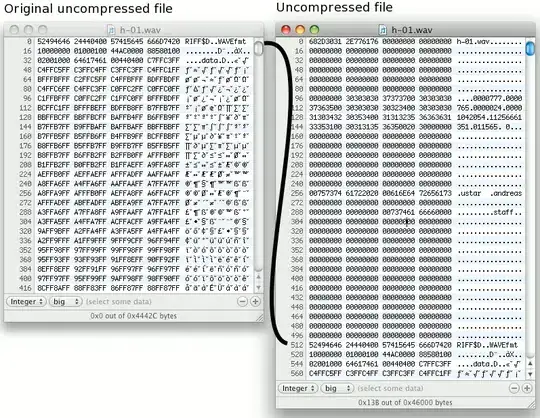
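To put numbers on the imbalance above, here is a minimal sketch that derives inverse-frequency class weights from the five counts. The formula (total / (number of classes * count)) is one common heuristic, not something the experiments in this post used, and the names `counts`, `inverse_frequency_weights`, and `class_weight` are illustrative. A dictionary of this shape, keyed by class index, is what Keras `Model.fit` accepts through its `class_weight` argument.

```python
# Image counts per class, copied from the list above.
counts = {"label1": 6427, "label2": 12678, "label3": 9936, "label4": 26077, "label5": 1635}


def inverse_frequency_weights(counts):
    """Return {class_index: weight} with weight = total / (n_classes * count)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {i: total / (n_classes * n) for i, n in enumerate(counts.values())}


class_weight = inverse_frequency_weights(counts)
for (name, n), w in zip(counts.items(), class_weight.values()):
    print(f"{name}: {n} images, weight {w:.3f}")
```

With this heuristic the smallest class (label5) gets the largest weight and the largest class (label4) the smallest, so every class contributes the same total weight to the loss.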

I trained my first CNN model on this dataset, and the model overfit, as can be seen below:

The overfitting is obvious in these models, as is the high sensitivity on label 4. I tried several approaches, such as data augmentation, and finally fixed the overfitting by using the VGG16 model. However, I still have a class imbalance problem, and the strange thing is that the bias has now shifted to label 1, as can be seen below.

To fix the class imbalance, I selected an equal number of images from each class (for example, 1600 images per class) and trained my model on that subset, but I again get high sensitivity on label 1. I do not know what the problem is. I should also mention that when I add a Dropout layer, I get the confusion matrix below, which is very bad:
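The equal-count subset described above can be sketched as follows. This is an assumption about how such a subset might be drawn, not the code actually used for the experiment: `sample_balanced` and `files_by_class` are hypothetical names, and the file names in the usage lines are made up.

```python
import random


def sample_balanced(files_by_class, n_per_class, seed=0):
    """Draw the same number of files from every class, without replacement."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    subset = {}
    for label, files in files_by_class.items():
        if len(files) < n_per_class:
            raise ValueError(f"{label} has only {len(files)} files")
        subset[label] = rng.sample(files, n_per_class)
    return subset


# Toy usage with made-up file names; real lists would come from the train directory.
files_by_class = {
    "label1": [f"label1_{i}.png" for i in range(10)],
    "label5": [f"label5_{i}.png" for i in range(4)],
}
balanced = sample_balanced(files_by_class, n_per_class=3)
print({label: len(files) for label, files in balanced.items()})
```

The smallest class caps `n_per_class`. With the counts listed earlier, that cap is 1635 images, which is consistent with choosing 1600 per class.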

Below is my first model, the one that overfit and showed high sensitivity on label 4:

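import os

import tensorflow as tf

from tensorflow.keras.preprocessing.image import ImageDataGenerator

os.chdir('.')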

train_path = './train'

test_path = './test'

valid_path = './val'

train_batches = ImageDataGenerator(preprocessing_function=tf.keras.applications.vgg16.preprocess_input) \
    .flow_from_directory(directory=train_path, target_size=(224, 224), classes=['label1', 'label2', 'label3', 'label4', 'label5'], batch_size=32)

test_batches = ImageDataGenerator(preprocessing_function=tf.keras.applications.vgg16.preprocess_input) \
    .flow_from_directory(directory=test_path, target_size=(224, 224), classes=['label1', 'label2', 'label3', 'label4', 'label5'], batch_size=32)

valid_batches = ImageDataGenerator(preprocessing_function=tf.keras.applications.vgg16.preprocess_input) \
    .flow_from_directory(directory=valid_path, target_size=(224, 224), classes=['label1', 'label2', 'label3', 'label4', 'label5'], batch_size=32)

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu', padding='same', input_shape=(224, 224, 3)))

model.add(tf.keras.layers.MaxPooling2D(pool_size=(2,2), strides=2))

model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same'))

model.add(tf.keras.layers.MaxPooling2D(pool_size=(2,2), strides=2))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(5, activation='softmax'))

model.summary()

model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.0001), loss='categorical_crossentropy', metrics=['accuracy'])

history = model.fit(x=train_batches, validation_data=valid_batches, epochs=10)

predictions = model.predict(x=test_batches, verbose=0)
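Since the question hinges on per-class sensitivity, here is a minimal sketch of how a confusion matrix and per-class recall can be computed from true and predicted class indices. The helper names and the toy labels are illustrative and not taken from the code above. One thing worth checking, stated as an assumption about how the matrices in this post were built: `flow_from_directory` shuffles by default, so comparing `predictions.argmax(axis=1)` against `test_batches.classes` only lines up if the test generator is created with `shuffle=False`.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def sensitivity_per_class(matrix):
    """Recall for each class: correct predictions divided by the row total."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


# Toy labels for three classes (hypothetical data, not results from this model).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 0, 2]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
recall = sensitivity_per_class(cm)
print(cm)
print(recall)
```

In the toy data, class 0 absorbs predictions from the other two classes, which is the same pattern as the high sensitivity on a single label described above.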