I am trying to compute the contrast around each pixel in an NxN window and save the results in a new image, where each pixel is the contrast of the corresponding neighborhood in the old image. From another post I got these steps:

1) Convert the image to, say, LAB and get the L channel.

2) Compute the max for an NxN neighborhood around each pixel.

3) Compute the min for an NxN neighborhood around each pixel.

4) Compute the contrast from the equation below at each pixel.

5) Insert the contrast as a pixel value in the new image.

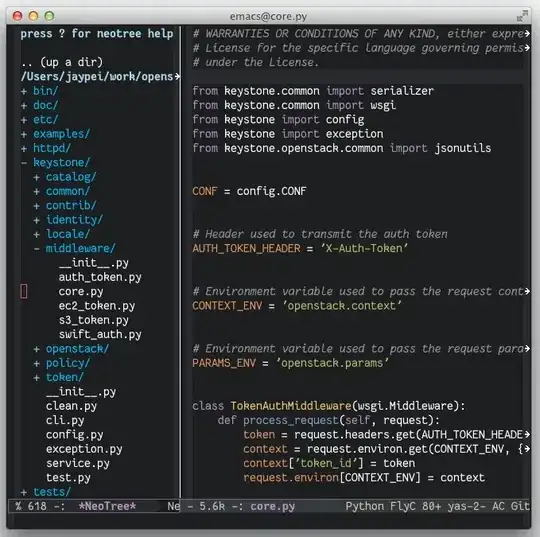
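
For reference, assuming the equation in the image is the one that `cmap` computes below, the per-window contrast (often called the Michelson contrast) would be:

$$
C = \frac{L_{\max} - L_{\min}}{L_{\max} + L_{\min}}
$$

Here $L_{\max}$ and $L_{\min}$ are the largest and smallest L values inside the NxN window; the symbol names are only shorthand and do not come from the other post.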

Currently I have the following:

    import cv2
    import numpy as np

    def cmap(roi):
        max = roi.reshape((roi.shape[0] * roi.shape[1], 3)).max(axis=0)
        min = roi.reshape((roi.shape[0] * roi.shape[1], 3)).min(axis=0)
        contrast = (max - min) / (max + min)
        return contrast

    def cm(img):
        # convert to LAB color space
        lab = cv2.cvtColor(img, cv2.COLOR_BGR2LAB)
        # separate channels
        L, A, B = cv2.split(lab)
        img_shape = L.shape
        size = 5
        shape = (L.shape[0] - size + 1, L.shape[1] - size + 1, size, size)
        strides = 2 * L.strides
        patches = np.lib.stride_tricks.as_strided(L, shape=shape, strides=strides)
        patches = patches.reshape(-1, size, size)
        output_img = np.array([cmap(roi) for roi in patches])
        cv2.imwrite("labtest.png", output_img)

The code complains about the size of roi. Is there a better (pythonic) way of doing what I want?
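
One likely cause of the error: `cmap` reshapes each patch to `(size * size, 3)`, but the patches are cut from the single L channel, so each one holds only `size * size` values. The list comprehension would also return a flat list of patches rather than a 2D image. Below is a minimal sketch of one way to do steps 2 to 4 without a Python loop. It assumes NumPy 1.20 or newer (for `sliding_window_view`), an odd window size, and edge replication at the borders; the function name `local_contrast` and the synthetic input are made up for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_contrast(channel, size=5):
    """Return (max - min) / (max + min) over each size x size window of a 2D array."""
    # Work in float so that max + min cannot overflow uint8.
    channel = np.asarray(channel, dtype=np.float64)

    # Replicate edge pixels so the output has the same shape as the input
    # (assumes an odd window size).
    pad = size // 2
    padded = np.pad(channel, pad, mode="edge")

    # windows has shape (H, W, size, size); it is a view, so no patches are copied.
    windows = sliding_window_view(padded, (size, size))
    local_max = windows.max(axis=(2, 3))
    local_min = windows.min(axis=(2, 3))

    # Leave the contrast at 0 where max + min == 0 (all-black window) to avoid 0/0.
    denom = local_max + local_min
    contrast = np.zeros_like(denom)
    np.divide(local_max - local_min, denom, out=contrast, where=denom > 0)
    return contrast


# Small synthetic example (hypothetical data standing in for the L channel).
L = np.full((6, 8), 100, dtype=np.uint8)
L[2, 3] = 200
contrast = local_contrast(L, size=5)
```

With a real image, `L` would be the channel returned by `cv2.split(lab)` as in the code above. The result is a float image in the range 0 to 1, so it probably needs scaling before saving, for example `cv2.imwrite("labtest.png", (contrast * 255).astype(np.uint8))`. If memory or speed matters, `cv2.dilate` and `cv2.erode` with a `size x size` kernel of ones compute the same sliding max and min and may be worth trying instead.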