I have a use case where we need to stream an open-source Delta table into multiple queries, each filtered on one of the partition columns. For example, given a Delta table partitioned on the `year` column:

Streaming query 1:

```
spark.readStream.format("delta").load("/tmp/delta-table/").where("year = 2013")
```

Streaming query 2:

```
spark.readStream.format("delta").load("/tmp/delta-table/").where("year = 2014")
```
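For reference, the two queries above can be written as a single loop that starts one stream per `year` value. This is a minimal PySpark sketch. It assumes an existing `SparkSession` with Delta Lake configured. The helper names `partition_predicate` and `start_partition_streams`, the console sink, and the checkpoint paths are hypothetical. Calling `explain()` on each streaming DataFrame before starting it prints that query's physical plan.

```python
# Hypothetical helpers; assumes `spark` is a pyspark.sql.SparkSession with Delta Lake configured.


def partition_predicate(column: str, value: int) -> str:
    """Build a SQL filter string such as "year = 2013" for one partition value."""
    return f"{column} = {value}"


def start_partition_streams(spark, path, column, values, checkpoint_root):
    """Start one streaming query per partition value and return them keyed by value.

    The console sink and `checkpoint_root` are illustrative assumptions only.
    """
    queries = {}
    for value in values:
        stream = (
            spark.readStream.format("delta")
            .load(path)
            .where(partition_predicate(column, value))
        )
        # Print the physical plan to see where the Filter sits relative to the scan.
        stream.explain()
        queries[value] = (
            stream.writeStream.format("console")
            # Each streaming query needs its own checkpoint location.
            .option("checkpointLocation", f"{checkpoint_root}/{column}={value}")
            .start()
        )
    return queries
```

Usage, with hypothetical paths: `queries = start_partition_streams(spark, "/tmp/delta-table/", "year", [2013, 2014], "/tmp/checkpoints")`. Each query reads the same Delta source independently and applies the same `where` filter as the original queries.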

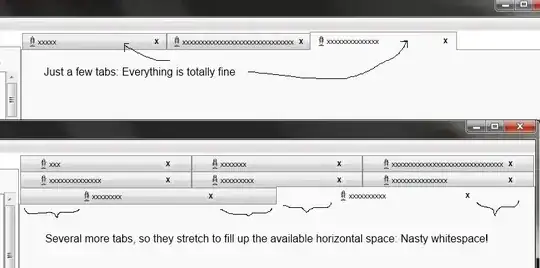

The physical plan shows the filter being applied after the streaming scan:

```
== Physical Plan ==
Filter (isnotnull(year#431) AND (year#431 = 2013))
+- StreamingRelation delta, []
```

My question: does predicate pushdown work with streaming queries on Delta? Can we stream only a specific partition from a Delta table?