I am having serious speed problems training YOLOv5 on my p2.xlarge AWS EC2 instance, which has an NVIDIA Tesla K80.

I noticed that training was even slower than on my desktop PC, which has an NVIDIA RTX 2060, so I ran inference on some images and these were the results:

I then tried a p2.8xlarge instance to train my deep learning model and the results were similar. I ran inference on the same images and, to my surprise, got similar results again.
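
For anyone who wants to reproduce this kind of comparison, here is a minimal sketch of how per-image inference time could be measured the same way on each machine. It is not the exact script behind the numbers in this post. The names `benchmark`, `summarize`, and `fake_inference` are hypothetical, and the stand-in workload is plain Python rather than YOLOv5. On a GPU, I would assume something like `torch.cuda.synchronize` needs to be passed as `sync`, because CUDA work is queued asynchronously and the clock can otherwise stop before the GPU has finished.

```python
import statistics
import time


def benchmark(fn, inputs, warmup=2, sync=None):
    """Return one latency (in seconds) per item in `inputs`.

    `sync` is an optional zero-argument callable that blocks until
    pending device work is done. Assumption: on a CUDA machine this
    would be something like torch.cuda.synchronize.
    """
    # Warm-up runs are not timed, so one-off start-up costs
    # (lazy initialization, caches) do not skew the results.
    for item in inputs[:warmup]:
        fn(item)

    latencies = []
    for item in inputs:
        if sync is not None:
            sync()  # make sure earlier work is finished before timing
        start = time.perf_counter()
        fn(item)
        if sync is not None:
            sync()  # wait for this call's work to finish
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies):
    """Convert latencies in seconds to summary statistics in milliseconds."""
    ms = [t * 1000.0 for t in latencies]
    return {
        "mean_ms": statistics.mean(ms),
        "median_ms": statistics.median(ms),
        "max_ms": max(ms),
    }


if __name__ == "__main__":
    # Hypothetical stand-in for a model call; replace with real inference.
    def fake_inference(n):
        return sum(i * i for i in range(n))

    print(summarize(benchmark(fake_inference, [10_000] * 20)))
```

Running the same script on the EC2 instance and on the desktop gives numbers that can be compared directly, since both exclude warm-up and both wait for the device before stopping the clock.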

Keep in mind that this p2.8xlarge instance has 488 GB of RAM, 32 vCPUs, and 8 Tesla K80 GPUs, so my question is: how can this p2.8xlarge be even slower at training YOLO than my desktop PC, which has just 64 GB of RAM and 16 cores?

Has anyone else run into this problem? Is there a solution or any tip you can give me?

In the end I trained the model on my PC, but it took far too long. Cloud environments are supposed to solve exactly this kind of problem.

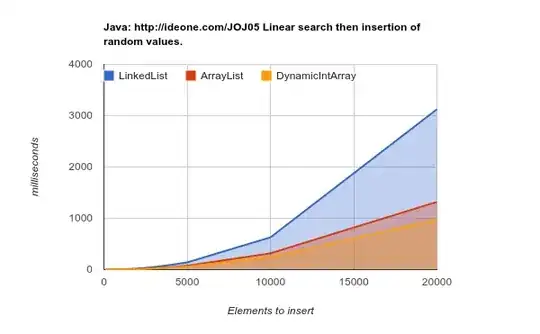

It seems I am not the only one this happens to: