The issue

I am trying to optimise some calculations that are so-called embarrassingly parallel, but I am finding that using Python's multiprocessing package actually slows things down.

My question is: am I doing something wrong, or is there an intrinsic reason why parallelisation slows things down here? Is it because I am using numba? Would other packages such as joblib or dask make much of a difference?

There are loads of similar questions, and the answer is always that the overhead costs more than the time saved, but those questions tend to revolve around very simple functions, whereas I would have expected something with nested loops to lend itself better to parallelisation. I have also not found any comparisons among joblib, multiprocessing and dask.

My function

I have a function that takes a one-dimensional NumPy array of shape (n,) as its argument and returns a NumPy array of shape (n, t), where each row is independent: row 0 of the output depends only on item 0 of the input, row 1 only on item 1, and so on.
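Because each output row depends only on the matching input element, the input can be split into chunks, each chunk processed on its own, and the partial results stacked back together without changing the answer. A minimal NumPy sketch of that property, using a hypothetical `row_fun` as a stand-in for the real calculation and t = 10 columns as in the toy example below:

```python
import numpy as np

T = 10  # number of output columns per input element (assumed, as in the toy example)


def row_fun(x):
    """Hypothetical stand-in: maps a 1-D array of shape (n,) to shape (n, T).

    Row i of the output depends only on x[i].
    """
    x = np.asarray(x, dtype=float)
    # Column c of row i is x[i] * c, just to make the columns distinct
    return x[:, None] * np.arange(T)


x = np.arange(1_000, dtype=float)
whole = row_fun(x)

# Split into 4 chunks, process each independently, then stack the pieces
chunks = np.array_split(x, 4)
pieces = [row_fun(chunk) for chunk in chunks]
stacked = np.vstack(pieces)

print(whole.shape)                     # (1000, 10)
print(np.array_equal(whole, stacked))  # True
```

This is exactly what the `np.array_split` / `np.vstack` pair in `my_func_parallel` relies on; the only question is whether the chunks are cheap enough to ship to other processes.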

The underlying calculation is optimised with numba, which speeds things up by several orders of magnitude.

Toy example - results

I cannot share the exact code, so I have come up with a toy example. The calculation defined in my_fun_numba is irrelevant; it is just some banal number crunching to keep the CPU busy.
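For comparison, the toy loop in `my_fun_non_numba` can also be written with NumPy broadcasting, which removes the Python-level loop without numba. This is a sketch assuming the same `dim2 = 10`; the names `my_fun_loop` and `my_fun_vectorised` are hypothetical. Since cos²(x) + sin²(x) = 1, every entry should equal 1 up to rounding, which makes the output easy to check.

```python
import numpy as np


def my_fun_loop(x, dim2=10):
    # Same nested loop as the toy example, in plain Python
    out = np.empty((len(x), dim2))
    for r in range(len(x)):
        for c in range(dim2):
            out[r, c] = np.cos(x[r]) ** 2 + np.sin(x[r]) ** 2
    return out


def my_fun_vectorised(x, dim2=10):
    # Compute one value per input element, then repeat it across dim2 columns
    x = np.asarray(x, dtype=float)
    row_vals = np.cos(x) ** 2 + np.sin(x) ** 2  # shape (n,)
    return np.broadcast_to(row_vals[:, None], (len(x), dim2)).copy()


x = np.arange(0, 1_000)
# allclose rather than exact equality: scalar and vectorised ufunc paths
# may differ in the last bits
assert np.allclose(my_fun_loop(x), my_fun_vectorised(x))
print(np.allclose(my_fun_vectorised(x), 1.0))  # True
```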

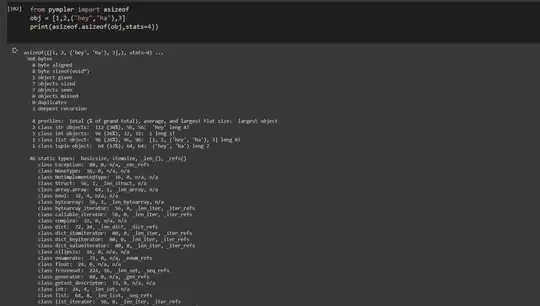

With the toy example, the results on my PC are these, and they are very similar to what I get with my actual code.

As you can see, splitting the input array into chunks and sending each of them to a multiprocessing.Pool actually slows things down compared with just using numba on a single core.
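Part of what `pool.map` has to do is pickle each input chunk to send it to a worker process and pickle each result array to send it back. The sketch below uses only NumPy and the standard-library `pickle` module to estimate how many bytes cross the process boundary for the 1e6-row case with 10 float64 columns. It measures size, not time, and the chunk count of 6 simply mirrors the "6 cores" column.

```python
import pickle

import numpy as np

n_rows, n_cols, n_chunks = 1_000_000, 10, 6

inp = np.arange(n_rows)  # integer input, as in the toy benchmark
chunks = np.array_split(inp, n_chunks)

# Bytes sent to the workers: one pickled chunk per task
sent = sum(len(pickle.dumps(c)) for c in chunks)

# Bytes sent back: each worker returns a (rows, n_cols) float64 array
results = [np.empty((len(c), n_cols)) for c in chunks]
received = sum(len(pickle.dumps(r)) for r in results)

print(f"raw output size: {n_rows * n_cols * 8 / 1e6:.0f} MB")  # 80 MB
print(f"pickled to workers: {sent / 1e6:.1f} MB")
print(f"pickled back: {received / 1e6:.1f} MB")
```

Every one of those bytes is serialised in the worker, copied through a pipe and deserialised in the parent, on top of the cost of starting the worker processes themselves.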

What I have tried

I have tried various combinations of the cache and nogil options in the numba.jit decorator, but the difference is minimal.
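Note that `nogil=True` only releases the GIL while the compiled function runs, which helps when the function is called from several threads in the same process. `multiprocessing.Pool` uses separate processes, each with its own GIL, so that option would not be expected to change these timings. A thread-based sketch of the same chunking, using `concurrent.futures.ThreadPoolExecutor`, with a hypothetical NumPy stand-in `rows_from_chunk` in place of the numba function (NumPy ufunc loops typically release the GIL on numeric arrays as well):

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def rows_from_chunk(x, dim2=10):
    # Hypothetical stand-in for the jitted function: returns shape (len(x), dim2).
    # In the real code this would be the @numba.jit(nopython=True, nogil=True) function.
    x = np.asarray(x, dtype=float)
    vals = np.cos(x) ** 2 + np.sin(x) ** 2
    return np.repeat(vals[:, None], dim2, axis=1)


def run_threaded(inp, func, workers=4):
    # Threads share memory, so chunks are passed by reference: no pickling,
    # and no new interpreter has to start up and import numpy.
    chunks = np.array_split(inp, workers)
    with ThreadPoolExecutor(max_workers=workers) as ex:
        parts = list(ex.map(func, chunks))  # map preserves chunk order
    return np.vstack(parts)


inp = np.arange(100_000)
out = run_threaded(inp, rows_from_chunk, workers=4)
print(out.shape)  # (100000, 10)
```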

I have profiled the code (a single run, not the timeit.Timer part) with PyCharm and, if I understand the output correctly, most of the time is spent waiting for the pool.

[PyCharm profiler output, sorted by time and sorted by own time: screenshots not available]
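A comparable single-run profile can be taken with the standard-library `cProfile` and `pstats` modules. Sorting by `"cumulative"` roughly corresponds to a total "time" view that includes callees, and `"tottime"` to "own time" (time spent in the function body itself). The profiled function `work` is a hypothetical stand-in. cProfile only sees the current process, so when a Pool is involved the parent typically shows its time inside waiting and lock-acquire calls, while the actual computation happens in worker processes that the profiler does not observe.

```python
import cProfile
import io
import pstats

import numpy as np


def work(n):
    # Hypothetical stand-in for one run of the calculation
    x = np.arange(n, dtype=float)
    return np.cos(x) ** 2 + np.sin(x) ** 2


profiler = cProfile.Profile()
profiler.enable()
work(1_000_000)
profiler.disable()

reports = {}
for key in ("cumulative", "tottime"):  # roughly "time" and "own time"
    buf = io.StringIO()
    pstats.Stats(profiler, stream=buf).sort_stats(key).print_stats(5)
    reports[key] = buf.getvalue()
    print(f"--- sorted by {key} ---")
    print(reports[key])
```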

Toy example - the code

```python
import multiprocessing
import timeit
from multiprocessing import Pool

import numba
import numpy as np
import pandas as pd


@numba.jit(nopython=True, nogil=True, cache=True)
def my_fun_numba(x):
    dim2 = 10
    n = len(x)
    out = np.empty((n, dim2))
    for r in range(n):
        for c in range(dim2):
            out[r, c] = np.cos(x[r]) ** 2 + np.sin(x[r]) ** 2
    return out


def my_fun_non_numba(x):
    # Identical to my_fun_numba, but runs as plain Python
    dim2 = 10
    n = len(x)
    out = np.empty((n, dim2))
    for r in range(n):
        for c in range(dim2):
            out[r, c] = np.cos(x[r]) ** 2 + np.sin(x[r]) ** 2
    return out


def my_func_parallel(inp, func, cpus=None):
    # Default: use all cores but one
    if cpus is None:
        cpus = max(1, multiprocessing.cpu_count() - 1)
    # One chunk per process; rows are independent, so results can be stacked
    inp_split = np.array_split(inp, cpus)
    # A new pool (and new worker processes) is created on every call
    pool = Pool(cpus)
    out = np.vstack(pool.map(func, inp_split))
    pool.close()
    pool.join()
    return out


if __name__ == "__main__":
    inputs = np.array([100, 10e3, 1e6]).astype(int)
    res = pd.DataFrame(index=inputs,
                       columns=['no paral, no numba', 'no paral, numba',
                                'numba 6 cores', 'numba 12 cores'])
    r = 3  # number of repeats; the fastest one is kept
    n = 1  # calls per repeat

    for i in inputs:
        my_arg = np.arange(0, i)
        res.loc[i, 'no paral, no numba'] = min(
            timeit.Timer("my_fun_non_numba(my_arg)", globals=globals())
            .repeat(repeat=r, number=n))
        res.loc[i, 'no paral, numba'] = min(
            timeit.Timer("my_fun_numba(my_arg)", globals=globals())
            .repeat(repeat=r, number=n))
        res.loc[i, 'numba 6 cores'] = min(
            timeit.Timer("my_func_parallel(my_arg, my_fun_numba, cpus=6)", globals=globals())
            .repeat(repeat=r, number=n))
        res.loc[i, 'numba 12 cores'] = min(
            timeit.Timer("my_func_parallel(my_arg, my_fun_numba, cpus=12)", globals=globals())
            .repeat(repeat=r, number=n))

    print(res)
```