I want to ask: what is the difference between running FastAPI with Gunicorn and Uvicorn workers, running it directly with Python, and using the default tiangolo image?

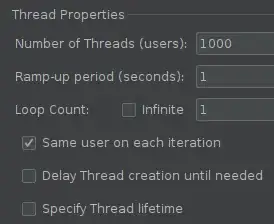

I stress-tested these setups using JMeter with the following thread properties:

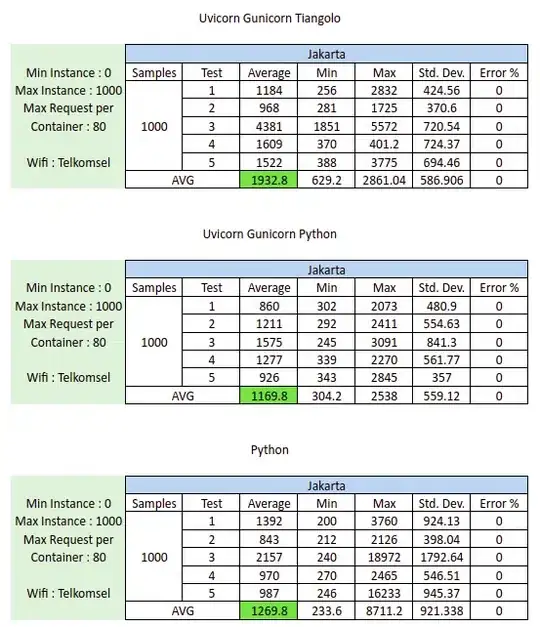

From these tests, I got the following results:

The three setups I tested were:

- A Dockerfile based on the tiangolo image

- A Dockerfile based on python:3.8-slim-buster, started with a gunicorn command

- A Dockerfile based on python:3.8-slim-buster, started with python

This is my Dockerfile for case 1 (Tiangolo base):

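```dockerfile
FROM tiangolo/uvicorn-gunicorn-fastapi:python3.8-slim

# Build tools for requirements that compile C extensions
RUN apt-get update && apt-get install wget gcc -y

# WORKDIR creates the directory if it does not exist, so no separate mkdir is needed
WORKDIR /app

COPY ./requirements.txt /app/requirements.txt

RUN python -m pip install --upgrade pip
RUN pip install --no-cache-dir -r /app/requirements.txt

COPY . /app

# No CMD here: the base image's default command starts Gunicorn with Uvicorn workers
```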
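For reference: as I understand the tiangolo image's documentation, its default command picks the number of Gunicorn workers from the CPU count and the environment variables `WORKERS_PER_CORE`, `MAX_WORKERS` and `WEB_CONCURRENCY`. The function below is my own simplified sketch of that rule, not the image's actual code (the name `compute_workers` and the minimum of 2 workers are my reading of it). If it is right, case 1 probably runs at least two worker processes on `--cpu 2`, while case 2 pins `--workers 1`.

```python
import multiprocessing
import os
from typing import Optional


def compute_workers(
    cores: int,
    workers_per_core: float = 1.0,
    max_workers: Optional[int] = None,
    web_concurrency: Optional[int] = None,
) -> int:
    """Approximate the tiangolo image's worker count (simplified sketch)."""
    if web_concurrency is not None:
        # An explicit WEB_CONCURRENCY overrides the automatic calculation.
        if web_concurrency <= 0:
            raise ValueError("web_concurrency must be positive")
        return web_concurrency
    # Default: cores * WORKERS_PER_CORE, but never fewer than 2 workers.
    workers = max(int(workers_per_core * cores), 2)
    if max_workers is not None:
        # MAX_WORKERS caps the automatic value.
        workers = min(workers, max_workers)
    return workers


def _env_int(name: str) -> Optional[int]:
    # Read an optional integer environment variable.
    value = os.getenv(name)
    return int(value) if value else None


if __name__ == "__main__":
    # Note: inside a container, cpu_count() may report more CPUs than the
    # platform actually allocates to the instance.
    print(
        compute_workers(
            cores=multiprocessing.cpu_count(),
            workers_per_core=float(os.getenv("WORKERS_PER_CORE", "1")),
            max_workers=_env_int("MAX_WORKERS"),
            web_concurrency=_env_int("WEB_CONCURRENCY"),
        )
    )
```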

This is my Dockerfile for case 2 (Python base with gunicorn command):

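```dockerfile
FROM python:3.8-slim-buster

# Update and install in one layer so the package index is never stale.
# (python3-pip is probably redundant: the official python image already ships pip.)
RUN apt-get update --fix-missing && \
    DEBIAN_FRONTEND=noninteractive apt-get install -y libgl1-mesa-dev python3-pip git

# WORKDIR creates /usr/src/app if it does not exist
WORKDIR /usr/src/app

COPY ./requirements.txt /usr/src/app/requirements.txt

RUN pip3 install --upgrade pip setuptools && \
    pip3 install -r ./requirements.txt

COPY . /usr/src/app

# Exec form, so Gunicorn runs as PID 1 and receives SIGTERM directly.
# Note: --threads only affects Gunicorn's gthread worker; Uvicorn workers ignore it.
ENTRYPOINT ["gunicorn", "--bind", ":8080", "--workers", "1", "--threads", "8", "main:app", "--worker-class", "uvicorn.workers.UvicornH11Worker", "--preload", "--timeout", "60", "--worker-tmp-dir", "/dev/shm"]
```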
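The same case 2 flags could also live in a Gunicorn config file, which is plain Python. This is a sketch assuming Gunicorn 20 or newer; `GUNICORN_WORKERS` is a hypothetical variable name of my own so the worker count can be tuned without rebuilding. According to the Gunicorn settings docs, `threads` only applies to the gthread worker, so `--threads 8` is left out here.

```python
# gunicorn.conf.py: sketch equivalent to the case 2 command-line flags
import os

# Cloud Run injects PORT (8080 by default); fall back to 8080 locally.
bind = f":{os.environ.get('PORT', '8080')}"

# Hypothetical GUNICORN_WORKERS variable; the original command hard-codes 1.
workers = int(os.environ.get("GUNICORN_WORKERS", "1"))

# Each Uvicorn worker runs an asyncio event loop; FastAPI runs plain `def`
# endpoints in a threadpool, so Gunicorn's `threads` setting is not used.
worker_class = "uvicorn.workers.UvicornH11Worker"

preload_app = True           # --preload: import the app once before forking
timeout = 60                 # --timeout 60
worker_tmp_dir = "/dev/shm"  # --worker-tmp-dir: heartbeat files on tmpfs
```

It would be started with `gunicorn -c gunicorn.conf.py main:app`, for example as `ENTRYPOINT ["gunicorn", "-c", "gunicorn.conf.py", "main:app"]`.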

This is my Dockerfile for case 3 (Python base with python command):

```dockerfile
FROM python:3.8-slim-buster

RUN apt-get update --fix-missing && \
    DEBIAN_FRONTEND=noninteractive apt-get install -y libgl1-mesa-dev python3-pip git

# WORKDIR creates /usr/src/app if it does not exist
WORKDIR /usr/src/app

COPY ./requirements.txt /usr/src/app/requirements.txt

# The 2020 resolver is the default since pip 20.3, so the old
# --use-feature=2020-resolver flag is not needed after upgrading pip
RUN pip3 install --upgrade pip setuptools && \
    pip3 install -r ./requirements.txt

COPY . /usr/src/app

# A single Python process: main.py itself must start the server
CMD ["python3", "/usr/src/app/main.py"]
```

I'm confused: the results above look fairly similar. What is the difference between these three methods, and which one is best for production? I'm sorry, I'm new to deploying APIs in production, and I need some advice on this case. Thank you.

This is my Cloud Run command:

```shell
gcloud builds submit --tag gcr.io/gaguna3/priceengine

gcloud run deploy backend-pure-python \
  --image="gcr.io/gaguna3/priceengine" \
  --region asia-southeast2 \
  --allow-unauthenticated \
  --platform managed \
  --memory 4Gi \
  --cpu 2 \
  --timeout 900 \
  --project=gaguna3
```