I need to draw a two-dimensional grid of squares with centered text on them onto a (transparent) PNG file. The tiles need to have a sufficiently high resolution so that the text does not get pixelated too much.

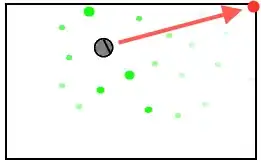

For testing purposes I create a 2048x2048px 32-bit (transparency) PNG Image with 128x128px tiles like for example that one:

The problem is that I need to do this with reasonable performance. All methods I have tried so far took more than 100ms to complete, while I need this to take less than 10ms at most. Apart from that, the program generating these images needs to be cross-platform and support WebAssembly (but even if you have, for example, an idea how to do this using POSIX threads etc., I would gladly take that as a starting point, too).

.NET 5 Implementation

    using System.Diagnostics;
    using System;
    using System.Drawing;

    namespace ImageGeneratorBenchmark
    {
        class Program
        {
            static int rowColCount = 16;
            static int tileSize = 128;

            static void Main(string[] args)
            {
                var watch = Stopwatch.StartNew();

                Bitmap bitmap = new Bitmap(rowColCount * tileSize, rowColCount * tileSize);
                Graphics graphics = Graphics.FromImage(bitmap);

                Brush[] usedBrushes = { Brushes.Blue, Brushes.Red, Brushes.Green, Brushes.Orange, Brushes.Yellow };

                int totalCount = rowColCount * rowColCount;
                Random random = new Random();

                StringFormat format = new StringFormat();
                format.LineAlignment = StringAlignment.Center;
                format.Alignment = StringAlignment.Center;

                for (int i = 0; i < totalCount; i++)
                {
                    int x = i % rowColCount * tileSize;
                    int y = i / rowColCount * tileSize;

                    graphics.FillRectangle(usedBrushes[random.Next(0, usedBrushes.Length)], x, y, tileSize, tileSize);
                    graphics.DrawString(i.ToString(), SystemFonts.DefaultFont, Brushes.Black, x + tileSize / 2, y + tileSize / 2, format);
                }

                bitmap.Save("Test.png");

                watch.Stop();
                Console.WriteLine($"Output took {watch.ElapsedMilliseconds} ms.");
            }
        }
    }

This takes around 115ms on my machine. I am using the System.Drawing.Common NuGet package here.

Saving the bitmap takes roughly 55ms and drawing to the graphics object in the loop takes roughly 60ms, of which 40ms can be attributed to drawing the text.

Rust Implementation

    use std::path::Path;
    use std::time::Instant;
    use image::{Rgba, RgbaImage};
    use imageproc::{drawing::{draw_text_mut, draw_filled_rect_mut, text_size}, rect::Rect};
    use rusttype::{Font, Scale};
    use rand::Rng;

    #[derive(Default)]
    struct TextureAtlas {
        segment_size: u16, // The side length of the tile
        row_col_count: u8, // The amount of tiles in horizontal and vertical direction
        current_segment: u32 // Points to the next segment, that will be used
    }

    fn main() {
        let before = Instant::now();

        let mut atlas = TextureAtlas {
            segment_size: 128,
            row_col_count: 16,
            ..Default::default()
        };

        let path = Path::new("test.png");
        let colors = vec![Rgba([132u8, 132u8, 132u8, 255u8]), Rgba([132u8, 255u8, 32u8, 120u8]), Rgba([200u8, 255u8, 132u8, 255u8]), Rgba([255u8, 0u8, 0u8, 255u8])];

        let mut image = RgbaImage::new(2048, 2048);

        let font = Vec::from(include_bytes!("../assets/DejaVuSans.ttf") as &[u8]);
        let font = Font::try_from_vec(font).unwrap();

        let font_size = 40.0;
        let scale = Scale {
            x: font_size,
            y: font_size,
        };

        // Draw random color rects for benchmarking
        for i in 0..256 {
            let rand_num = rand::thread_rng().gen_range(0..colors.len());

            draw_filled_rect_mut(
                &mut image,
                Rect::at((atlas.current_segment as i32 % atlas.row_col_count as i32) * atlas.segment_size as i32, (atlas.current_segment as i32 / atlas.row_col_count as i32) * atlas.segment_size as i32)
                    .of_size(atlas.segment_size.into(), atlas.segment_size.into()),
                colors[rand_num]);

            let number = i.to_string();
            //let text = &number[..];
            let text = number.as_str(); // Somehow this conversion takes ~15ms here for 255 iterations, whereas it should normally only be less than 1us
            let (w, h) = text_size(scale, &font, text);

            draw_text_mut(
                &mut image,
                Rgba([0u8, 0u8, 0u8, 255u8]),
                (atlas.current_segment % atlas.row_col_count as u32) * atlas.segment_size as u32 + atlas.segment_size as u32 / 2 - w as u32 / 2,
                (atlas.current_segment / atlas.row_col_count as u32) * atlas.segment_size as u32 + atlas.segment_size as u32 / 2 - h as u32 / 2,
                scale,
                &font,
                text);

            atlas.current_segment += 1;
        }

        image.save(path).unwrap();

        println!("Output took {:?}", before.elapsed());
    }

For Rust I was using the imageproc crate. Previously I used the piet-common crate, but the output took more than 300ms. With the imageproc crate I got around 110ms in release mode, which is on par with the C# version, but I think it will perform better with WebAssembly.

When I used a static string instead of converting the number from the loop (see comment), I got below 100ms execution time. For Rust, drawing to the image only takes around 30ms, but saving it takes 80ms.
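Since saving dominates the Rust timing, one thing that might be worth measuring is how much of the save time goes into deflate compression rather than into writing bytes. The following is a minimal sketch, not a measured result and not what the image crate does: a hypothetical `encode_png_stored` function that writes a valid PNG using only uncompressed ("stored") deflate blocks, with filter type 0 on every scanline. All function names here are made up for illustration, and only the standard library is used.

```rust
/// Builds the lookup table for the CRC-32 used by PNG chunks.
const fn make_crc_table() -> [u32; 256] {
    let mut table = [0u32; 256];
    let mut n = 0;
    while n < 256 {
        let mut c = n as u32;
        let mut k = 0;
        while k < 8 {
            c = if c & 1 != 0 { 0xEDB8_8320 ^ (c >> 1) } else { c >> 1 };
            k += 1;
        }
        table[n] = c;
        n += 1;
    }
    table
}

static CRC_TABLE: [u32; 256] = make_crc_table();

/// Table-driven CRC-32 over `data`.
fn crc32(data: &[u8]) -> u32 {
    let mut crc = 0xFFFF_FFFFu32;
    for &byte in data {
        crc = CRC_TABLE[((crc ^ byte as u32) & 0xFF) as usize] ^ (crc >> 8);
    }
    !crc
}

/// Adler-32 checksum that terminates a zlib stream.
fn adler32(data: &[u8]) -> u32 {
    let (mut a, mut b) = (1u32, 0u32);
    // 5552 bytes is the largest block whose sums cannot overflow a u32 before the modulo.
    for block in data.chunks(5552) {
        for &byte in block {
            a += byte as u32;
            b += a;
        }
        a %= 65_521;
        b %= 65_521;
    }
    (b << 16) | a
}

/// Appends one PNG chunk: length, type, data, CRC over type + data.
fn push_chunk(out: &mut Vec<u8>, kind: &[u8; 4], data: &[u8]) {
    out.extend_from_slice(&(data.len() as u32).to_be_bytes());
    let start = out.len();
    out.extend_from_slice(kind);
    out.extend_from_slice(data);
    let crc = crc32(&out[start..]);
    out.extend_from_slice(&crc.to_be_bytes());
}

/// Encodes tightly packed RGBA8 pixels as a PNG without any compression.
fn encode_png_stored(width: u32, height: u32, rgba: &[u8]) -> Vec<u8> {
    assert!(width > 0 && height > 0, "PNG dimensions must be non-zero");
    let stride = width as usize * 4;
    assert_eq!(rgba.len(), stride * height as usize, "buffer size mismatch");

    // Prefix every scanline with filter type 0 (no filtering).
    let mut raw = Vec::with_capacity((stride + 1) * height as usize);
    for line in rgba.chunks_exact(stride) {
        raw.push(0);
        raw.extend_from_slice(line);
    }

    // zlib header followed by stored deflate blocks of at most 65535 bytes.
    let mut zlib: Vec<u8> = vec![0x78, 0x01];
    let mut blocks = raw.chunks(65_535).peekable();
    while let Some(block) = blocks.next() {
        let len = block.len() as u16;
        zlib.push(if blocks.peek().is_none() { 1 } else { 0 }); // BFINAL flag, BTYPE = stored
        zlib.extend_from_slice(&len.to_le_bytes());
        zlib.extend_from_slice(&(!len).to_le_bytes());
        zlib.extend_from_slice(block);
    }
    zlib.extend_from_slice(&adler32(&raw).to_be_bytes());

    let mut png: Vec<u8> = vec![0x89, b'P', b'N', b'G', 0x0D, 0x0A, 0x1A, 0x0A];
    let mut ihdr = Vec::with_capacity(13);
    ihdr.extend_from_slice(&width.to_be_bytes());
    ihdr.extend_from_slice(&height.to_be_bytes());
    ihdr.extend_from_slice(&[8, 6, 0, 0, 0]); // 8 bits per channel, RGBA, no interlacing
    push_chunk(&mut png, b"IHDR", &ihdr);
    push_chunk(&mut png, b"IDAT", &zlib);
    push_chunk(&mut png, b"IEND", &[]);
    png
}
```

The returned bytes could be written with `std::fs::write("test.png", &png)`. The trade-off is file size: a 2048x2048 RGBA image ends up at roughly 16 MiB, and the two checksums still have to touch every byte, so whether this gets anywhere near the 10ms budget would have to be benchmarked.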

C++ Implementation

    #include <iostream>
    #include <cstdlib>
    #include <cstdio>  // getchar
    #include <ctime>   // time
    #define cimg_display 0
    #define cimg_use_png
    #include "CImg.h"
    #include <chrono>
    #include <string>

    using namespace cimg_library;
    using namespace std;

    /* Generate random numbers in an inclusive range. */
    int random(int min, int max)
    {
        static bool first = true;
        if (first)
        {
            srand(time(NULL));
            first = false;
        }
        return min + rand() % ((max + 1) - min);
    }

    int main() {
        auto t1 = std::chrono::high_resolution_clock::now();

        static int tile_size = 128;
        static int row_col_count = 16;

        // Create 2048x2048px image.
        CImg<unsigned char> image(tile_size*row_col_count, tile_size*row_col_count, 1, 3);

        // Make some colours.
        unsigned char cyan[] = { 0, 255, 255 };
        unsigned char black[] = { 0, 0, 0 };
        unsigned char yellow[] = { 255, 255, 0 };
        unsigned char red[] = { 255, 0, 0 };
        unsigned char green[] = { 0, 255, 0 };
        unsigned char orange[] = { 255, 165, 0 };

        unsigned char colors [] = { // This is terrible, but I don't know C++ very well.
            cyan[0], cyan[1], cyan[2],
            yellow[0], yellow[1], yellow[2],
            red[0], red[1], red[2],
            green[0], green[1], green[2],
            orange[0], orange[1], orange[2],
        };

        int total_count = row_col_count * row_col_count;

        for (int i = 0; i < total_count; i++)
        {
            int x = i % row_col_count * tile_size;
            int y = i / row_col_count * tile_size;

            int random_color_index = random(0, 4);
            unsigned char current_color [] = { colors[random_color_index * 3], colors[random_color_index * 3 + 1], colors[random_color_index * 3 + 2] };

            image.draw_rectangle(x, y, x + tile_size, y + tile_size, current_color, 1.0); // Force use of transparency. -> Does not work. Always outputs 24bit PNGs.

            auto s = std::to_string(i);

            CImg<unsigned char> imgtext;
            unsigned char color = 1;
            imgtext.draw_text(0, 0, s.c_str(), &color, 0, 1, 40); // Measure the text by drawing to an empty instance, so that the bounding box will be set automatically.

            image.draw_text(x + tile_size / 2 - imgtext.width() / 2, y + tile_size / 2 - imgtext.height() / 2, s.c_str(), black, 0, 1, 40);
        }

        // Save result image as PNG (libpng and GraphicsMagick are required).
        image.save_png("Test.png");

        auto t2 = std::chrono::high_resolution_clock::now();
        auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(t2 - t1).count();

        std::cout << "Output took " << duration << "ms.";
        getchar();
    }

I also reimplemented the same program in C++ using CImg. For .png output, libpng and GraphicsMagick are required, too. I am not very fluent in C++ and I did not even bother optimizing, because the save operation took ~200ms in Release mode, whereas the whole image generation, which is currently very unoptimized, took only 30ms. So this solution also falls way short of my goal.

Where I am right now

A graph of where I am right now. I will update this when I make some progress.

Why I am trying to do this and why it bothers me so much

I was asked in the comments to give a bit more context. I know this question is getting a bit bloated, but if you are interested, read on...

So basically I need to build a texture atlas for a .gltf file. I generate the .gltf file from data, and the primitives in it are assigned a texture based on the input data, too. To keep the number of draw calls small, I put as much geometry as possible into one single primitive and then use texture coordinates to map the texture to the model. GPUs have a maximum texture size, though. I will use 2048x2048 pixels, because the majority of devices support at least that. With 128x128px tiles that gives 16 x 16 = 256 tiles per atlas, so if I have more than 256 objects, I need to add a new primitive to the .gltf and generate another texture atlas. In some cases one texture atlas might be sufficient, in other cases I need up to 15-20.
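To make the atlas arithmetic concrete, here is a minimal sketch assuming one 128x128px tile per object in a 2048x2048px atlas. The function names (`atlases_needed`, `tile_location`, `tile_uv`) are hypothetical and only illustrate the bookkeeping, they are not taken from my actual code. The texture coordinates assume the origin is at the top-left corner of the image.

```rust
/// Side length of one atlas texture in pixels.
const ATLAS_SIZE: u32 = 2048;
/// Side length of one tile in pixels.
const TILE_SIZE: u32 = 128;

/// Number of tiles along one side of the atlas (16 for the sizes above).
const fn tiles_per_side() -> u32 {
    ATLAS_SIZE / TILE_SIZE
}

/// Number of tiles that fit into one atlas (256 for the sizes above).
const fn tiles_per_atlas() -> u32 {
    tiles_per_side() * tiles_per_side()
}

/// Number of atlases needed when every object gets its own tile.
fn atlases_needed(object_count: u32) -> u32 {
    // Ceiling division: a partially filled atlas still has to be generated.
    (object_count + tiles_per_atlas() - 1) / tiles_per_atlas()
}

/// Maps a global object index to (atlas index, pixel x, pixel y)
/// of the top-left corner of its tile.
fn tile_location(object_index: u32) -> (u32, u32, u32) {
    let atlas = object_index / tiles_per_atlas();
    let local = object_index % tiles_per_atlas();
    let x = (local % tiles_per_side()) * TILE_SIZE;
    let y = (local / tiles_per_side()) * TILE_SIZE;
    (atlas, x, y)
}

/// Normalized texture coordinates [u_min, v_min, u_max, v_max] of the tile
/// whose top-left corner is at pixel (x, y).
fn tile_uv(x: u32, y: u32) -> [f32; 4] {
    let size = ATLAS_SIZE as f32;
    [
        x as f32 / size,
        y as f32 / size,
        (x + TILE_SIZE) as f32 / size,
        (y + TILE_SIZE) as f32 / size,
    ]
}
```

For example, `atlases_needed(1500)` gives 6 and `atlases_needed(15000)` gives 59.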

The textures will have a (semi-)transparent background, maybe text and maybe some lines / hatches or simple symbols, that can be drawn with a path.

I have the whole system set up in Rust already and the .gltf generation is really efficient: I can generate 54000 vertices (= 1500 boxes, for example) in about 10ms, which is a common case. For this I need to generate 6 texture atlases, which is not really a problem on a multi-core system (7 threads: one for the .gltf, six for the textures). The problem is that generating one atlas takes about 100ms (or now 55ms), which makes the whole process more than 5 times slower.

Unfortunately it gets even worse, because another common case is 15000 objects. Generating the vertices (plus a lot of custom attributes, actually) and assembling the .gltf still only takes 96ms (540000 vertices / 20MB .gltf), but in that time I need to generate 59 texture atlases. I am working on an 8-core system, so at that point it becomes impossible to run them all in parallel and I have to generate ~9 atlases per thread (which means 55ms * 9 = 495ms), so again this is 5 times as much and creates a quite noticeable lag. In reality it currently takes more than 2.5 s, because I have not yet updated to the faster code and there seems to be additional slowdown.
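The estimate above can be written down as a small helper. This is only a sketch of the arithmetic: the function name is hypothetical, and it assumes the atlases are spread evenly over 7 worker threads (8 cores minus the one assembling the .gltf), that each atlas costs a constant 55ms, and that there is no overhead from contention.

```rust
/// Estimated wall-clock time in milliseconds when `atlas_count` atlases are
/// distributed evenly over `worker_threads` threads and each atlas takes
/// `ms_per_atlas` milliseconds to generate.
fn estimated_texture_time_ms(atlas_count: u32, worker_threads: u32, ms_per_atlas: u32) -> u32 {
    assert!(worker_threads > 0, "need at least one worker thread");
    // The slowest thread determines the total, so round the share up.
    let atlases_per_thread = (atlas_count + worker_threads - 1) / worker_threads;
    atlases_per_thread * ms_per_atlas
}
```

With these assumptions, `estimated_texture_time_ms(59, 7, 55)` yields 495, and `estimated_texture_time_ms(6, 6, 55)` yields 55 for the 1500-object case.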

What I need to do

I do understand that it will take some time to write out 4194304 32-bit pixels. But as far as I can see, because I am only writing to different parts of the image (for example only to the upper tile and so on) it should be possible to build a program that does this using multiple threads. That is what I would like to try and I would take any hint on how to make my Rust program run faster.
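As a starting point for the multi-threaded idea, here is a minimal sketch using only the standard library. It splits the RGBA buffer into 16 horizontal bands (one row of tiles each) with `chunks_mut`, so every scoped thread owns a disjoint slice and no locking is needed. The function name `fill_tiles_parallel` and the color callback are assumptions for illustration; text rendering and PNG encoding are not covered, and `std::thread` may not be usable on WebAssembly targets, so this only addresses the native case.

```rust
use std::thread;

const TILE_SIZE: usize = 128;
const TILES_PER_SIDE: usize = 16;
const IMAGE_SIZE: usize = TILE_SIZE * TILES_PER_SIDE; // 2048
const BYTES_PER_PIXEL: usize = 4; // RGBA8

/// Fills every tile with the color returned by `color_for_tile(tile_index)`.
/// Each row of tiles is processed on its own scoped thread.
fn fill_tiles_parallel<F>(color_for_tile: F) -> Vec<u8>
where
    F: Fn(usize) -> [u8; 4] + Sync,
{
    let mut pixels = vec![0u8; IMAGE_SIZE * IMAGE_SIZE * BYTES_PER_PIXEL];
    // One band = TILE_SIZE full scanlines = one row of tiles.
    let band_bytes = IMAGE_SIZE * TILE_SIZE * BYTES_PER_PIXEL;
    let color_for_tile = &color_for_tile;

    thread::scope(|scope| {
        for (tile_row, band) in pixels.chunks_mut(band_bytes).enumerate() {
            scope.spawn(move || {
                // Walk the band scanline by scanline, then tile by tile.
                for line in band.chunks_exact_mut(IMAGE_SIZE * BYTES_PER_PIXEL) {
                    let segments = line.chunks_exact_mut(TILE_SIZE * BYTES_PER_PIXEL);
                    for (tile_col, segment) in segments.enumerate() {
                        let color = color_for_tile(tile_row * TILES_PER_SIDE + tile_col);
                        for px in segment.chunks_exact_mut(BYTES_PER_PIXEL) {
                            px.copy_from_slice(&color);
                        }
                    }
                }
            });
        }
    });

    pixels
}
```

A call such as `fill_tiles_parallel(|i| [i as u8, 0, 0, 255])` returns the finished buffer once all threads have joined. Splitting by rows of tiles keeps each thread's slice contiguous in memory, which is what makes the safe `chunks_mut` split possible.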

If it helps I would also be willing to rewrite this in C or any other language, that can be compiled to wasm and can be called via Rust's FFI. So if you have suggestions for more performant libraries I would be very thankful for that too.

Edit

Update 1: I made all the suggested improvements for the C# version from the comments. Thanks for all of them. It is now at 115ms and almost exactly as fast as the Rust version, which makes me believe I am sort of hitting a dead end there and I would really need to find a way to parallelize this in order to make significant further improvements...

Update 2: Thanks to @pinkfloydx33 I was able to run the binary with around 60ms (including the first run) after publishing it with dotnet publish -p:PublishReadyToRun=true --runtime win10-x64 --configuration Release.

In the meantime I also tried other methods myself, namely Python with Pillow (~400ms), C# and Rust both with Skia (~314ms and ~260ms) and I also reimplemented the program in C++ using CImg (and libpng as well as GraphicsMagick).