and get image urls

    from urllib.request import urlopen
    from bs4 import BeautifulSoup
    import json

    AMEXurl = ['https://www.americanexpress.com/in/credit-cards/all-cards/?sourcecode=A0000FCRAA&cpid=100370494&dsparms=dc_pcrid_408453063287_kword_american%20express%20credit%20card_match_e&gclid=Cj0KCQiApY6BBhCsARIsAOI_GjaRsrXTdkvQeJWvKzFy_9BhDeBe2L2N668733FSHTHm96wrPGxkv7YaAl6qEALw_wcB&gclsrc=aw.ds']
    identity = ['filmstrip_container']

    html_1 = urlopen(AMEXurl[0])
    soup_1 = BeautifulSoup(html_1, 'lxml')
    address = soup_1.find('div', attrs={"class": identity[0]})

    for x in address.find_all('div', class_='filmstrip-imgContainer'):
        print(x.find('div').get('img'))

But I am getting the following output:

    None
    None
    None
    None
    None
    None
    None

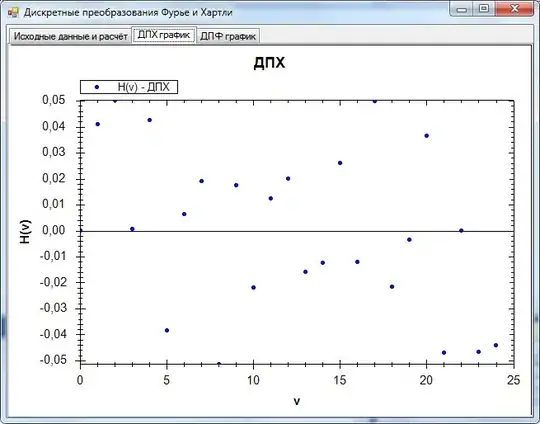

The following is a screenshot of the HTML code from which I am trying to get the image URLs:

This is the section of the page from which I'd like to get the URLs.

I'd like to know what changes I should make to the code so that I get all the image URLs.
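One possible cause of the `None` values: `Tag.get('img')` looks up an HTML attribute named `img` on the inner `div`, and a `div` has no such attribute. A minimal sketch of a likely fix is to find the `img` tag itself and read its `src` attribute. The markup below is a hypothetical stand-in for the real page (the real structure may differ), the `data-src` fallback is an assumption about lazy-loaded images, and `extract_image_urls` is an illustrative helper name. It uses `html.parser` only so that the sketch runs without `lxml`.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for the real page; the actual HTML may differ.
SAMPLE_HTML = """
<div class="filmstrip_container">
  <div class="filmstrip-imgContainer">
    <div><img src="https://example.com/card-a.png" alt="Card A"></div>
  </div>
  <div class="filmstrip-imgContainer">
    <div><img data-src="https://example.com/card-b.png" alt="Card B"></div>
  </div>
  <div class="filmstrip-imgContainer">
    <div></div>
  </div>
</div>
"""


def extract_image_urls(html):
    """Return the image URLs found inside each filmstrip image container."""
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", class_="filmstrip_container")
    if container is None:
        # The container is missing, e.g. because the page builds it with JavaScript.
        return []

    urls = []
    for item in container.find_all("div", class_="filmstrip-imgContainer"):
        img = item.find("img")  # look for the <img> tag, not an "img" attribute
        if img is None:
            continue
        # Assumption: lazy-loaded images may keep the URL in data-src instead of src.
        url = img.get("src") or img.get("data-src")
        if url:
            urls.append(url)
    return urls


print(extract_image_urls(SAMPLE_HTML))
```

If this still returns an empty list against the live page, the images may be inserted by JavaScript after the page loads, in which case the HTML returned by `urlopen` would not contain them.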