I'm trying to feed multiple inputs to a single input layer. More precisely, I'm building a neural-network atomic potential: the NN should predict the energy of a molecule from its atomic coordinates (atomic descriptors).

Take H2O, which has two H atoms and one O atom. The NN should be organized as follows: there is a separate input for the coordinates of each chemical element (one for H and one for O). Each input feeds its own graph, which produces a local (atomic) energy. The output layer is the sum of these energies and should match the target total energy. For an OH molecule this is easy: two parallel graphs, one for H and one for O, each produce an atomic energy, and the two energies are concatenated and summed to match the target total energy.

However, H2 (or H2O) has two H atoms. So I need to feed the first H atom to the H graph, feed the second H atom to the same graph, sum the resulting energies, and send the sum to the output layer, which should match the target total energy.
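Written out for H2O, the decomposition I am after looks like this, where $E_\mathrm{H}$ and $E_\mathrm{O}$ stand for the per-element graphs and $\mathbf{d}$ for the descriptor vector of one atom (these symbols are my own notation, introduced only for illustration):

$$
E_\mathrm{total}(\mathrm{H_2O}) = E_\mathrm{H}(\mathbf{d}_{\mathrm{H}_1}) + E_\mathrm{H}(\mathbf{d}_{\mathrm{H}_2}) + E_\mathrm{O}(\mathbf{d}_{\mathrm{O}})
$$

The same function $E_\mathrm{H}$, with the same weights, appears twice: once per H atom.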

Is there any way to perform this in Keras?

My current code, which cannot take two H atoms:

    from tensorflow import keras
    from tensorflow.keras import layers, backend as K
    from tensorflow.keras.layers import Dense, Lambda

    # n_cols: length of one atom's descriptor vector (defined elsewhere)

    # graph for H
    input1 = keras.Input(shape=(n_cols,), name="H_element")
    l1_1 = Dense(8, activation='relu')(input1)
    l1_2 = Dense(1, activation='linear', name='H_atomic_energy')(l1_1)  # atomic energy for H

    # graph for O
    input2 = keras.Input(shape=(n_cols,), name="O_element")
    l2_1 = Dense(8, activation='relu')(input2)
    l2_2 = Dense(1, activation='linear', name='O_atomic_energy')(l2_1)  # atomic energy for O

    # summation and output; the total energy is the target
    x = layers.concatenate([l1_2, l2_2])
    ReduceSum = Lambda(lambda z: K.sum(z, axis=1, keepdims=True))
    output = ReduceSum(x)

    model = keras.Model(inputs=[input1, input2], outputs=output)

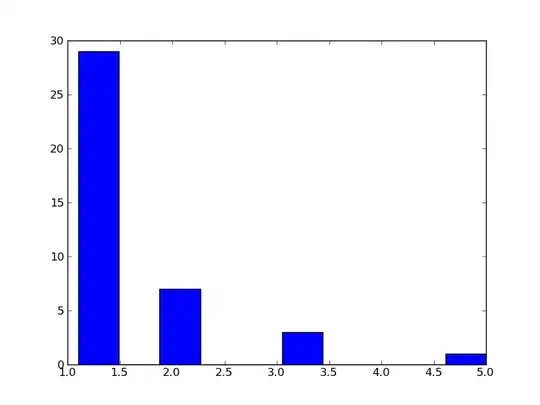
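One direction that might work, as a minimal sketch rather than a verified solution: wrap each element's layers in a reusable sub-model and call the H sub-model once per H atom, so that both calls share a single set of weights. The helper `atomic_subnet`, the input names `H_element_1` and `H_element_2`, and the value `n_cols = 4` are assumptions made up for this illustration and are not part of the code above.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

n_cols = 4  # assumed descriptor length, chosen only for illustration


def atomic_subnet(name):
    """Hypothetical helper: maps one atom's descriptor to one atomic energy."""
    inp = keras.Input(shape=(n_cols,))
    hidden = layers.Dense(8, activation="relu")(inp)
    energy = layers.Dense(1, activation="linear")(hidden)
    return keras.Model(inp, energy, name=name)


h_net = atomic_subnet("H_atomic_energy")  # one set of weights for every H atom
o_net = atomic_subnet("O_atomic_energy")

# H2O: one input per atom (two H, one O)
h1 = keras.Input(shape=(n_cols,), name="H_element_1")
h2 = keras.Input(shape=(n_cols,), name="H_element_2")
o1 = keras.Input(shape=(n_cols,), name="O_element")

# Calling h_net twice reuses the same weights for both H atoms;
# Add sums the three atomic energies into the total energy.
total = layers.Add(name="total_energy")([h_net(h1), h_net(h2), o_net(o1)])

model = keras.Model(inputs=[h1, h2, o1], outputs=total)
```

Because both H atoms go through the same sub-model, swapping the two H inputs should leave the predicted total energy unchanged, which is the behavior I want from the potential. The drawback of this sketch is that the number of atoms per element is fixed when the model is built.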
