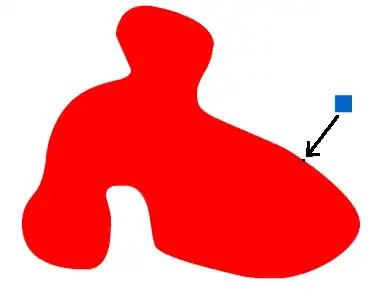

Though pandas has nice syntax for aggregating with dicts and `NamedAgg`s, these can come at a huge efficiency cost when they are combined with lambdas. Built-in groupby methods such as `sum` or `nunique` are optimized and/or implemented in Cython, whereas any `.agg(lambda x: ...)` or `.apply(lambda x: ...)` takes a much slower path, because the lambda has to be called as a Python function for each group.
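To see why the number of groups matters, here is a small sketch that counts how often pandas invokes a Python function passed to `.agg`. The names `calls` and `counting_nunique` are hypothetical, chosen for illustration only.

```python
import numpy as np
import pandas as pd

n_groups = 1_000
df = pd.DataFrame({
    "numbers": np.repeat(np.arange(n_groups), 3),     # 3 rows per group
    "colors": np.tile([0.0, 1.0, np.nan], n_groups),  # 3 distinct values incl. NaN
})

calls = [0]  # mutable counter, incremented on every invocation

def counting_nunique(x):
    # Plain Python function: pandas cannot compile it, so it runs group by group
    calls[0] += 1
    return x.nunique(dropna=False)

result = df.groupby("numbers").agg({"colors": counting_nunique})
print(f"{n_groups} groups -> {calls[0]} Python calls")
```

The call count grows with the number of groups, while the built-in `nunique` handles all groups without a per-group Python call.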

What this means is that you should stick with the built-ins, which you can reference directly (`.sum()`) or by their string alias (`'sum'`). Only use a lambda as a last resort.
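The dict and `NamedAgg` syntax itself is not the problem: when every aggregation is given as a string alias, each one should dispatch to the corresponding built-in GroupBy method. A minimal sketch on a made-up frame (the output names `n_colors` and `total_weight` are arbitrary):

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "numbers": [1, 1, 1, 2, 2, 3],
    "colors": [np.nan, "red", "red", "blue", np.nan, np.nan],
    "weight": [0.5, 1.0, 1.5, 2.0, 2.5, 3.0],
})

# Dict with string aliases: no Python callable per group
by_dict = df.groupby("numbers").agg({"colors": "nunique", "weight": "sum"})

# NamedAgg with string aliases: same built-ins, custom output column names
by_named = df.groupby("numbers").agg(
    n_colors=pd.NamedAgg(column="colors", aggfunc="nunique"),
    total_weight=pd.NamedAgg(column="weight", aggfunc="sum"),
)
print(by_named)
```

Note that the aliases use each method's default arguments, so `nunique` here drops NaN. Counting NaN as its own value needs `dropna=False`, which is what pushes people toward a lambda in the first place.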

In this particular case, use

```python
df.groupby('numbers')[['colors']].agg('nunique', dropna=False)
```

Avoid

```python
df.groupby('numbers').agg({'colors': lambda x: x.nunique(dropna=False)})
```

This example shows that, although the two are equivalent in output and the change seems minor, the consequences for performance are enormous, especially as the number of groups grows.

```python
import numpy as np
import pandas as pd
import perfplot  # third-party: pip install perfplot


def built_in(df):
    # Built-in nunique via its string alias; dropna=False is forwarded to it
    return df.groupby('numbers')[['colors']].agg('nunique', dropna=False)


def apply(df):
    # Same output, but the lambda is called in Python once per group
    return df.groupby('numbers').agg({'colors': lambda x: x.nunique(dropna=False)})


perfplot.show(
    # About n/10 groups; colors drawn from NaN plus 100 integer values
    setup=lambda n: pd.DataFrame({'numbers': np.random.randint(0, n // 10 + 1, n),
                                  'colors': np.random.choice([np.nan, *range(100)], n)}),
    kernels=[built_in, apply],
    labels=['Built-In', 'Apply'],
    n_range=[2 ** k for k in range(1, 20)],
    equality_check=np.allclose,
    xlabel='~N Groups',
)
```

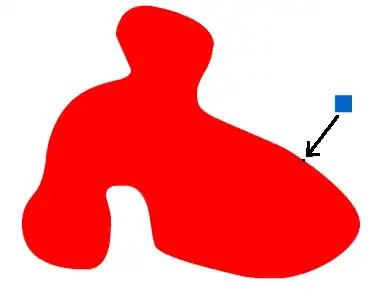
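Timings aside, a quick check on a tiny hand-made DataFrame confirms that the two versions really are equivalent, including how NaN is counted:

```python
import numpy as np
import pandas as pd

# Tiny frame: NaN appears in every group, so dropna=False matters
df = pd.DataFrame({
    "numbers": [1, 1, 1, 2, 2, 3],
    "colors": [np.nan, 5.0, 5.0, 7.0, np.nan, np.nan],
})

built_in = df.groupby("numbers")[["colors"]].agg("nunique", dropna=False)
via_lambda = df.groupby("numbers").agg({"colors": lambda x: x.nunique(dropna=False)})

print(built_in["colors"].tolist())    # [2, 2, 1]: NaN counted as a value
print(via_lambda["colors"].tolist())  # [2, 2, 1]
```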

But what if you want to do multiple aggregations on different columns?

The `.groupby()` step itself doesn't do that much work; it mainly works out which rows belong to which group. So, though it is unintuitive, it is still much faster to aggregate each column separately with its built-in and concatenate the results at the end than to call `.agg` once with a simpler-looking dict that contains a lambda.

Here is an example that also sums the `weight` column. Splitting is still a lot faster, despite needing to join the results manually:

```python
import numpy as np
import pandas as pd
import perfplot


def built_in(df):
    # One built-in aggregation per column, joined side by side on the group index
    return pd.concat([df.groupby('numbers')[['colors']].agg('nunique', dropna=False),
                      df.groupby('numbers')[['weight']].sum()], axis=1)


def apply(df):
    # A single dict-based agg, but the lambda forces the slow per-group path
    return df.groupby('numbers').agg({'colors': lambda x: x.nunique(dropna=False),
                                      'weight': 'sum'})


perfplot.show(
    setup=lambda n: pd.DataFrame({'numbers': np.random.randint(0, n // 10 + 1, n),
                                  'colors': np.random.choice([np.nan, *range(100)], n),
                                  'weight': np.random.normal(0, 1, n)}),
    kernels=[built_in, apply],
    labels=['Built-In', 'Apply'],
    n_range=[2 ** k for k in range(1, 20)],
    equality_check=np.allclose,
    xlabel='~N Groups',
)
```
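With many columns, writing one `concat` entry per column gets repetitive. Below is a minimal sketch of a helper that does the splitting for you; `agg_builtin` and its `spec` format are hypothetical names for illustration, not part of pandas. It builds the GroupBy once, calls each built-in by name with `getattr` (so every column still goes through a built-in method), and joins the pieces with `pd.concat`.

```python
import numpy as np
import pandas as pd


def agg_builtin(df, by, spec):
    """Hypothetical helper. spec maps column -> (built-in method name, kwargs).

    Each column is aggregated with its own built-in GroupBy method and the
    results are concatenated side by side on the group index.
    """
    g = df.groupby(by)  # grouping computed once, reused for every column
    parts = [
        getattr(g[[col]], method)(**kwargs)  # e.g. g[["colors"]].nunique(dropna=False)
        for col, (method, kwargs) in spec.items()
    ]
    return pd.concat(parts, axis=1)


df = pd.DataFrame({
    "numbers": [1, 1, 1, 2, 2, 3],
    "colors": [np.nan, 5.0, 5.0, 7.0, np.nan, np.nan],
    "weight": [0.5, 1.0, 1.5, 2.0, 2.5, 3.0],
})

out = agg_builtin(df, "numbers", {
    "colors": ("nunique", {"dropna": False}),
    "weight": ("sum", {}),
})
print(out)
```

Because `spec` is a dict keyed by column, each column can appear only once; applying two different aggregations to the same column would need a different spec format.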