I'm trying to implement the multiclass hybrid loss function from the article https://arxiv.org/pdf/1808.05238.pdf in Python (TensorFlow) for a semantic segmentation problem with an imbalanced dataset. My implementation runs well enough to train the model, but the results are very poor. The model is a U-Net, trained with the Adam optimizer at a learning rate of 1e-5. The masks have shape (None, 512, 512, 3), with 3 classes (in my case forest, deforestation, and other). The formula I used to implement my loss:
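
Written out, the loss I am aiming for has the following form. This is my own transcription in the notation of my code, so the symbols and the assignment of $\alpha$ and $\beta$ may not match the paper exactly. Here $p_n(c)$ is the predicted probability of class $c$ at pixel $n$, $g_n(c)$ is the one-hot ground truth, $N$ is the number of pixels, and $C$ is the number of classes:

```latex
\begin{aligned}
TP(c) &= \sum_{n=1}^{N} p_n(c)\, g_n(c), \\
FP(c) &= \sum_{n=1}^{N} p_n(c)\,\bigl(1 - g_n(c)\bigr), \\
FN(c) &= \sum_{n=1}^{N} \bigl(1 - p_n(c)\bigr)\, g_n(c), \\
L_{\text{Tversky}} &= C - \sum_{c=0}^{C-1} \frac{TP(c)}{TP(c) + \alpha\, FP(c) + \beta\, FN(c)}, \\
L_{\text{Focal}} &= -\frac{1}{N} \sum_{c=0}^{C-1} \sum_{n=1}^{N} g_n(c)\,\bigl(1 - p_n(c)\bigr)^{2} \log p_n(c), \\
L &= L_{\text{Tversky}} + \lambda\, L_{\text{Focal}}.
\end{aligned}
```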

The code I created:

    import tensorflow as tf

    def build_hybrid_loss(_lambda_=1, _alpha_=0.5, _beta_=0.5, smooth=1e-6):
        def hybrid_loss(y_true, y_pred):
            C = 3
            tversky = 0
            # Calculate Tversky loss
            for index in range(C):
                inputs_fl = tf.nest.flatten(y_pred[..., index])
                targets_fl = tf.nest.flatten(y_true[..., index])
                # True positives, false positives and false negatives
                TP = tf.reduce_sum(tf.math.multiply(inputs_fl, targets_fl))
                FP = tf.reduce_sum(tf.math.multiply(inputs_fl, 1-targets_fl[0]))
                FN = tf.reduce_sum(tf.math.multiply(1-inputs_fl[0], targets_fl))
                tversky_i = (TP + smooth) / (TP + _alpha_ * FP + _beta_ * FN + smooth)
                tversky += tversky_i
            tversky += C
            # Calculate focal loss
            loss_focal = 0
            for index in range(C):
                f_loss = - (y_true[..., index] * (1 - y_pred[..., index])**2 * tf.math.log(y_pred[..., index]))
                # Average over each data point/image in batch
                axis_to_reduce = range(1, 3)
                f_loss = tf.math.reduce_mean(f_loss, axis=axis_to_reduce)
                loss_focal += f_loss
            result = tversky + _lambda_ * loss_focal
            return result
        return hybrid_loss

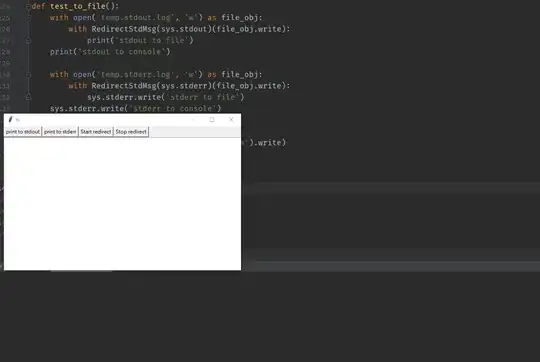

The model's prediction at the end of an epoch (the colors are swapped in my plot: red in the prediction is actually green, which means forest, so the prediction is mostly forest and not deforestation):

What is wrong with my hybrid loss implementation, and what needs to change to make it work?
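
For comparison with the code above, here is a minimal sketch of how the same loss could be written with plain tensor reductions. It is not a verified fix and it rests on several assumptions: `y_true` is one-hot with shape `(batch, height, width, C)`, `y_pred` holds softmax probabilities of the same shape, the sums run per image rather than over the whole batch, and the `eps` clipping parameter is my own addition. It differs from the code above in three places. The Tversky term is `C` minus the summed indices rather than `C` plus them, since otherwise minimizing the loss lowers the overlap. The soft counts come from `tf.reduce_sum` over the spatial axes instead of `tf.nest.flatten`, which flattens nested Python structures rather than tensors. Predictions are clipped before `tf.math.log` so the focal term stays finite.

```python
import tensorflow as tf


def build_hybrid_loss(_lambda_=1.0, _alpha_=0.5, _beta_=0.5, smooth=1e-6, eps=1e-7):
    """Sketch of a hybrid loss: (C - sum of Tversky indices) + lambda * focal term."""

    def hybrid_loss(y_true, y_pred):
        y_true = tf.cast(y_true, y_pred.dtype)
        num_classes = tf.cast(tf.shape(y_pred)[-1], y_pred.dtype)
        spatial_axes = [1, 2]  # height and width

        # Soft counts per image and per class, shape (batch, C).
        tp = tf.reduce_sum(y_true * y_pred, axis=spatial_axes)
        fp = tf.reduce_sum((1.0 - y_true) * y_pred, axis=spatial_axes)
        fn = tf.reduce_sum(y_true * (1.0 - y_pred), axis=spatial_axes)

        # Same convention as the original code: alpha weights FP, beta weights FN.
        tversky_index = (tp + smooth) / (tp + _alpha_ * fp + _beta_ * fn + smooth)

        # C minus the summed indices, so perfect overlap gives a loss of 0.
        tversky_loss = num_classes - tf.reduce_sum(tversky_index, axis=-1)

        # Focal term with exponent 2; clip only here so log() never sees 0.
        p = tf.clip_by_value(y_pred, eps, 1.0 - eps)
        focal = -y_true * tf.square(1.0 - p) * tf.math.log(p)
        # Sum over classes, then average over the pixels of each image.
        focal_loss = tf.reduce_mean(tf.reduce_sum(focal, axis=-1), axis=spatial_axes)

        # One loss value per image, shape (batch,); Keras averages over the batch.
        return tversky_loss + _lambda_ * focal_loss

    return hybrid_loss
```

Assuming a compiled Keras model, it would be used as `model.compile(optimizer="adam", loss=build_hybrid_loss())`. A quick sanity check is that a prediction equal to the one-hot mask gives a loss close to 0, while a uniform prediction of 1/3 per class gives a clearly larger value.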