I am very new to the shap Python package, and I am wondering how I should interpret the Shapley values for a binary classification problem. Here is what I have done so far. First, I fit a LightGBM model to my data, something like:

    import shap
    import lightgbm as lgb

    params = {'objective': 'binary',
              ...}

    gbm = lgb.train(params, lgb_train, num_boost_round=300)
    e = shap.TreeExplainer(gbm)
    shap_values = e.shap_values(X)
    shap.summary_plot(shap_values[0][:, interested_feature], X[interested_feature])

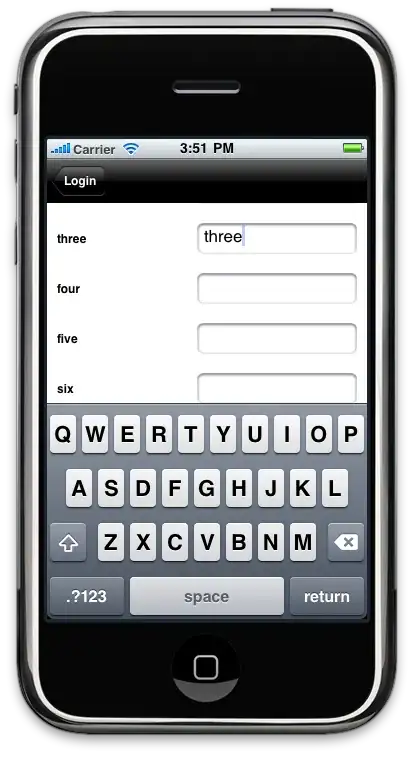

Since this is a binary classification problem, shap_values contains two parts. I assume one is for class 0 and the other is for class 1. If I want to see one feature's contribution, I have to plot two figures like the following.

For class 0

But how can I get a better visualization? These results do not help me answer the question: does cold_days increase the probability that the output is class 1, or class 0?
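To make concrete what I am trying to read off the plot, here is a minimal sketch of the check I have in mind. It assumes I already have the class 1 SHAP values for cold_days; the numbers below are made up for illustration and are not from my data, and `pearson` is just a hypothetical helper I wrote, not part of shap.

```python
def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Hypothetical feature values and their class 1 SHAP values (illustration only).
cold_days = [0, 2, 5, 9, 14, 20]
shap_cold_days_class1 = [-0.6, -0.4, -0.1, 0.2, 0.5, 0.8]

# A positive correlation would mean: more cold days pushes the model toward class 1.
r = pearson(cold_days, shap_cold_days_class1)
direction = "class 1" if r > 0 else "class 0"
print(f"correlation = {r:.3f}; higher cold_days pushes toward {direction}")
```

This only captures a roughly monotonic relationship, so it is a sanity check rather than a replacement for the plot.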

With the same dataset, if I use an ANN, the output looks something like this. I think that Shapley result clearly tells me that cold_days increases the probability that the outcome is class 1.

I feel there is something wrong with the LightGBM output, but I am not sure how to fix it. How can I get a clearer visualization, similar to the one for the ANN model?
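Part of my confusion may be about the scale and sign of the two parts of shap_values. My working assumption, which I have not verified for my shap version, is that the tree explainer's values are in log-odds rather than probability, and that the class 0 part is simply the negation of the class 1 part. The sketch below shows what that would imply for a single sample; all names and numbers are made up.

```python
import math

def sigmoid(z):
    """Map a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

# Made-up numbers for one sample (not from my data).
base_value = -0.4  # hypothetical expected log-odds of class 1
shap_class1 = {"cold_days": 0.9, "other_feature": -0.2}  # hypothetical

# Assumption: class 0 contributions are the negation of the class 1 ones.
shap_class0 = {name: -value for name, value in shap_class1.items()}

# Base value plus all contributions gives the model's log-odds for each class.
margin_class1 = base_value + sum(shap_class1.values())
margin_class0 = -base_value + sum(shap_class0.values())

p_class1 = sigmoid(margin_class1)
p_class0 = sigmoid(margin_class0)
print(f"P(class 1) = {p_class1:.3f}, P(class 0) = {p_class0:.3f}")
```

If this assumption holds, the class 0 plot would just be a mirror image of the class 1 plot, and only the class 1 part would be needed to see the direction of cold_days.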

# Edit

I suspect I somehow misused LightGBM and got this strange result. Here is the original code:

    import lightgbm as lgb
    import shap

    lgb_train = lgb.Dataset(x_train, y_train, free_raw_data=False)
    lgb_eval = lgb.Dataset(x_val, y_val, free_raw_data=False)

    params = {
        'boosting_type': 'gbdt',
        'objective': 'binary',
        'metric': 'binary_logloss',
        'num_leaves': 70,
        'learning_rate': 0.005,
        'feature_fraction': 0.7,
        'bagging_fraction': 0.7,
        'bagging_freq': 10,
        'verbose': 0,
        'min_data_in_leaf': 30,
        'max_bin': 128,
        'max_depth': 12,
        'early_stopping_round': 20,
        'min_split_gain': 0.096,
        'min_child_weight': 6,
    }

    gbm = lgb.train(params,
                    lgb_train,
                    num_boost_round=300,
                    valid_sets=lgb_eval,
                    )

    e = shap.TreeExplainer(gbm)
    shap_values = e.shap_values(X)
    shap.summary_plot(shap_values[0][:, interested_feature], X[interested_feature])