I am pretty new to signal and image processing. I attached a figure from a paper (https://iopscience.iop.org/article/10.1088/1361-6501/ab7f79/meta) that shows what I am supposed to do.

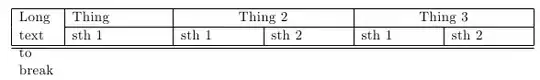

Basically, an interferogram is recorded in B/W (grayscale) by a CMOS sensor. Each horizontal and vertical pixel line is then taken individually and "associated" with a signal representing the intensity of the light reaching the sensor along that line. These signals are then Fourier-transformed to extract their frequency and phase (the phase then has to be unwrapped). I understand the final step, the DFT of the signal, but I am stuck on how to take a pixel line and obtain the signal associated with it. Ideally, the workflow in Matlab would be:

- extract each pixel line

- assign a "colormap" to the line (white = 1, black = 0, all the other shades in between?)

- build my signal by interpolating the pixel values

- DFT the signal to extract frequency and phase
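The steps above can be sketched roughly as follows. Pixels are already equally spaced samples, so no interpolation should be needed before the DFT. A horizontal line is one row of the image matrix and a vertical line is one column (in Matlab, `I(r,:)` and `I(:,c)` for an image matrix `I`). The gray levels can be scaled to [0, 1] and transformed directly.

This is a minimal standard-library Python sketch built on these assumptions:

- the image is stored as a list of rows of 8-bit gray levels (0 = black, 255 = white);
- the fringe frequency is taken to be the strongest non-DC DFT bin.

The function names and the synthetic test image are hypothetical. A direct O(N²) DFT is used for clarity. In Matlab, `fft`, `abs` and `angle` applied to `double(I(r,:))/255` would do the same job.

```python
import cmath
import math

def horizontal_line(image, r):
    """Row r of a grayscale image stored as a list of rows (hypothetical helper)."""
    return list(image[r])

def vertical_line(image, c):
    """Column c of a grayscale image stored as a list of rows (hypothetical helper)."""
    return [row[c] for row in image]

def to_intensity(line, max_value=255):
    """Scale gray levels to [0, 1]: black (0) -> 0.0, white (max_value) -> 1.0.
    Assumes the gray level is proportional to the light intensity."""
    return [v / max_value for v in line]

def dft(x):
    """Direct O(N^2) DFT: X[k] = sum_n x[n] * exp(-2j*pi*k*n/N)."""
    n_samples = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * math.pi * k * n / n_samples) for n in range(n_samples))
        for k in range(n_samples)
    ]

def fringe_frequency_and_phase(line, max_value=255):
    """Return (frequency in cycles per pixel, phase in radians) of the strongest
    non-DC component of one pixel line."""
    signal = to_intensity(line, max_value)
    mean = sum(signal) / len(signal)
    signal = [v - mean for v in signal]          # remove the DC term (average brightness)
    spectrum = dft(signal)
    # For a real signal, bins above N/2 mirror those below it, so search 1..N/2 only.
    k_peak = max(range(1, len(signal) // 2 + 1), key=lambda k: abs(spectrum[k]))
    return k_peak / len(signal), cmath.phase(spectrum[k_peak])

# Synthetic 8x64 test image (made up, not the paper's): vertical fringes with
# 5 fringes per row and a phase offset of 0.7 rad, so every row is the same line.
N = 64
fringe = [round(127.5 + 127.5 * math.cos(2 * math.pi * 5 * n / N + 0.7)) for n in range(N)]
image = [list(fringe) for _ in range(8)]

f, phi = fringe_frequency_and_phase(horizontal_line(image, 3))
print(f, phi)   # f is 5/64 = 0.078125, phi is close to 0.7
```

Picking a whole DFT bin resolves the frequency only to 1/N cycles per pixel. When the fringes fit into the line a whole number of times, as in the test image, the returned phase is the fringe phase at the first pixel. Otherwise both estimates are approximate, and a finer estimate needs extra steps such as zero-padding or interpolating between neighbouring bins.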

Is there a compact way to do so?

So far, I have managed to do the following:

I imported the image of the interferogram (248x320 pixels, just a snapshot from the paper) and, for the 124th horizontal line, obtained the signal and its frequency and phase.
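The phase obtained for each line lies in (-π, π]. When the phases of neighbouring lines are put side by side, they therefore usually show artificial 2π jumps that have to be removed. I am assuming this is what the unwrapping step refers to.

Below is a minimal 1-D sketch of the usual rule, the same idea as Matlab's `unwrap`. Each jump between consecutive samples is replaced by its equivalent in [-π, π), and the results are accumulated. The function name and the test ramp are hypothetical.

```python
import math

def unwrap(phases):
    """1-D phase unwrapping: wrap each consecutive difference into [-pi, pi)
    and accumulate, so 2*pi jumps caused by wrapping disappear."""
    if not phases:
        return []
    out = [phases[0]]
    for prev, cur in zip(phases, phases[1:]):
        step = (cur - prev + math.pi) % (2 * math.pi) - math.pi  # wrapped difference
        out.append(out[-1] + step)
    return out

# Made-up test: a phase that grows by 0.9 rad per sample, wrapped into (-pi, pi].
true_phase = [0.9 * n for n in range(12)]
wrapped = [math.atan2(math.sin(p), math.cos(p)) for p in true_phase]
recovered = unwrap(wrapped)
print(max(abs(a - b) for a, b in zip(recovered, true_phase)))  # only floating-point error
```

This only works when the true phase changes by less than π between neighbouring samples. Noisy 2-D phase maps usually need a more robust 2-D unwrapping method.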
