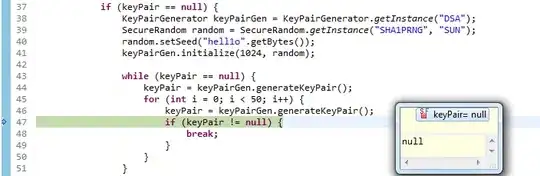

I have been trying to replicate https://diffeqflux.sciml.ai/dev/examples/BayesianNODE_NUTS/, using different ODE equation, but I have received this result without uncertainty quantification, is it because I did the initial value u0 is higher :

Could you please tell me what was wrong?

using DiffEqFlux, OrdinaryDiffEq, Flux, Optim, Plots, AdvancedHMC, MCMCChains

using JLD, StatsPlots

function Arps!(du,u,p,t)

y= u[1]

#x, y = u

# Di,b,n,tau = p

n,tau = p

#du[1]=dx=-(x * Di * x^b)

du[1]=dy=-(n *((t^n)/tau) * y/t)

end

tspan=(1.0,50.0)

tsteps = 1:1:50

u0 = [16382.9]

p=[0.48,15.92]

prob_trueode = ODEProblem(Arps!,u0,tspan,p)

ode_data = Array(solve(prob_trueode, Tsit5(), saveat = tsteps))

ode_data =ode_data[1,:]

dudt= FastChain(FastDense(1, 30, tanh),

FastDense(30, 1))

prob_neuralode = NeuralODE(dudt, tspan, Tsit5(), saveat = tsteps)

function predict_neuralode(p)

Array(prob_neuralode(u0, p))

end

function loss_neuralode(p)

pred = predict_neuralode(p)

loss = sum(abs2, ode_data .- pred)

return loss, pred

end

l(θ) = -sum(abs2, ode_data .- predict_neuralode(θ)) - sum(θ .* θ)

function dldθ(θ)

x,lambda = Flux.Zygote.pullback(l,θ)

grad = first(lambda(1))

return x, grad

end

metric = DiagEuclideanMetric(length(prob_neuralode.p))

h = Hamiltonian(metric, l, dldθ)

integrator = Leapfrog(find_good_stepsize(h, Float64.(prob_neuralode.p)))

prop = AdvancedHMC.NUTS{MultinomialTS, GeneralisedNoUTurn}(integrator)

adaptor = StanHMCAdaptor(MassMatrixAdaptor(metric), StepSizeAdaptor(0.45, prop.integrator))

samples, stats = sample(h, prop, Float64.(prob_neuralode.p), 500, adaptor, 500; progress=true)

losses = map(x-> x[1],[loss_neuralode(samples[i]) for i in 1:length(samples)])

################### RETRODICTED PLOTS: TIME SERIES #################

pl = scatter(tsteps, ode_data, color = :red, label = "Data: Var1", xlabel = "t", title = "Spiral Neural ODE")

for k in 1:300

resol = predict_neuralode(samples[100:end][rand(1:400)])

plot!(tsteps,resol[1,:], alpha=0.04, color = :red, label = "")

end

idx = findmin(losses)[2]

prediction = predict_neuralode(samples[idx])

plot!(tsteps,prediction[1,:], color = :black, w = 2, label = "")