I replicated Example 2 from Igor Ostrovsky's blog post "Gallery of Processor Cache Effects" using a minimal reproducible example (MRE) to measure the runtime for each K. K in the blog corresponds to the variable STEP in the following MRE:

    import java.io.FileWriter;
    import java.io.IOException;
    import java.util.Map;
    import java.util.TreeMap;

    public class DemonstrateCachingAndDataPrefetching {

        public static void main(String[] args) throws IOException {

            //Fetching environment from command line arguments.
            String environment = args[0];

            //Integer array of predefined size (chosen randomly) and acts as the prime data for the experiment.
            int[] arr = new int[64 * 1024 * 1024];

            //Domain for the values of STEP
            int[] STEP_DOMAIN = new int[]{1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048, 4096, 8096};

            //Map to store the cumulative runtime for each value of STEP.
            TreeMap<Integer, Long> map = new TreeMap<Integer, Long>();

            //Number of times the runtime is computed for each value of STEP and then is averaged out.
            int RUNS = 1000;

            for (int STEP : STEP_DOMAIN){

                //Initializing the cumulative time for each value of STEP to 0.
                map.put(STEP, (long)0);

                for(int run = 0; run < RUNS; run++){

                    long startTime = System.nanoTime();

                    //Generalized Task
                    for (int i = 0; i < arr.length; i += STEP){
                        arr[i] *= 3;
                    }

                    long estimatedTime = System.nanoTime() - startTime;
                    long cumulativeTimeForStep = map.get(STEP);
                    cumulativeTimeForStep += estimatedTime;
                    map.put(STEP, cumulativeTimeForStep);
                }
            }

            FileWriter myWriter = new FileWriter(String.format("results-%1$s.csv", environment));
            myWriter.write("STEP,RUNTIME\n");

            for(Map.Entry m:map.entrySet()){
                Double averageValue = Double.parseDouble(m.getValue()+"");
                averageValue /= (double)RUNS;
                myWriter.write(m.getKey() + "," + String.format("%.02f", averageValue) + "\n");
            }

            myWriter.close();
        }
    }

I checked the cache line size of the Apple M1 CPU using sysctl. It returned 128 bytes, as shown in the picture below:

QUESTION:

Since the cache line size is 128 bytes and an int in Java is 4 bytes, one cache line holds 32 ints. The runtime should therefore have been nearly the same for STEP = {4, 8, 16, 32}, and also for STEP = 64 if data prefetching is supported.
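To make the arithmetic behind this expectation concrete, here is a rough sketch that counts how many distinct 128-byte cache lines the loop touches for each STEP. The class name `CacheLinesTouched` and the method `linesTouched` are hypothetical names for illustration. It also assumes that the first array element sits on a cache line boundary, which the JVM does not guarantee.

```java
public class CacheLinesTouched {

    // Values taken from the question: 128-byte cache lines (from sysctl) and 4-byte ints.
    static final int CACHE_LINE_BYTES = 128;
    static final int INT_BYTES = Integer.BYTES;

    /**
     * Counts the distinct cache lines touched when visiting every step-th int
     * of an array with arrayLength elements.
     * Assumption: element 0 starts exactly on a cache line boundary.
     */
    static long linesTouched(long arrayLength, int step) {
        long intsPerLine = CACHE_LINE_BYTES / INT_BYTES; // 32 ints per line
        long count = 0;
        long lastLine = -1;
        for (long i = 0; i < arrayLength; i += step) {
            long line = i / intsPerLine; // index of the cache line holding element i
            if (line != lastLine) {
                count++;
                lastLine = line;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        long arrayLength = 64L * 1024 * 1024; // same size as arr in the MRE
        int[] steps = {1, 2, 4, 8, 16, 32, 64, 128};
        for (int step : steps) {
            System.out.println("STEP=" + step + " lines=" + linesTouched(arrayLength, step));
        }
    }
}
```

Under these assumptions every STEP up to 32 touches the same number of cache lines, and STEP = 64 touches half as many. That is why similar runtimes are expected up to STEP = 32 if memory traffic dominates.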

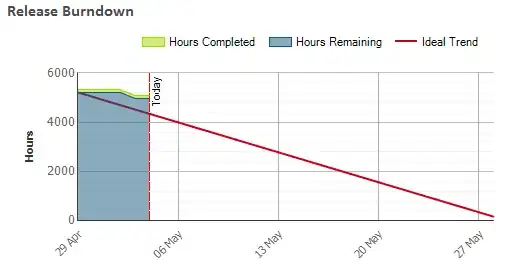

However, the runtime for STEP = 32 was significantly greater than the runtime for STEP = {8, 16, 64}, as shown in the following image:

Had it been an Intel CPU, the runtime for STEP = {8, 16, 32, 64} would have been nearly the same (given a cache line size of 128 bytes). But this is not the case with the Apple M1 CPU.

Any hint about this inconsistency is appreciated.