I have weekly based trade export time-series data that I need to make a stacked bar plot for visualizing trade activity. To do so, I aggregated my data for sum-up of each columns for all rows, then use nlargest() to select top n columns. However, doing this way might not be quite accurate because I made stacked plot for different years in the loop and top n columns for each year can be different. But what I did, take the total sum of each column for all rows (a.k.a, including all years) then select top n columns, which is biased. So, I am looking at the different way of doing this, perhaps, I might group the time series data by each year then make the stacked plot. Is there other way around selecting top n columns from time-series data instead of using nlargest? Does anyone know any possible way of doing this? What other way we could select top n columns from time-series data? Any idea?

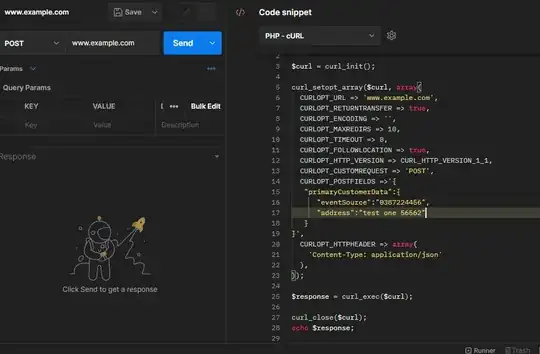

my current attempt:

this is my current attempt to manipulate time series data, where I aggregate each columns for all rows then select top n columns using nlargest():

import pandas as pd

# load the data

url = 'https://gist.githubusercontent.com/adamFlyn/a6048e547b5a963c7af356c964d15af6/raw/c57c7915cf14f81edc9d5eadaf14efbd43d3e58a/trade_df.csv'

df_ = pd.read_csv(url, parse_dates=['weekly'])

df_.set_index('weekly', inplace=True)

df_.loc['Total',:]= df_.sum(axis=0)

df1 = df_.T

df1 =df1.nlargest(6, columns=['Total'])

df1.drop('Total', axis=1, inplace=True)

df2 = df1.T

df2.reset_index(inplace=True)

df2['weekly'] = pd.to_datetime(df2['weekly'])

df2['year'] = df2['weekly'].dt.year

df2['week'] = df2['weekly'].dt.strftime('%W').astype('int')

then I visualize the plotting data with matplotlib as follow:

import matplotlib.pyplot as plt

plt_df = df2.set_index(['year','week'])

plt_df.drop("weekly", axis=1, inplace=True)

for n, g in plt_df.groupby(level=0):

ax = g.loc[n].plot.bar(stacked=True, title=f'{n} Year', figsize=(8,5))

plt.show()

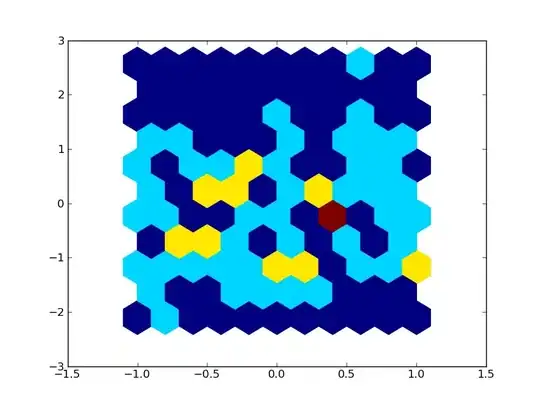

although the output of current approach in stacked plot is fine, but selecting top n columns using nlargest() is not quite accurate.for example, in 2019 USDA report, China wasn't top trade partner of US, but in late 2020, China was getting more products from US, and if I use nlargest() to select top column (or trade partners), it is going to be problematic and China won't be in list and not in the plot.

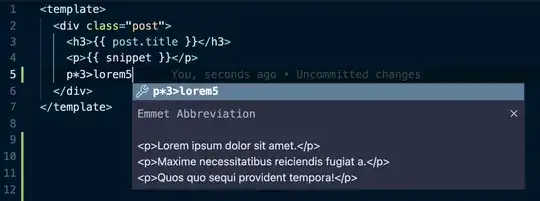

update

As @Vaishali suggested in the comment with this post, using head() might be good idea to extract top columns, so I tried like this:

for n, g in plt_df.groupby(level=0):

for i in g:

gg = g[i].sort_values(g[i].values,ascending = False).groupby('week').head(5)

ax = gg.loc[n].plot.bar(stacked=True, title=f'{n} Year', figsize=(8,5))

but this is not working. Can anyone point me out how to select top n columns from time series data? any idea?