The learning rate used to train a neural network should be neither too high nor too low. A large learning rate can overshoot the global minimum and, in extreme cases, cause the model to diverge completely from the optimal solution. A small learning rate, on the other hand, makes training slow and can leave the model stuck in a local minimum.
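To make this concrete, here is a minimal sketch of plain gradient descent on the toy function f(x) = x², whose gradient is 2x. The function `gradient_descent` and the learning rates chosen are illustrative assumptions, not something from a particular library. Because the function is convex, the example only shows divergence and slow progress; it has no local minima to get stuck in.

```python
def gradient_descent(lr, steps=20, x0=1.0):
    """Minimize f(x) = x**2 (gradient 2*x) from x0 with a fixed learning rate."""
    x = x0
    for _ in range(steps):
        x = x - lr * 2 * x  # standard update: x <- x - lr * gradient
    return x


# Each update multiplies x by (1 - 2 * lr), so the behavior depends on lr alone.
too_large = gradient_descent(lr=1.1)    # factor -1.2: |x| grows every step (diverges)
too_small = gradient_descent(lr=0.001)  # factor 0.998: x barely moves in 20 steps
moderate = gradient_descent(lr=0.1)     # factor 0.8: x shrinks steadily toward 0

print(f"lr=1.1   -> x = {too_large:.3f}")
print(f"lr=0.001 -> x = {too_small:.3f}")
print(f"lr=0.1   -> x = {moderate:.3f}")
```

With the large rate the iterate moves away from the minimum at x = 0, with the tiny rate it is still close to its starting point after 20 steps, and with the moderate rate it ends up near the minimum.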

The purpose of ReduceLROnPlateau is to track your model's performance and reduce the learning rate when there is no improvement for a set number of epochs (the patience). The intuition is that, with the current learning rate, the model has reached a sub-optimal solution and is oscillating around the minimum without settling into it. Reducing the learning rate lets the model take smaller steps toward the optimal solution of the cost function.
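The logic described above can be sketched in a few lines of plain Python. This is a simplified illustration, not the actual Keras or PyTorch implementation: the class name `PlateauLRReducer` is hypothetical, the parameter names `factor`, `patience`, and `min_lr` are borrowed from the library versions, and details such as improvement thresholds and cooldown periods are left out.

```python
class PlateauLRReducer:
    """Simplified plateau-based learning rate reduction (illustrative only)."""

    def __init__(self, lr, factor=0.1, patience=2, min_lr=0.0):
        self.lr = lr
        self.factor = factor      # new_lr = lr * factor
        self.patience = patience  # epochs without improvement before reducing
        self.min_lr = min_lr      # lower bound on the learning rate
        self.best = float("inf")  # best (lowest) monitored loss so far
        self.bad_epochs = 0       # consecutive epochs without improvement

    def step(self, loss):
        """Record one epoch's monitored loss and return the (possibly reduced) lr."""
        if loss < self.best:
            self.best = loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1

        if self.bad_epochs >= self.patience:
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.bad_epochs = 0   # start counting again at the new rate
        return self.lr


# Made-up validation losses: improvement stalls twice.
scheduler = PlateauLRReducer(lr=0.1, factor=0.5, patience=2)
val_losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.69, 0.70, 0.70]
history = [scheduler.step(loss) for loss in val_losses]
print(history)
```

In this run the learning rate is halved after the fifth epoch (two epochs in a row without beating 0.7) and halved again after the eighth. In practice you would use the ReduceLROnPlateau provided by your framework rather than a hand-rolled class like this one.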

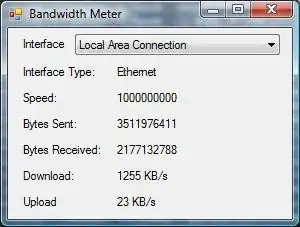

Image source

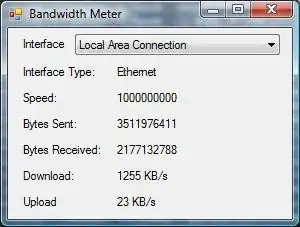

Image source