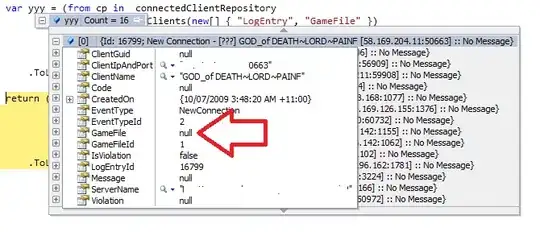

I want to train an object detection model to detect only the chickens that are on that water reservoir (like the one in the picture), so I'm currently annotating chickens in my images. Since I don't want to detect any chickens on the field, I'm not annotating them. But I don't want to confuse my model by feeding it unannotated chickens during training. Is there a problem with just adding a cover (a black one) like the one in the second image to all training and testing images?

Edit: I don't want to annotate the chickens on the ground because each annotation costs me money. This is why I'm thinking of adding this cover.
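For reference, the cover described above could be applied roughly like this with NumPy. This is only a sketch that assumes a fixed camera, so the reservoir sits in the same axis-aligned rectangle in every frame. `rect_keep_mask`, `apply_cover`, and the coordinates are hypothetical, and a non-rectangular cover would need a polygon mask instead. It also assumes the same mask is applied to every training, test, and inference image.

```python
import numpy as np


def rect_keep_mask(height, width, x0, y0, x1, y1):
    """Hypothetical helper: boolean mask that is True inside the reservoir
    rectangle [x0, x1) x [y0, y1) and False everywhere else."""
    mask = np.zeros((height, width), dtype=bool)
    mask[y0:y1, x0:x1] = True
    return mask


def apply_cover(image, keep_mask):
    """Return a copy of `image` with every pixel outside `keep_mask` set to black (0).

    `image` is an H x W or H x W x C uint8 array; the mask must be H x W.
    The original array is left untouched.
    """
    if keep_mask.shape != image.shape[:2]:
        raise ValueError("mask must match the image height and width")
    covered = image.copy()
    covered[~keep_mask] = 0  # black out the field; keep only the reservoir region
    return covered


# Small example: a uniform grey 4 x 6 RGB "frame" with a 3 x 2 reservoir region.
frame = np.full((4, 6, 3), 200, dtype=np.uint8)
mask = rect_keep_mask(4, 6, x0=2, y0=1, x1=5, y1=3)
covered = apply_cover(frame, mask)
```

Because the mask is computed once and reused, every image gets exactly the same cover, which keeps the training and testing distributions consistent.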