![enter image description here][2]I have a data set, which contains the lists of the 8 words(e.g. "klein", "warm"), occurring in a random pattern. I need to create a variable which shows me how many trials were in between the particular occurrence of the word and its last occurrence. for example, if the word showed up twice in a row, this new variable should be 0, if there was another word in between, the variable would be 1 and so on. can anyone help me and give me a hint?

thank you in advance!

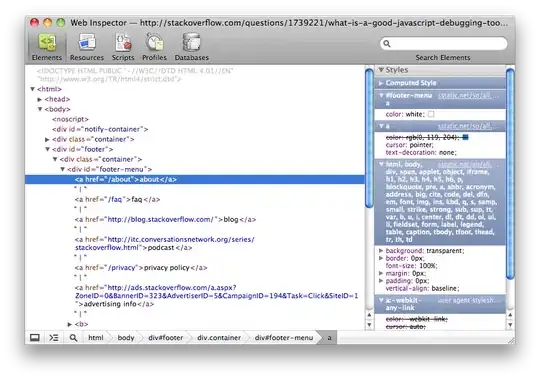

P.s. you can see the picture is how it is done in SPSS

![enter image description here][2]I have a data set, which contains the lists of the 8 words(e.g. "klein", "warm"), occurring in a random pattern. I need to create a variable which shows me how many trials were in between the particular occurrence of the word and its last occurrence. for example, if the word showed up twice in a row, this new variable should be 0, if there was another word in between, the variable would be 1 and so on. can anyone help me and give me a hint?

thank you in advance!

P.s. you can see the picture is how it is done in SPSS

P.s. dput()

structure(list(ExperimentName = c("Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment",

"Habit_Experiment", "Habit_Experiment", "Habit_Experiment", "Habit_Experiment"

), Subject = c(1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L,

1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L,

1L, 1L), AccP = c(90.38461, 90.38461, 90.38461, 90.38461, 90.38461,

90.38461, 90.38461, 90.38461, 90.38461, 90.38461, 90.38461, 90.38461,

90.38461, 90.38461, 90.38461, 90.38461, 90.38461, 90.38461, 90.38461,

90.38461, 90.38461, 90.38461, 90.38461, 90.38461, 90.38461, 90.38461,

90.38461, 90.38461, 90.38461, 90.38461), Age = c(28L, 28L, 28L,

28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L,

28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L, 28L,

28L), Handedness = structure(c(2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L,

2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L, 2L,

2L, 2L, 2L, 2L, 2L, 2L), .Label = c("links", "rechts"), class = "factor"),

PracFail.RT = c(0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L,

0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L, 0L,

0L, 0L, 0L, 0L), Sex = structure(c(3L, 3L, 3L, 3L, 3L, 3L,

3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L,

3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L), .Label = c("divers",

"männlich", "weiblich"), class = "factor"), Block = 1:30,

Colour = c("YELLOW", "RED", "YELLOW", "YELLOW", "YELLOW",

"RED", "YELLOW", "YELLOW", "YELLOW", "YELLOW", "RED", "RED",

"RED", "RED", "YELLOW", "RED", "RED", "YELLOW", "RED", "RED",

"YELLOW", "RED", "YELLOW", "RED", "RED", "YELLOW", "DODGERBLUE",

"LIME", "DODGERBLUE", "LIME"), contingency.RESP = c("", "",

"", "", "", "", "", "", "", "", "", "", "", "", "", "", "",

"", "", "", "", "", "", "", "", "", "", "", "", ""), contWord = c("",

"", "", "", "", "", "", "", "", "", "", "", "", "", "", "",

"", "", "", "", "", "", "", "", "", "", "", "", "", ""),

Correct = c("l", "d", "l", "l", "l", "d", "l", "l", "l",

"l", "d", "d", "d", "d", "l", "d", "d", "l", "d", "d", "l",

"d", "l", "d", "d", "l", "l", "d", "l", "d"), Data = 1:30,

Data.Sample = 1:30, Rare_C = c("", "", "True", "False", "False",

"False", "True", "False", "False", "False", "False", "False",

"False", "True", "True", "True", "True", "False", "True",

"False", "False", "False", "False", "False", "False", "True",

"", "", "True", "False"), Stim.ACC = c(0L, 1L, 0L, 1L, 1L,

1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L,

0L, 1L, 1L, 1L, 1L, 1L, 0L, 1L, 1L, 1L), Stim.CRESP = c("l",

"d", "l", "l", "l", "d", "l", "l", "l", "l", "d", "d", "d",

"d", "l", "d", "d", "l", "d", "d", "l", "d", "l", "d", "d",

"l", "l", "d", "l", "d"), Stim.RESP = c("d", "d", "d", "l",

"l", "d", "l", "l", "l", "l", "d", "d", "d", "d", "l", "d",

"d", "l", "d", "d", "d", "d", "l", "d", "d", "l", "d", "d",

"l", "d"), Stim.RT = c(NA, 808L, NA, 691L, 462L, 884L, 443L,

466L, 444L, 385L, 474L, 441L, 399L, 347L, 398L, 418L, 383L,

451L, 304L, 389L, NA, 467L, 395L, 338L, 333L, 327L, NA, 562L,

460L, 374L), Word = c("XXXX", "XXXX", "warm", "leicht", "leicht",

"warm", "klein", "ganz", "leicht", "leicht", "klein", "klein",

"warm", "ganz", "warm", "leicht", "leicht", "ganz", "ganz",

"klein", "ganz", "warm", "ganz", "warm", "klein", "klein",

"XXXX", "XXXX", "weich", "klar")), row.names = c(NA, 30L), class = "data.frame")