I have a hyperbolic function and I need to find its zero. I have tried various classical methods (bisection, Newton and so on).

The second derivatives are continuous but not available analytically, so I have to exclude methods that use them.

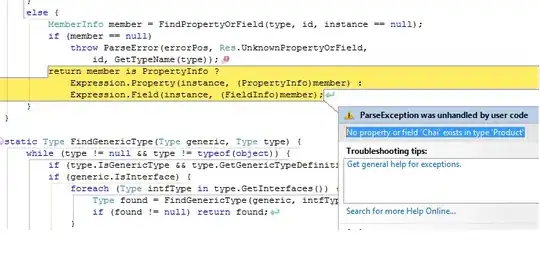

For the purpose of my application Newton method is the only one providing sufficient speed but it's relatively unstable if I'm not close enough to the actual zero. Here is a simple screenshot:

The zero is somewhere around 0.05, and the function diverges at 0. If I take an initial guess that exceeds the location of the minimum by some margin, I obviously run into problems with the asymptote.
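
To make the failure concrete, here is a minimal sketch with a stand-in function, not my real one. The form `f(x) = 1/x + 100*x - 25` is purely an assumption, picked because it diverges at 0, has a zero at 0.05 and a minimum at 0.1; the names `f`, `df` and `newton_path` are made up for the illustration.

```python
def f(x):
    # Hypothetical stand-in: pole at x = 0, zero at x = 0.05, minimum at x = 0.1.
    # (It also has a second zero at x = 0.2, which my real function may not have.)
    return 1.0 / x + 100.0 * x - 25.0


def df(x):
    # Analytic first derivative of the stand-in.
    return -1.0 / x**2 + 100.0


def newton_path(x0, steps=5):
    """Return the list of plain (unsafeguarded) Newton iterates starting at x0."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x - f(x) / df(x))
    return xs


good = newton_path(0.02)   # start between the pole and the zero
bad = newton_path(0.099)   # start close to the minimum, where the slope is nearly flat

print("start 0.02 :", good)
print("start 0.099:", bad)
```

With this stand-in, the iterates from 0.02 approach 0.05, while from 0.099 the nearly flat tangent throws the very first step to a negative x, on the other side of the asymptote.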

Is there a more stable method for this case that would ideally offer speed comparable to Newton's?

I also thought of transforming the function into an equivalent, better-behaved function with the same zero and only then applying Newton, but I don't really know which transformations I can use.

Any help would be appreciated.