I have some Python code, largely taken from the sources linked at the bottom of this post, that takes an image of shape [height, width] and some bounding boxes in [x_min, y_min, x_max, y_max] format, both as numpy arrays, and rotates the image and its bounding boxes counterclockwise. After rotation a bounding box becomes more of a "diamond shape", i.e. it is no longer axis-aligned, so I perform some calculations to make it axis-aligned again. The purpose of this code is data augmentation for training an object detection neural network on rotated data (flipping horizontally or vertically is already common). Rotation by other angles seems common for image classification, where there are no bounding boxes, but when there are boxes, resources on how to rotate the boxes along with the images are relatively sparse.

When I input an angle of 45 degrees, I get bounding boxes that are less than "tight": the four corners are no longer a very good annotation, whereas the original box was close to perfect.

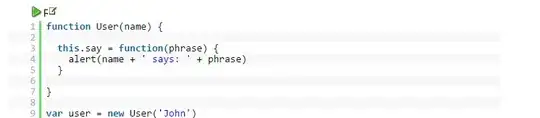

The image shown below is the first training image in the MS COCO 2014 object detection dataset, together with its first bounding box annotation. My code is as follows:

    import math

    import cv2
    import numpy as np


    # angle is assumed to be in degrees
    # bbs is a list of bounding boxes in [x_min, y_min, x_max, y_max] format
    def rotateImageAndBoundingBoxes(im, bbs, angle):
        h, w = im.shape[0], im.shape[1]
        (cX, cY) = (w // 2, h // 2)  # original image center
        M = cv2.getRotationMatrix2D((cX, cY), angle, 1.0)  # 2 by 3 rotation matrix
        cos = np.abs(M[0, 0])
        sin = np.abs(M[0, 1])

        # compute the dimensions of the rotated image
        nW = int((h * sin) + (w * cos))
        nH = int((h * cos) + (w * sin))

        # adjust the rotation matrix to take into account translation of the new centre
        M[0, 2] += (nW / 2) - cX
        M[1, 2] += (nH / 2) - cY

        rotated_im = cv2.warpAffine(im, M, (nW, nH))

        rotated_bbs = []
        for bb in bbs:
            # get the four rotated corners of the bounding box
            vec1 = np.matmul(M, np.array([bb[0], bb[1], 1], dtype=np.float64))  # top left corner transformed
            vec2 = np.matmul(M, np.array([bb[2], bb[1], 1], dtype=np.float64))  # top right corner transformed
            vec3 = np.matmul(M, np.array([bb[0], bb[3], 1], dtype=np.float64))  # bottom left corner transformed
            vec4 = np.matmul(M, np.array([bb[2], bb[3], 1], dtype=np.float64))  # bottom right corner transformed

            # axis-aligned box that encloses the four rotated corners
            x_vals = [vec1[0], vec2[0], vec3[0], vec4[0]]
            y_vals = [vec1[1], vec2[1], vec3[1], vec4[1]]
            x_min = math.ceil(np.min(x_vals))
            x_max = math.floor(np.max(x_vals))
            y_min = math.ceil(np.min(y_vals))
            y_max = math.floor(np.max(y_vals))
            bb = [x_min, y_min, x_max, y_max]
            rotated_bbs.append(bb)

        # my function to resize image and bbs to the original image size
        rotated_im, rotated_bbs = resizeImageAndBoxes(rotated_im, w, h, rotated_bbs)

        return rotated_im, rotated_bbs
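
For reference, the per-box loop can also be written without OpenCV, which makes it easier to test the box math in isolation. This is only a sketch under my own assumptions: `rotation_matrix_2d` and `rotate_boxes` are names I made up, the matrix follows the formula given in the OpenCV documentation for `getRotationMatrix2D`, and the rounding and the canvas translation from my function are left out.

```python
import numpy as np


def rotation_matrix_2d(center, angle_deg, scale=1.0):
    """2x3 affine matrix using the formula documented for cv2.getRotationMatrix2D."""
    cx, cy = center
    a = scale * np.cos(np.deg2rad(angle_deg))
    b = scale * np.sin(np.deg2rad(angle_deg))
    return np.array([[a, b, (1 - a) * cx - b * cy],
                     [-b, a, b * cx + (1 - a) * cy]])


def rotate_boxes(M, bbs):
    """Rotate [x_min, y_min, x_max, y_max] boxes and return their axis-aligned envelopes."""
    bbs = np.asarray(bbs, dtype=np.float64)
    x0, y0, x1, y1 = bbs.T
    # four corners per box, in homogeneous coordinates: shape (N, 4, 3)
    xs = np.stack([x0, x1, x0, x1], axis=1)
    ys = np.stack([y0, y0, y1, y1], axis=1)
    corners = np.stack([xs, ys, np.ones_like(xs)], axis=2)
    rotated = corners @ M.T  # shape (N, 4, 2)
    # envelope: min and max over the four rotated corners (no rounding here)
    return np.concatenate([rotated.min(axis=1), rotated.max(axis=1)], axis=1)


M = rotation_matrix_2d((50, 50), 90)
print(rotate_boxes(M, [[10, 20, 40, 40]]))
```

At 90 degrees the 30 x 20 box simply becomes a 20 x 30 box, which matches what I see from my own function at multiples of 90 degrees.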

The good bounding box looks like:

The not-so-good bounding box looks like:

I am trying to determine whether this is an error in my code or expected behavior. The problem seems less apparent at integer multiples of pi/2 radians (90 degrees), but I would like to achieve tight bounding boxes at any angle of rotation. Any insights would be appreciated.
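
As a sanity check, the formula my code uses for `nW` and `nH` can also be applied to a single box instead of the whole image. The sketch below is not part of my pipeline, and `enclosing_box_size` is a made-up helper name; it only computes the size of the axis-aligned envelope around a rotated `w` x `h` rectangle, which may help separate a coding error from plain geometry.

```python
import numpy as np


def enclosing_box_size(w, h, angle_deg):
    """Width and height of the axis-aligned box around a w x h rectangle rotated by angle_deg."""
    theta = np.deg2rad(angle_deg)
    c, s = abs(np.cos(theta)), abs(np.sin(theta))
    return w * c + h * s, w * s + h * c


w, h = 100, 50
for angle in (0, 45, 90):
    new_w, new_h = enclosing_box_size(w, h, angle)
    growth = (new_w * new_h) / (w * h)  # envelope area relative to the original box
    print(angle, round(new_w, 2), round(new_h, 2), round(growth, 2))
```

For a 100 x 50 box this gives an envelope of about 106 x 106 at 45 degrees, i.e. 2.25 times the original area, while at 0 and 90 degrees the area is unchanged. That at least seems consistent with the problem being less apparent at multiples of 90 degrees.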

Sources:

[OpenCV documentation](https://docs.opencv.org/3.4/da/d54/group__imgproc__transform.html#gafbbc470ce83812914a70abfb604f4326)

[Data Augmentation Discussion](https://blog.paperspace.com/data-augmentation-for-object-detection-rotation-and-shearing/)

[Mathematics of rotation around an arbitrary point in 2 dimensions](https://math.stackexchange.com/questions/2093314/rotation-matrix-of-rotation-around-a-point-other-than-the-origin)