I've bench-marked the runtime of the two learners and also took two screenshots of the {htop} while {ranger} and {svm} was training to make my point more clearer. As stated in the title of this post, my question is the reason Why train/predict in svm is so slow compare to other learners (in this case ranger)? Is it related to the underlying structure of the learners? Or I am making a mistake in the code? Or...? Any help is appreciated.

htop when ranger is training; all threads are used.

htop when ranger is training; all threads are used.

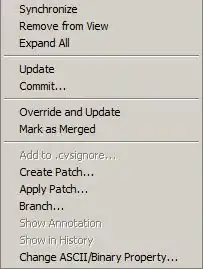

htop when svm is training; only 2 threads are used.

htop when svm is training; only 2 threads are used.

code:

library(mlr3verse)

library(future.apply)

#> Loading required package: future

library(future)

library(lgr)

library(data.table)

file <- 'https://drive.google.com/file/d/1Y3ao-SOFH/view?usp=sharing'

#you can download the file using this link.

destfile = '/../Downloads/sample.RData'

download.file(file, destfile)

# pre-processing

sample$clc3_red <- as.factor(sample$clc3_red)

sample$X <- NULL

#> Warning in set(x, j = name, value = value): Column 'X' does not exist to remove

sample$confidence <- NULL

sample$ecoregion_id <- NULL

sample$gsw_occurrence_1984_2019 <- NULL

tsk_clf <- mlr3::TaskClassif$new(id = 'sample', backend = sample, target = "clc3_red")

tsk_clf$col_roles$group = 'tile_id' #spatial CV

tsk_clf$col_roles$feature = setdiff(tsk_clf$col_roles$feature , 'tile_id')

tsk_clf$col_roles$feature = setdiff(tsk_clf$col_roles$feature , 'x')

tsk_clf$col_roles$feature = setdiff(tsk_clf$col_roles$feature , 'y')

# 2 learners for benchmarking

svm <- lrn("classif.svm", type = "C-classification", kernel = "radial", predict_type = "response")

ranger <- lrn("classif.ranger", predict_type = "response", importance = "permutation")

# ranger parallel

plan(multicore)

time <- Sys.time()

ranger$

train(tsk_clf)$

predict(tsk_clf)$

score()

#> Warning: Dropped unused factor level(s) in dependent variable: 333, 335, 521,

#> 522.

#> classif.ce

#> 0.0116

Sys.time() - time

#> Time difference of 20.12981 secs

# svm parallel

plan(multicore)

time <- Sys.time()

svm$

train(tsk_clf)$

predict(tsk_clf)$

score()

#> classif.ce

#> 0.4361

Sys.time() - time

#> Time difference of 55.13694 secs

Created on 2021-01-12 by the reprex package (v0.3.0)

sessionInfo()

#> R version 4.0.2 (2020-06-22)

#> Platform: x86_64-pc-linux-gnu (64-bit)

#> Running under: Ubuntu 20.04.1 LTS

#>

#> Matrix products: default

#> BLAS: /opt/microsoft/ropen/4.0.2/lib64/R/lib/libRblas.so

#> LAPACK: /opt/microsoft/ropen/4.0.2/lib64/R/lib/libRlapack.so

#>

#> locale:

#> [1] LC_CTYPE=en_US.UTF-8 LC_NUMERIC=C

#> [3] LC_TIME=en_GB.UTF-8 LC_COLLATE=en_US.UTF-8

#> [5] LC_MONETARY=en_GB.UTF-8 LC_MESSAGES=en_US.UTF-8

#> [7] LC_PAPER=en_GB.UTF-8 LC_NAME=C

#> [9] LC_ADDRESS=C LC_TELEPHONE=C

#> [11] LC_MEASUREMENT=en_GB.UTF-8 LC_IDENTIFICATION=C

#>

#> attached base packages:

#> [1] stats graphics grDevices utils datasets methods base

#>

#> other attached packages:

#> [1] data.table_1.12.8 lgr_0.3.4 future.apply_1.6.0

#> [4] future_1.18.0 mlr3verse_0.1.3 paradox_0.3.0

#> [7] mlr3viz_0.1.1 mlr3tuning_0.1.2 mlr3pipelines_0.1.3

#> [10] mlr3learners_0.2.0 mlr3filters_0.2.0 mlr3_0.3.0

#>

#> loaded via a namespace (and not attached):

#> [1] Rcpp_1.0.5 compiler_4.0.2 pillar_1.4.6 highr_0.8

#> [5] class_7.3-17 mlr3misc_0.3.0 tools_4.0.2 digest_0.6.25

#> [9] uuid_0.1-4 lattice_0.20-41 evaluate_0.14 lifecycle_0.2.0

#> [13] tibble_3.0.3 checkmate_2.0.0 gtable_0.3.0 pkgconfig_2.0.3

#> [17] rlang_0.4.7 Matrix_1.2-18 parallel_4.0.2 yaml_2.2.1

#> [21] xfun_0.15 e1071_1.7-3 ranger_0.12.1 withr_2.2.0

#> [25] stringr_1.4.0 knitr_1.29 globals_0.12.5 vctrs_0.3.2

#> [29] grid_4.0.2 glue_1.4.1 listenv_0.8.0 R6_2.4.1

#> [33] rmarkdown_2.3 ggplot2_3.3.2 magrittr_1.5 mlr3measures_0.2.0

#> [37] codetools_0.2-16 backports_1.1.8 scales_1.1.1 htmltools_0.5.0

#> [41] ellipsis_0.3.1 colorspace_1.4-1 stringi_1.4.6 munsell_0.5.0

#> [45] crayon_1.3.4