```python
sampleDf = spark.createDataFrame([(1, 'A', 2021, 1, 5), (1, 'B', 2021, 1, 6), (1, 'C', 2021, 1, 7)], ['msg_id', 'msg', 'year', 'month', 'day'])

sampleDf.show()

sampleDf.write.format("parquet").option("path", "/mnt/datalake/test").mode("append").partitionBy("msg_id", "year", "month", "day").saveAsTable("test_rawais")
```
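With `partitionBy("msg_id", "year", "month", "day")`, Spark writes Hive-style partition directories named `column=value`, nested in the order the columns are given, and keeps only the non-partition column (`msg`) inside the Parquet files. The pure-Python sketch below does not call Spark; the helper `partition_dirs` is hypothetical and only reproduces that naming rule for the three sample rows, i.e. the layout that a later conversion has to read back as partition columns.

```python
from typing import Dict, List, Sequence, Tuple


def partition_dirs(base_path: str,
                   rows: Sequence[Tuple],
                   columns: Sequence[str],
                   partition_cols: Sequence[str]) -> List[str]:
    """Hypothetical helper: sorted, de-duplicated partition directories for `rows`."""
    dirs = set()
    for row in rows:
        record: Dict[str, object] = dict(zip(columns, row))
        # One "col=value" segment per partition column, in partitionBy order
        segments = [f"{col}={record[col]}" for col in partition_cols]
        dirs.add("/".join([base_path.rstrip("/")] + segments))
    return sorted(dirs)


rows = [(1, 'A', 2021, 1, 5), (1, 'B', 2021, 1, 6), (1, 'C', 2021, 1, 7)]
columns = ['msg_id', 'msg', 'year', 'month', 'day']

for d in partition_dirs("/mnt/datalake/test", rows, columns,
                        ["msg_id", "year", "month", "day"]):
    print(d)
```

Each sample row lands in its own `day=` directory under `msg_id=1/year=2021/month=1/`.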

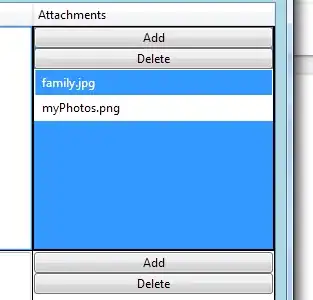

This results in the following table:

Now, when I convert this Parquet table to a Delta table, I get the following error:

If I create a new Delta table directly instead of using `CONVERT TO DELTA`, it works.
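One detail worth checking: when Parquet data is converted by path, Delta Lake has no catalog entry to read the partitioning from, so the path-based form of `CONVERT TO DELTA` needs a `PARTITIONED BY` clause that lists the partition columns and their types. The sketch below uses a hypothetical helper, `convert_to_delta_sql`, that only builds that statement as a string. It assumes the partition columns are `BIGINT`, because PySpark infers `LongType` for Python ints in `createDataFrame`. Since the error text isn't shown, this is not a confirmed fix, just the shape of the statement.

```python
from typing import Sequence, Tuple


def convert_to_delta_sql(parquet_path: str,
                         partition_schema: Sequence[Tuple[str, str]]) -> str:
    """Hypothetical helper: build a path-based CONVERT TO DELTA statement."""
    stmt = f"CONVERT TO DELTA parquet.`{parquet_path}`"
    cols = ", ".join(f"{name} {dtype}" for name, dtype in partition_schema)
    if cols:
        # Partition columns must match the col=value directories on disk
        stmt += f" PARTITIONED BY ({cols})"
    return stmt


# Assumption: BIGINT, since createDataFrame infers LongType for Python ints
sql = convert_to_delta_sql(
    "/mnt/datalake/test",
    [("msg_id", "BIGINT"), ("year", "BIGINT"), ("month", "BIGINT"), ("day", "BIGINT")],
)
print(sql)
# On a cluster with Delta Lake available, this would be run as: spark.sql(sql)
```

The `delta-spark` Python API exposes the same operation as `DeltaTable.convertToDelta(spark, identifier, partitionSchema)`, where `partitionSchema` can be a DDL string such as `"msg_id bigint, year bigint, month bigint, day bigint"`.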

Any input would help. Thanks.