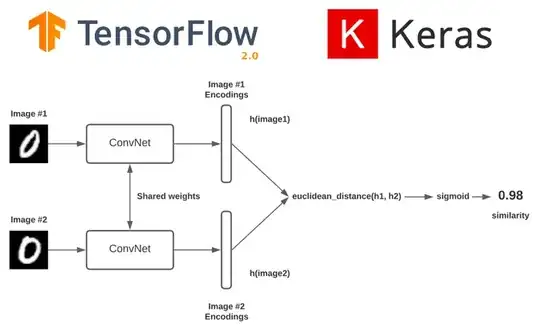

I am developing a Siamese Network for Face Recognition using Keras for 224x224x3 sized images. The architecture of a Siamese Network is like this:

For the CNN model, I am thinking of using InceptionV3, which is available with pretrained ImageNet weights in the `keras.applications` module.

    # Assume all the other modules are imported correctly
    from keras.applications.inception_v3 import InceptionV3

    IMG_SHAPE = (224, 224, 3)

    def return_siamese_net():
        left_input = Input(IMG_SHAPE)
        right_input = Input(IMG_SHAPE)

        model1 = InceptionV3(include_top=False, weights="imagenet", input_tensor=left_input)   # Left SubConvNet
        model2 = InceptionV3(include_top=False, weights="imagenet", input_tensor=right_input)  # Right SubConvNet

        # Do Something here
        distance_layer = # Do Something
        prediction = Dense(1, activation='sigmoid')(distance_layer)  # Outputs 1 if the images match and 0 if they do not
        siamese_net = # Do Something
        return siamese_net

    model = return_siamese_net()

I get an error, which I assume is because the model is pretrained, and I am now stuck on implementing the distance layer for the twin network.

What should I add in between to make this Siamese Network work?
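
For reference, here is a minimal sketch of one way the gaps could be filled in. It is not necessarily the only or best approach. It assumes TensorFlow 2.x with `tensorflow.keras` (the question imports from `keras` directly), and the names `build_siamese_net`, `encoder`, `left_embedding`, and `right_embedding` are made up for illustration. The main change is that a single InceptionV3 instance is applied to both inputs, so the two branches share weights. Building two separate instances gives two independent sets of weights and may also trigger a duplicate layer name error when both are combined into one model. The distance used here is an element-wise absolute difference (L1), which is a common choice but still an assumption.

```python
# Sketch only: assumes TensorFlow 2.x and its bundled Keras (tensorflow.keras).
import tensorflow as tf
from tensorflow.keras.applications.inception_v3 import InceptionV3
from tensorflow.keras.layers import Dense, Input, Lambda
from tensorflow.keras.models import Model

IMG_SHAPE = (224, 224, 3)


def build_siamese_net(weights="imagenet"):
    """Hypothetical helper: returns a two-input Siamese model."""
    left_input = Input(IMG_SHAPE)
    right_input = Input(IMG_SHAPE)

    # One encoder shared by both branches. pooling="avg" collapses the
    # final feature map into a single embedding vector per image.
    encoder = InceptionV3(
        include_top=False,
        weights=weights,
        input_shape=IMG_SHAPE,
        pooling="avg",
    )

    # Calling the same model object twice reuses the same weights.
    left_embedding = encoder(left_input)
    right_embedding = encoder(right_input)

    # Element-wise absolute difference between the two embeddings.
    distance_layer = Lambda(lambda t: tf.abs(t[0] - t[1]))(
        [left_embedding, right_embedding]
    )

    # Sigmoid score: close to 1 for a match, close to 0 otherwise.
    prediction = Dense(1, activation="sigmoid")(distance_layer)

    return Model(inputs=[left_input, right_input], outputs=prediction)
```

Calling `build_siamese_net()` downloads the ImageNet weights on first use; passing `weights=None` builds the same graph with random initialization. The returned model expects a list of two image batches, for example `model.predict([left_batch, right_batch])`.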