I am working on image registration of OCT data in Python. I would like to locate the regions in my registered target image where registration from the source images has actually occurred. What techniques are available for this?

Any suggestions on how to proceed are also welcome. So far I have done a trial registration on two images; the goal is to register a large dataset.

My code is given below:

    # Import libraries.
    import cv2
    import numpy as np
    import matplotlib.pyplot as plt
    # compare_ssim is no longer available in skimage.measure in recent
    # scikit-image releases; skimage.metrics.structural_similarity replaces it.
    from skimage.metrics import structural_similarity


    def imageRegistration():
        # Open the image files.
        path = 'D:/Fraunhofer Thesis/LatestPythonImplementations/Import_OCT_Vision/sliceImages(_x_)/'
        image1 = cv2.imread(path + '104.png')
        image2 = cv2.imread(path + '0.png')

        # Convert to greyscale.
        img1 = cv2.cvtColor(image1, cv2.COLOR_BGR2GRAY)
        img2 = cv2.cvtColor(image2, cv2.COLOR_BGR2GRAY)
        height, width = img2.shape

        # Create ORB detector with 5000 features.
        orb_detector = cv2.ORB_create(5000)

        # Find keypoints and descriptors.
        # The first arg is the image, the second arg is the mask
        # (which is not required in this case).
        kp1, d1 = orb_detector.detectAndCompute(img1, None)
        kp2, d2 = orb_detector.detectAndCompute(img2, None)

        # Match features between the two images with a brute-force matcher,
        # using Hamming distance as the measurement mode.
        matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
        matches = matcher.match(d1, d2)

        # Sort matches by Hamming distance. sorted() is used because match()
        # may return a tuple (which has no .sort()) in recent OpenCV versions.
        matches = sorted(matches, key=lambda x: x.distance)

        # Keep the best 90 % of the matches (factor 0.9, not 90).
        matches = matches[:int(len(matches) * 0.9)]
        no_of_matches = len(matches)

        # Define empty matrices of shape no_of_matches * 2.
        p1 = np.zeros((no_of_matches, 2))
        p2 = np.zeros((no_of_matches, 2))
        for i in range(len(matches)):
            p1[i, :] = kp1[matches[i].queryIdx].pt
            p2[i, :] = kp2[matches[i].trainIdx].pt

        # Find the homography matrix.
        homography, mask = cv2.findHomography(p1, p2, cv2.RANSAC)

        # Use this matrix to transform the coloured image
        # with respect to the reference image.
        transformed_img = cv2.warpPerspective(image1, homography, (width, height))

        # Save the output.
        cv2.imwrite('output.jpg', transformed_img)


    # The following code measures the difference between the source image,
    # the target image and the registered image.
    # An MSE of 0 means the images are identical; the larger the value,
    # the larger the difference.
    def findingDifferenceMSE():
        path = 'D:/Fraunhofer Thesis/LatestPythonImplementations/Import_OCT_Vision/sliceImages(_x_)/'
        image1 = cv2.imread(path + '104.png')
        image2 = cv2.imread(path + '0.png')
        image3 = cv2.imread('D:/Fraunhofer Thesis/LatestPythonImplementations/Import_OCT_Vision/output.jpg')

        # Mean of the squared differences over all pixels and channels.
        err = np.mean((image1.astype("float") - image3.astype("float")) ** 2)
        print("MSE:")
        print('Image 104 and output image: ', err)

        err1 = np.mean((image2.astype("float") - image3.astype("float")) ** 2)
        print('Image 0 and output image: ', err1)


    def findingDifferenceSSIM():
        path = 'D:/Fraunhofer Thesis/LatestPythonImplementations/Import_OCT_Vision/sliceImages(_x_)/'
        image1 = cv2.imread(path + '104.png')
        image2 = cv2.imread(path + '0.png')
        image3 = cv2.imread('D:/Fraunhofer Thesis/LatestPythonImplementations/Import_OCT_Vision/output.jpg')

        # structural_similarity expects 2-D inputs by default,
        # so compare the greyscale versions.
        grey1 = cv2.cvtColor(image1, cv2.COLOR_BGR2GRAY)
        grey3 = cv2.cvtColor(image3, cv2.COLOR_BGR2GRAY)
        result1 = structural_similarity(grey1, grey3)
        print(result1)


    # Call the functions.
    imageRegistration()
    findingDifferenceMSE()
    # findingDifferenceSSIM()
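
To make the question more concrete, here is a minimal sketch of one possible way to locate the registered region. It is an illustration under assumptions, not a confirmed solution. It marks every target pixel that maps back inside the source image under the estimated homography, then computes a block-wise MSE only where that mask is set. The helper names `registered_region_mask` and `block_mse_map` and the `block` parameter are hypothetical, and the sketch assumes greyscale (2-D) images and a homography that maps source (x, y) to target (x, y), as `cv2.findHomography(p1, p2, ...)` returns in the code above.

```python
import numpy as np


def registered_region_mask(homography, src_shape, dst_shape):
    """Boolean mask over the target frame: True where a warped source pixel lands.

    src_shape and dst_shape are (height, width) tuples.
    """
    src_h, src_w = src_shape
    dst_h, dst_w = dst_shape
    # Target pixel grid in homogeneous coordinates (x, y, 1).
    ys, xs = np.mgrid[0:dst_h, 0:dst_w]
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)]).astype(float)
    # Map every target pixel back into the source image.
    back = np.linalg.inv(homography) @ pts
    w = back[2]
    valid = w > 1e-12
    safe_w = np.where(valid, w, 1.0)
    sx, sy = back[0] / safe_w, back[1] / safe_w
    eps = 1e-9  # tolerance for floating-point round-off at the borders
    inside = (
        valid
        & (sx >= -eps) & (sx <= src_w - 1 + eps)
        & (sy >= -eps) & (sy <= src_h - 1 + eps)
    )
    return inside.reshape(dst_h, dst_w)


def block_mse_map(reference, registered, mask, block=16):
    """Per-block MSE over masked pixels; NaN where a block has no registered pixels."""
    diff2 = (reference.astype(float) - registered.astype(float)) ** 2
    h, w = mask.shape
    rows, cols = -(-h // block), -(-w // block)  # ceiling division
    out = np.full((rows, cols), np.nan)
    for r in range(rows):
        for c in range(cols):
            sl = (slice(r * block, (r + 1) * block),
                  slice(c * block, (c + 1) * block))
            m = mask[sl]
            if m.any():
                out[r, c] = diff2[sl][m].mean()
    return out
```

With the code above, this could be called as `registered_region_mask(homography, img1.shape, (height, width))`, and `block_mse_map` could then compare `img2` with a greyscale version of `transformed_img`. Blocks with a low error would suggest regions where the alignment worked; NaN blocks contain no registered data at all. Separately, the `mask` returned by `cv2.findHomography` with `cv2.RANSAC` flags the inlier matches, so the keypoint locations of those inliers show where feature correspondences actually supported the estimated transform.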

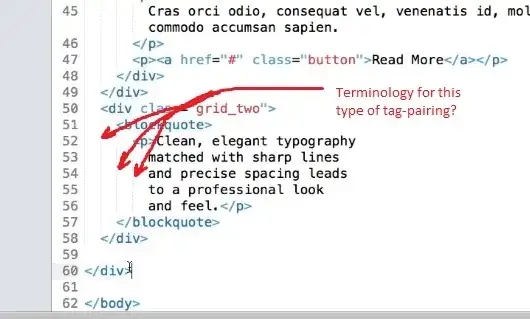

This is the registered image:

This is the first reference image:

This is the second reference image: