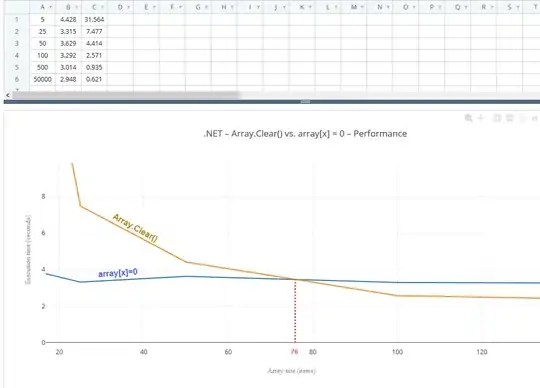

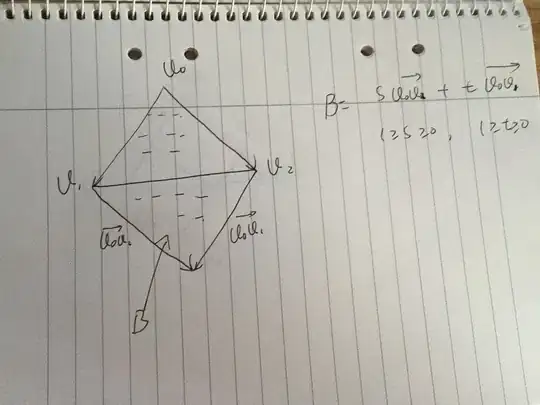

I'm working on a project which involves determining the volume of a transparent liquid (or air if it proves easier) in a confined space.

The images I'm working with are a background image of the container without any liquid and a foreground image, which in rare cases may also be empty but most of the time is partly filled with some amount of liquid.

While it may seem like a pretty straightforward smooth-and-threshold problem, it has proven somewhat more difficult. I have a large set of these background/foreground image pairs, and I can't seem to find an approach robust enough to work on all of them. My work so far involves smoothing and thresholding the foreground image, then applying a morphological closing to clean up the result.

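    import cv2 as cv
    import numpy as np

    # Load both images as grayscale
    bg_image = cv.imread("bg_image", cv.IMREAD_GRAYSCALE)
    fg_image = cv.imread("fg_image", cv.IMREAD_GRAYSCALE)

    # Smooth the foreground, then apply a fixed inverse threshold at 186
    blur_fg = cv.GaussianBlur(fg_image, (5, 5), sigmaX=0, sigmaY=0)
    thresholded_image = cv.threshold(blur_fg, 186, 255, cv.THRESH_BINARY_INV)[1]

    # Close small gaps with a 4x2 (rows x columns) kernel
    kernel = np.ones((4, 2), np.uint8)
    closing = cv.morphologyEx(thresholded_image, cv.MORPH_CLOSE, kernel)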

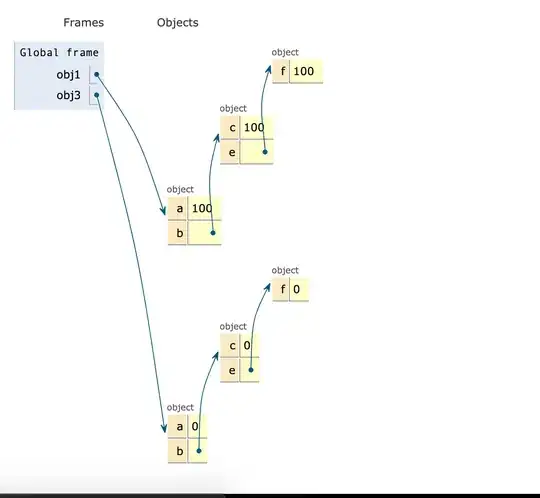

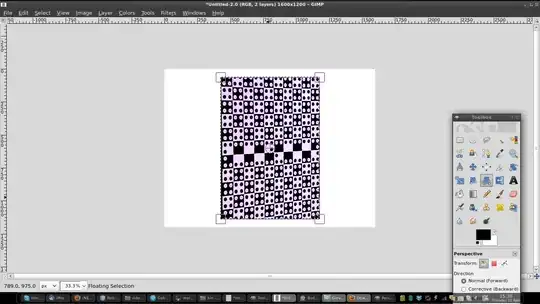

The results vary. Here is an example where it goes well:

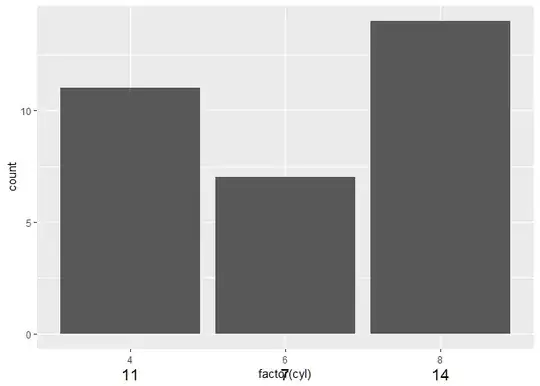

In other examples, it doesn't go as well:

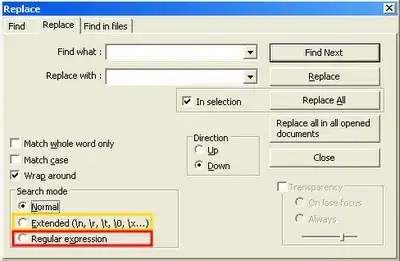

Aside from that, I have also tried:

- Subtraction of the background and foreground images

- Contrast stretching

- Histogram equalization

- Other thresholding techniques such as Otsu

The main issue is that the pixel intensities of air and liquid sometimes overlap (and the contrast is pretty low in general), causing inaccurate estimates. I am leaning towards using the edge that occurs between the liquid and the air, but I'm not really sure how.
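One way the edge idea might be sketched, assuming the liquid surface is roughly horizontal in the image (an assumption that may not hold for every pair): average the background-subtracted image along each row, then look for the row where the mean just below differs most from the mean just above. The name `surface_row`, the `half_window` parameter, and the synthetic arrays are all hypothetical.

```python
import numpy as np

def surface_row(bg, fg, half_window=5):
    """Row index where the row-averaged difference jumps the most (hypothetical helper)."""
    # Signed difference in float so negative values are not clipped.
    diff = fg.astype(np.float32) - bg.astype(np.float32)
    # Averaging across columns trades horizontal detail for noise reduction.
    profile = diff.mean(axis=1)
    h = profile.size
    scores = np.zeros(h, dtype=np.float32)
    for r in range(half_window, h - half_window + 1):
        above = profile[r - half_window:r].mean()
        below = profile[r:r + half_window].mean()
        # A large gap between the two windows marks a step edge at row r.
        scores[r] = abs(below - above)
    return int(np.argmax(scores))

# Synthetic stand-ins (assumption): the "liquid" starts at row 60.
bg = np.full((100, 60), 200, np.uint8)
fg = bg.copy()
fg[60:, :] = 170

row = surface_row(bg, fg)
# Filled fraction of the image height; a proxy only, since real volume
# depends on the container geometry.
fill = (fg.shape[0] - row) / fg.shape[0]
```

Comparing two window means rather than single neighboring rows makes the score less sensitive to pixel noise, at the cost of a slightly blurred edge position.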

I don't want to overflow with information here so I'm leaving it at that. I am grateful for any suggestions and can provide more information if necessary.

EDIT:

Here are some sample images to play around with.