I am trying to render a 3D point cloud from depth data that I saved from an OpenGL framebuffer. I rendered a model centered at (0, 0, 0) and captured depth maps from n different, known viewpoints. The depth maps saved successfully, and now I want to extract x, y, z coordinates from them. To do this, I back-project each point from the image to the world using the equation P = K_inv [R|t]_inv * p.
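
For reference, one common way to write that back-projection explicitly is given below. It assumes the pinhole convention in which [R|t] maps world coordinates to camera coordinates, with p = (u, v, 1)^T a homogeneous pixel and z its linear depth along the camera's viewing axis (not the raw, nonlinear depth-buffer value). The symbols X_c, X_w, and z are introduced here for illustration only:

$$
X_c = z\,K^{-1} p, \qquad X_w = R^{-1}(X_c - t) = R^{\top}(X_c - t)
$$

The second equality holds because R is a rotation matrix, so its inverse is its transpose.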

To calculate the camera intrinsic matrix, I used the parameters of the OpenGL projection matrix, glm::perspective(fov, aspect, near_plane, far_plane). I calculated the intrinsic matrix K from these parameters.
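
For comparison, here is a minimal C++ sketch of one way pinhole intrinsics can be related to the glm::perspective arguments. It is not necessarily the calculation used in this post. The struct `Intrinsics` and the functions `intrinsicsFromPerspective` and `linearizeDepth` are hypothetical helpers, not glm API. The sketch assumes that fov is the vertical field of view in radians, that pixels are square (aspect equals width / height), that the principal point is at the image centre, and that depth uses the default [0, 1] window range with glm's default [-1, 1] clip-space depth:

```cpp
#include <cassert>
#include <cmath>

// Pinhole intrinsics in pixel units (hypothetical helper type, not part of glm).
struct Intrinsics {
    double fx, fy, cx, cy;
};

// Derive focal lengths and principal point from the vertical field of view
// and the framebuffer size.
// Assumptions: fovy_rad is in radians, pixels are square, and the principal
// point sits at the image centre.
Intrinsics intrinsicsFromPerspective(double fovy_rad, int width, int height) {
    assert(width > 0 && height > 0);
    const double fy = 0.5 * height / std::tan(0.5 * fovy_rad);
    return {fy, fy, 0.5 * width, 0.5 * height};
}

// Convert a raw depth-buffer sample d in [0, 1] into a positive distance
// along the viewing axis, in the same units as near_plane and far_plane.
// Assumption: standard perspective projection with NDC depth in [-1, 1]
// and the default glDepthRange(0, 1).
double linearizeDepth(double d, double near_plane, double far_plane) {
    const double z_ndc = 2.0 * d - 1.0;
    return 2.0 * near_plane * far_plane /
           (far_plane + near_plane - z_ndc * (far_plane - near_plane));
}
```

With a 640x480 framebuffer and a 90 degree vertical field of view, this gives fx = fy of approximately 240, cx = 320, and cy = 240. A depth sample of 0 maps to the near plane and a sample of 1 maps to the far plane.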

If I transform the coordinates in the camera frame only (i.e., without the extrinsic transformation [R|t]), I get a 3D model for a single image. To fuse multiple depth maps, I also need the extrinsic transformation, which I calculate from the OpenGL look-at matrix glm::lookAt(eye=n_viewpoint_coordinates, center=(0, 0, 0), up=(0, 1, 0)). The extrinsic matrix is calculated as below (ref: http://ksimek.github.io/2012/08/22/extrinsic/).

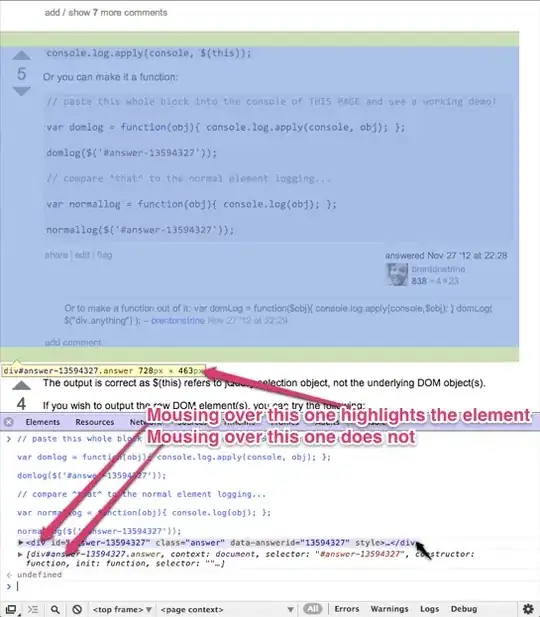

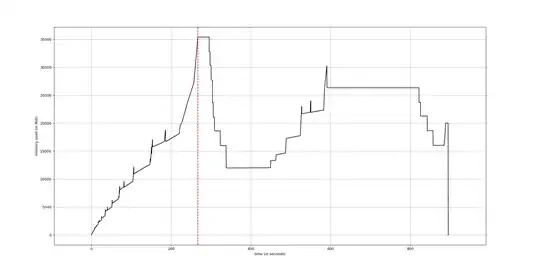
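
To make the conventions concrete, here is a minimal, self-contained C++ sketch of a look-at extrinsic transform and its inverse. It is an illustration, not the code used in this post. It re-implements the right-handed look-at construction with plain arrays instead of calling glm, and all names (`Vec3`, `Extrinsics`, `lookAtExtrinsics`, `worldToCamera`, `cameraToWorld`, `pixelToCamera`) are hypothetical. It assumes the OpenGL camera convention (x right, y up, camera looking down -Z), pixel rows counted from the bottom as glReadPixels returns them, and a depth z that has already been linearized:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

Vec3 sub(const Vec3& a, const Vec3& b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }
double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]};
}
Vec3 normalize(const Vec3& a) {
    const double len = std::sqrt(dot(a, a));
    assert(len > 0.0);
    return {a[0] / len, a[1] / len, a[2] / len};
}

// World-to-camera rigid transform: X_cam = R * X_world + t.
// The rows of R are the camera's right, up, and backward axes in world coordinates.
struct Extrinsics {
    std::array<Vec3, 3> R;
    Vec3 t;
};

// Right-handed look-at: the camera sits at eye and looks toward center along its -Z axis.
Extrinsics lookAtExtrinsics(const Vec3& eye, const Vec3& center, const Vec3& up) {
    const Vec3 f = normalize(sub(center, eye));  // forward
    const Vec3 s = normalize(cross(f, up));      // right
    const Vec3 u = cross(s, f);                  // true up
    const Vec3 b = {-f[0], -f[1], -f[2]};        // backward (+Z of the camera)
    Extrinsics e;
    e.R[0] = s;
    e.R[1] = u;
    e.R[2] = b;
    e.t = {-dot(s, eye), -dot(u, eye), -dot(b, eye)};
    return e;
}

Vec3 worldToCamera(const Extrinsics& e, const Vec3& p) {
    return {dot(e.R[0], p) + e.t[0], dot(e.R[1], p) + e.t[1], dot(e.R[2], p) + e.t[2]};
}

// Inverse of worldToCamera: X_world = R^T * (X_cam - t).
Vec3 cameraToWorld(const Extrinsics& e, const Vec3& p) {
    const Vec3 q = sub(p, e.t);
    return {e.R[0][0] * q[0] + e.R[1][0] * q[1] + e.R[2][0] * q[2],
            e.R[0][1] * q[0] + e.R[1][1] * q[1] + e.R[2][1] * q[2],
            e.R[0][2] * q[0] + e.R[1][2] * q[1] + e.R[2][2] * q[2]};
}

// Back-project pixel (u, v) with positive linear depth z into OpenGL camera space.
// Assumptions: v counts rows from the bottom and the camera looks down -Z.
// Pass u + 0.5 and v + 0.5 to sample at pixel centres.
Vec3 pixelToCamera(double u, double v, double z,
                   double fx, double fy, double cx, double cy) {
    return {(u - cx) * z / fx, (v - cy) * z / fy, -z};
}
```

Under these assumptions, a world point for a given pixel would be obtained as `cameraToWorld(e, pixelToCamera(...))`, where `e` is built from that viewpoint's eye position. Note that the look-at matrix itself maps world to camera, so fusing depth maps in world space uses its inverse.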

But when I fuse two depth images, they are misaligned. I think the extrinsic matrix is not correct. I also tried using the glm::lookAt matrix directly, but that does not work either. The fused model snapshot is shown below.

Can someone suggest what is wrong with my approach? Is it the extrinsic matrix that is wrong (I am fairly sure it is)?