I'm trying to run my Spark program with the spark-submit command (I'm working in Scala). I specified the master address, the class name, the JAR file with all dependencies, the input file, and the output file, but I'm getting an error:

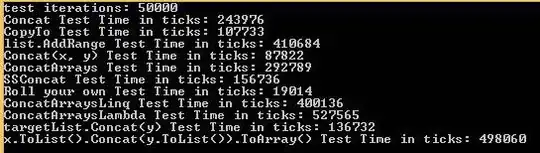

Exception in thread "main" org.apache.spark.sql.AnalysisException: Multiple sources found for csv (org.apache.spark.sql.execution.datasources.v2.csv.CSVDataSourceV2, org.apache.spark.sql.execution.datasources.csv.CSVFileFormat), please specify the fully qualified class name.;

Above is a screenshot of the error, followed by its text. What does it mean, and how can I fix it?

Thank you