While i exploited that there are only two variables in the other answer, here we focus on doing efficient function-evaluation!

Exploiting the inherent simplicity of the objective allows to reuse your original SLSQP-based optimization while being ready for additional segments / variables in the future (as indicated in a comment) as long as the structure stays the same.

The optimization-cost should approximately be equal to the cost of a single function-evaluation!

Idea

- Original function is as inefficient as possible due to potential memory-allocation and array-copies (e.g.

np.stack())

- Could be improved by storage-order parallel evaluation without memory-ops (see code)

- In general very costly to evaluate due to huge data involved (in each iteration of some optimizer)

- Reformulation possible!

- Do as many calculations as possible a-priori to speed-up calculations depending on

the optimization-variables only!

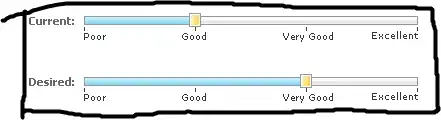

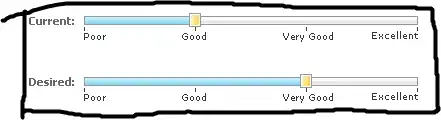

Reformulation is basically following this from WolframAlpha:

Remark:

- The task is presented in matrix-form, but it's really just a flattened / element-wise calculation

- This has been exploited directly before asking WolframAlpha for ideas: see input!

- For comparison:

W1 = xW2 = yX1 = v_i (but 1d)X2 = w_i (but 1d)A = a_i and b_i (decomposed + 1d)

Code

import numpy as np

from scipy.optimize import minimize

from time import perf_counter as pc

np.random.seed(0)

# random fake-data

# ################

segment_1 = np.random.rand(5000, 10000) * 7.13

segment_2 = np.random.rand(5000, 10000) * np.random.normal(size=(5000, 10000))

A = np.random.rand(5000, 20000)

# constraint: probability-simplex

# ###############################

def constraint(x):

return np.sum(x) - 1.

# objective

# #########

# original -> very inefficient due to lots of potential memcopy

# -------------------------------------------------------------

def objective(x):

W1=x[0]

W2=x[1]

X=np.hstack((W1*segment_1, W2*segment_2))

return np.sum((X-A)**2)

# modified -> (hopefully) no memory-allocation at all; (hopefully) storage-order parallel iteration

# -------------------------------------------------------------------------------------------------

def objective_perf(x):

return np.sum( ((x[0] * segment_1) - A[:, :segment_1.shape[1]])**2 ) \

+ np.sum( ((x[1] * segment_2) - A[:, segment_1.shape[1]:])**2 )

# heavily reformulated

# ####################

start_time = pc()

# pre-calc: flatten out matrices as we are doing element-wise reasoning anyway

flat_A_segment_A = A[:, :segment_1.shape[1]].ravel()

flat_A_segment_B = A[:, segment_1.shape[1]:].ravel()

flat_segment_A = segment_1.ravel()

flat_segment_B = segment_2.ravel()

# pre-calc: sub-expressions (see WolframAlpha image!) / sum_squares(vec) = np.dot(vec, vec)

comp_0 = np.dot(flat_A_segment_A, flat_A_segment_A) + np.dot(flat_A_segment_B, flat_A_segment_B)

comp_1 = -2 * np.dot(flat_A_segment_A, flat_segment_A)

comp_2 = -2 * np.dot(flat_A_segment_B, flat_segment_B)

comp_3 = np.dot(flat_segment_A, flat_segment_A)

comp_4 = np.dot(flat_segment_B, flat_segment_B)

end_time = pc()

print('pre-calc secs: {}\n'.format(end_time - start_time))

# pre-calc based function-eval / basically *for free*

def objective_high_perf(x):

return comp_0 + x[0] * comp_1 + x[1] * comp_2 + x[0]**2 * comp_3 + x[1]**2 * comp_4

# SLSQP-based solving

# -------------------

cons = {'type': 'eq', 'fun': constraint}

x0 = [1.0, 0.0]

print('-----\nVariant: "objective"\n-----')

start_time = pc()

sol = minimize(objective_perf, x0, method='SLSQP', constraints=cons)

end_time = pc()

print(sol)

print('secs: {}\n'.format(end_time - start_time))

print('-----\nVariant: "objective_perf"\n-----')

start_time = pc()

sol = minimize(objective_perf, x0, method='SLSQP', constraints=cons)

end_time = pc()

print(sol)

print('secs: {}\n'.format(end_time - start_time))

print('-----\nVariant: "objective_high_perf"\n-----')

start_time = pc()

sol = minimize(objective_high_perf, x0, method='SLSQP', constraints=cons)

end_time = pc()

print(sol)

print('secs: {}\n'.format(end_time - start_time))

Output (not on full data due to memory on tablet-like device)

pre-calc secs: 1.1280025999999999

-----

Variant: "objective"

-----

fun: 37044840.62293503

jac: array([29253964., 29253786.])

message: 'Optimization terminated successfully'

nfev: 16

nit: 2

njev: 2

status: 0

success: True

x: array([0.12245548, 0.87754452])

secs: 49.2468216

-----

Variant: "objective_perf"

-----

fun: 37044840.62293503

jac: array([29253964., 29253786.])

message: 'Optimization terminated successfully'

nfev: 16

nit: 2

njev: 2

status: 0

success: True

x: array([0.12245548, 0.87754452])

secs: 49.036501799999996

-----

Variant: "objective_high_perf"

-----

fun: 37044840.622934975

jac: array([29253784. , 29253777.5])

message: 'Optimization terminated successfully'

nfev: 15

nit: 2

njev: 2

status: 0

success: True

x: array([0.12245547, 0.87754453])

secs: 0.010043600000003039

Expectation

I would guess your 10 minute run should be < 10 secs now.

In my example, ~50 secs have been reduced to ~1.13 + ~0.01 = ~1.14 secs

.

.