You can try to build a model of the background and then weight each input pixel by that model. The resulting gain should be relatively constant across most of the image. These are the steps for this method:

- Apply a soft median blur filter to get rid of small noise.

- Get the model of the background via local maximum: apply a very strong close operation with a big structuring element (I’m using a rectangular kernel of size 15).

- Perform gain adjustment: divide 255 by each local maximum pixel, then multiply each input image pixel by this value.

- You should get a nice image where the background illumination is pretty much normalized. Threshold this image to get a binary mask of the text.

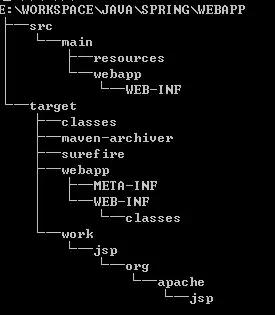

This is the code:

    import numpy as np
    import cv2

    # image path
    path = "C:/opencvImages/sheet01.jpg"

    # Read an image in default mode:
    inputImage = cv2.imread(path)

    # Remove small noise via median:
    filterSize = 5
    imageMedian = cv2.medianBlur(inputImage, filterSize)

    # Get local maximum:
    kernelSize = 15
    maxKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (kernelSize, kernelSize))
    localMax = cv2.morphologyEx(imageMedian, cv2.MORPH_CLOSE, maxKernel, None, None, 1, cv2.BORDER_REFLECT101)

    # Adjust image gain:
    height, width, depth = localMax.shape

    # Create output Mat:
    outputImage = np.zeros(shape=[height, width, depth], dtype=np.uint8)

    for i in range(0, height):
        for j in range(0, width):
            # Get current BGR pixels:
            v1 = inputImage[i, j]
            v2 = localMax[i, j]

            # Gain adjust:
            tempArray = []
            for c in range(0, 3):
                currentPixel = v2[c]
                if currentPixel != 0:
                    gain = 255 / v2[c]
                    gain = v1[c] * gain
                else:
                    gain = 0

                # Gain set and clamp:
                tempArray.append(np.clip(gain, 0, 255))

            # Set pixel vec to out image:
            outputImage[i, j] = tempArray

    # Convert BGR to grayscale:
    grayscaleImage = cv2.cvtColor(outputImage, cv2.COLOR_BGR2GRAY)

    # Threshold:
    threshValue = 110
    _, binaryImage = cv2.threshold(grayscaleImage, threshValue, 255, cv2.THRESH_BINARY)

    # Write image:
    imageFilename = "C:/opencvImages/binaryMask2.png"
    cv2.imwrite(imageFilename, binaryImage)

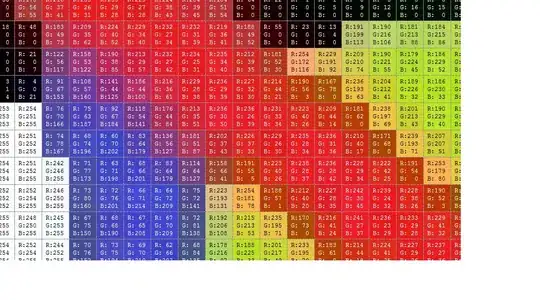

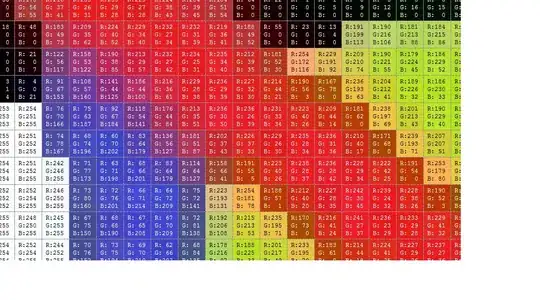

I get the following results testing the complete image:

And the cropped text:

Please note that the gain adjustment operations are not vectorized. The script is slow, mainly because I'm just starting with Python and don’t know the proper NumPy syntax to speed up this operation. I've been using C++ for a long time, so feel free to further improve the code.
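As a possible speed-up, here is a minimal NumPy sketch of the same gain adjustment without the per-pixel loops. `adjust_gain` is a hypothetical helper name (it is not part of OpenCV or NumPy), and the sketch assumes both arrays are `uint8` images of the same shape. It keeps the original order of operations (255 divided by the local maximum, then multiplied by the input pixel) and sets the output to 0 wherever the local maximum is 0.

```python
import numpy as np


def adjust_gain(input_image, local_max):
    """Scale each pixel by 255 / local maximum (hypothetical helper)."""
    background = local_max.astype(np.float64)

    # gain = 255 / background, computed only where background is non-zero;
    # the remaining entries keep the 0 they were initialised with.
    gain = np.divide(255.0, background,
                     out=np.zeros_like(background),
                     where=background != 0)

    adjusted = input_image.astype(np.float64) * gain

    # Clamp to the valid 8-bit range, then truncate to uint8.
    return np.clip(adjusted, 0, 255).astype(np.uint8)
```

In the script above, `outputImage = adjust_gain(inputImage, localMax)` would stand in for the two nested `for` loops; I would still compare the result against the loop version on a small image before relying on it.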

Edit:

Please be aware that your result can only be as good as the quality of your input. Look at your input and ask yourself, "Is this a good input for an automated process?" (Automated processes are usually not very smart.) The second picture you posted is very low quality. Not only is it blurry, it is also low-res and has compression artifacts. All these factors will hinder automated processing.

With that said, here's an improvement you can include in the original:

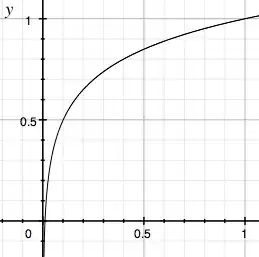

Try to normalize brightness-contrast on the grayscale output:

    grayscaleImage = np.uint8(cv2.normalize(grayscaleImage, grayscaleImage, 0, 255, cv2.NORM_MINMAX))
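For intuition, min-max normalization stretches the darkest pixel to 0 and the brightest to 255. The sketch below shows roughly that arithmetic in plain NumPy. `minmax_stretch` is a hypothetical name, and returning all zeros for a flat image is an arbitrary choice made here, not a statement about what `cv2.normalize` does in that case.

```python
import numpy as np


def minmax_stretch(gray):
    """Linearly map [min, max] of a grayscale image to [0, 255] (illustrative)."""
    lo, hi = int(gray.min()), int(gray.max())
    if hi == lo:
        # Flat image: there is no contrast to stretch (arbitrary fallback).
        return np.zeros_like(gray, dtype=np.uint8)

    stretched = (gray.astype(np.float32) - lo) * (255.0 / (hi - lo))
    return np.round(stretched).astype(np.uint8)
```

An image whose values only span 50 to 200 would come out spanning the full 0 to 255 range, which is why the text gets darker relative to the background.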

Your grayscale image goes from this:

to this:

It is a little darker and has better contrast. Let's try to compute the optimal threshold value automatically via Otsu thresholding:

    threshValue, binaryImage = cv2.threshold(grayscaleImage, 0, 255, cv2.THRESH_BINARY+cv2.THRESH_OTSU)

It gets you this:

However, we can adjust the result if we add bias to Otsu's threshold, like this:

    threshValue, binaryImage = cv2.threshold(grayscaleImage, 0, 255, cv2.THRESH_BINARY+cv2.THRESH_OTSU)
    bias = 0.9
    threshValue = bias * threshValue
    _, binaryImage = cv2.threshold(grayscaleImage, threshValue, 255, cv2.THRESH_BINARY)

That's the best quality you can get with these images using this method.
