Below is a dataframe reporting the results of training a neural network on a binary classification problem:

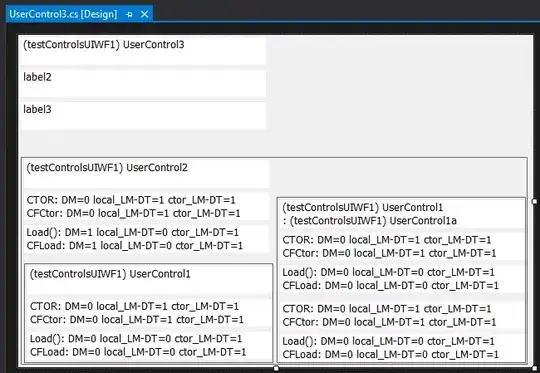

Columns a and b represent the inputs x and y respectively, and the structure of the neural network is as follows:

The columns h1, h2, h3 and o hold the outputs of the nodes of the network in the figure above, after the sigmoid activation function, while delta_h1, delta_h2, delta_h3 and delta_o hold the errors of those nodes. Taking the first row as an example, the output of the neural network is o = 0.45196 while the true value is t = 1. Calculating the error according to binary cross-entropy, we have:

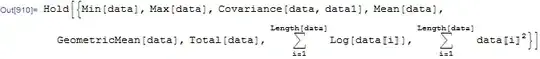

cost = -log10(0.45196) = 0.3449

from which I would expect:

delta_o = 0.45196 - 0.3449 = 0.10706

Instead, the results file reports delta_o = 0.13575. I don't know whether my reasoning is right. Can you tell me where I'm going wrong? Thank you!
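
For anyone checking the numbers, here is a minimal Python sketch that reproduces the arithmetic for the first row. The variable names are my own, and the last formula, `(t - o) * o * (1 - o)`, is only an assumption about how the results file might define delta_o (the delta of a sigmoid output unit under squared error); nothing in the file confirms it.

```python
import math

o = 0.45196  # network output for the first row
t = 1.0      # true label for the first row

# Binary cross-entropy for a single sample, once with the natural log
# and once with the base-10 log, to see which one gives 0.3449.
bce_natural = -(t * math.log(o) + (1 - t) * math.log(1 - o))
bce_base10 = -(t * math.log10(o) + (1 - t) * math.log10(1 - o))

# The subtraction used in the post: output minus cost.
delta_guess = o - bce_base10

# ASSUMPTION (not confirmed by the results file): delta of a sigmoid
# output unit under squared error, i.e. (t - o) * sigmoid'(z),
# where sigmoid'(z) = o * (1 - o).
delta_sigmoid_mse = (t - o) * o * (1 - o)

print(f"BCE (natural log): {bce_natural:.4f}")
print(f"BCE (base-10 log): {bce_base10:.4f}")
print(f"o - cost:          {delta_guess:.5f}")
print(f"(t - o)*o*(1 - o): {delta_sigmoid_mse:.5f}")
```

With these inputs the natural-log cost comes out near 0.7942 and the base-10 value near 0.3449, so the cost above was computed with a base-10 logarithm. The assumed sigmoid delta comes out near 0.13575, the same as the reported delta_o, which suggests (without proving it) that delta_o is a gradient term rather than the output minus the cost.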