I am building an application with AWS AppSync whose primary purpose is to receive telemetry data sent from a mobile application. I am stuck on how to partition and structure the DynamoDB tables. The users of the application belong to different organizations, and each organization has admins who can view only the data for their own organization.

OrganizationA

-->Admin # View all the telemetry data

---->User # Send the telemetry data from their mobile application

Based on some research from these resources, the advised approach is to create a separate table for each time period, e.g., one table per day of telemetry readings.
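To make this concrete, here is a minimal sketch of how I understand the table-per-day idea. The `telemetry` table prefix, the `pk`/`sk` attribute layout, and the choice of the organization as the partition key are all my own assumptions, not something taken from the resources.

```python
from datetime import datetime, timezone

# Hypothetical prefix for the per-day tables (my own naming, not from any doc).
TABLE_PREFIX = "telemetry"


def table_name_for(ts: datetime) -> str:
    """Return the name of the per-day table that a reading at `ts` belongs to (UTC day)."""
    return f"{TABLE_PREFIX}_{ts.astimezone(timezone.utc):%Y_%m_%d}"


def build_item(org_id: str, user_id: str, ts: datetime, payload: dict) -> dict:
    """Build one telemetry item.

    Assumption: pk is the organization, so an admin can query everything for
    their organization; sk is user + timestamp, so readings sort per user.
    """
    ts_utc = ts.astimezone(timezone.utc)
    return {
        "pk": f"ORG#{org_id}",
        "sk": f"USER#{user_id}#{ts_utc.isoformat()}",
        "payload": payload,
    }


reading_time = datetime(2020, 1, 15, 23, 30, tzinfo=timezone.utc)
table = table_name_for(reading_time)
item = build_item("OrganizationA", "user-1", reading_time, {"speed": 12.5})
```

With this layout, an admin's view would be a query on `pk = ORG#OrganizationA` against each day's table, but I am not sure this is the intended key design.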

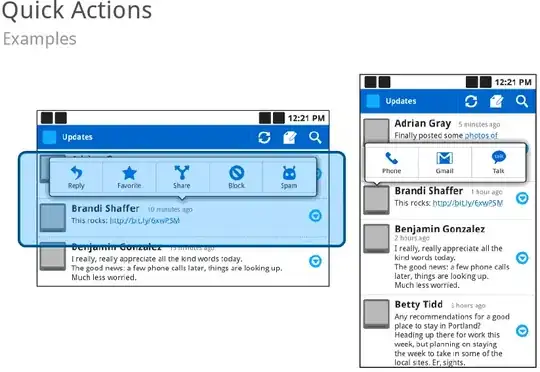

Example (I am not sure what pk is in this example):

I am planning to separate the users with AWS Cognito by attaching custom attributes at sign-up, such as Organization and Role (Admin or User), as per this answer, and then using a Pre-Signup Lambda trigger.
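A rough sketch of the kind of Pre-Signup trigger I have in mind is below. It only validates the attributes and rejects the sign-up if they are missing or invalid. The attribute names `custom:organization` and `custom:role` are hypothetical and would have to be defined as custom attributes on the user pool first.

```python
# Roles I expect to support; taken from the hierarchy above.
ALLOWED_ROLES = {"Admin", "User"}


def lambda_handler(event, context):
    """Cognito Pre-Signup trigger: validate organization and role.

    Raising an exception rejects the sign-up; returning the event lets it proceed.
    """
    attrs = event["request"]["userAttributes"]

    # Hypothetical custom attribute names (Cognito prefixes custom attributes with "custom:").
    organization = attrs.get("custom:organization", "").strip()
    role = attrs.get("custom:role", "")

    if not organization:
        raise ValueError("custom:organization is required")
    if role not in ALLOWED_ROLES:
        raise ValueError("custom:role must be one of: " + ", ".join(sorted(ALLOWED_ROLES)))

    # Cognito expects the (possibly modified) event to be returned.
    return event
```

This does not stop a user from claiming an organization or the Admin role they should not have, which is part of what I am unsure about.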

How should I structure the tables and restrict each admin to their own organization's data?