UPDATE

OK, so after re-reading the original poster's question I realised that I had misunderstood their requirements. I also noticed their comment on Kailan's answer, which slightly changed the requirements again.

So my understanding is that the requirements are now...

- Modify the backend depending on the incoming request path.

- Modify the incoming request path without affecting caching (i.e. the original request path will be what's used in the cache lookup).

For example, if our default backend is httpbin.org and the incoming request is to /services/user/ then we want to switch to another backend (we'll say bbc.co.uk).

But we also want the path sent to that backend to change from /services/user/ to /, while the response from the bbc.co.uk backend is cached under the original request path of /services/user/.

Here is a fiddle that demonstrates this:

https://fiddle.fastlydemo.net/fiddle/e337b8f5

Note: the reason you can't assign a string to req.backend is that it must be assigned a value of the specific BACKEND type.
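To make the type restriction concrete, here is a minimal sketch. It assumes the backend names from my fiddle (F_origin_1 being bbc.co.uk); the names in your own service will differ.

```vcl
sub vcl_recv {
  // Would not compile: "bbc.co.uk" is a STRING, but req.backend expects a BACKEND.
  // set req.backend = "bbc.co.uk";

  // Compiles: F_origin_1 is a backend identifier, not a string.
  set req.backend = F_origin_1;
}
```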

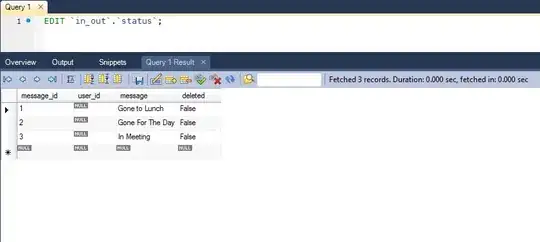

In case the fiddle link stops working, the code was as follows...

vcl_recv

    if (req.url.path ~ "^/services/user/") {
      // pretend www.bbc.co.uk is the correct backend for handling a 'user' request
      set req.backend = F_origin_1;
    }

Note: backends are assigned to incrementing variables F_origin_0, F_origin_1 etc. So in my fiddle example F_origin_0 is httpbin.org while F_origin_1 is bbc.co.uk

vcl_miss

    if (req.url.path ~ "^/services/.*") {
      set bereq.url = "/";
    }

MY ORIGINAL ANSWER

Kailan's answer is correct and should be marked as such, but for completeness I'm going to flesh it out a bit more so the original poster has a clearer conceptual understanding of what's happening...

An incoming request to Varnish is turned into a req object that is passed through various states/subroutines (e.g. vcl_recv == request received, vcl_deliver == request has been processed and a response is ready to be sent back to the client, etc).

Your conditional logic should be added to the vcl_recv subroutine as that is the first 'state' for the incoming request, and so is the earliest point at which you can modify the request.

The flow of the request object through Varnish can be controlled using the return(<state>) directive, which indicates which state to move to next. Varnish has default behaviour, so you don't need to explicitly call return at the end of each subroutine: one will be called for you that moves your request on to the next logical state/subroutine. Whether you explicitly change the default request flow using return therefore depends on what you're trying to do (in your case you don't need to worry about this).
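As a rough illustration of an explicit return versus the default flow, consider the sketch below. The /admin/ path is hypothetical and not part of the original question; it is only there to show a request being sent to vcl_pass instead of a cache lookup.

```vcl
sub vcl_recv {
  // Hypothetical path, used only to illustrate an explicit state change.
  if (req.url.path ~ "^/admin/") {
    // Explicitly skip the cache lookup and move to vcl_pass.
    return(pass);
  }

  // No explicit return for other requests: the default behaviour
  // moves the request on to the cache lookup (vcl_hash, then hit or miss).
}
```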

A typical/default request flow has the request object move from vcl_recv to vcl_hash so Varnish can generate a 'lookup' key (which is used to look up a previously cached response for the request). Then, depending on whether a cached response was found, the request moves to either vcl_miss (nothing cached) or vcl_hit (a cached version was found).
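For reference, the default hash logic on Fastly looks roughly like the sketch below. This is my recollection of their documented default rather than something taken from the fiddle, so treat the exact contents as an assumption and check the Fastly documentation before relying on it.

```vcl
sub vcl_hash {
  // The lookup key is built from the request URL and host as they
  // stand on req at this point in the flow.
  set req.hash += req.url;
  set req.hash += req.http.host;
  #FASTLY hash
  return(hash);
}
```

Because the key is derived from req.url, changing bereq.url later in vcl_miss only alters what is sent to the backend and leaves the cache key based on the original path.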

If there was no cached content, then after vcl_miss a request will be made to your backend/origin server and the response from the backend will be provided to vcl_fetch as a beresp object. Finally, the response is passed on to vcl_deliver where you can modify the response before it's sent back to the client.

The request (req) object is passed through all of these states/subroutines, meaning you can access it at any point during the overall request flow.
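A small sketch of those later states is shown below. The one hour TTL and the X-Original-Path header name are made up for illustration; the point is only that beresp is available in vcl_fetch and that req can still be read in vcl_deliver.

```vcl
sub vcl_fetch {
  // beresp is the response from the backend; here we (arbitrarily)
  // tell the cache to keep it for one hour.
  set beresp.ttl = 1h;
}

sub vcl_deliver {
  // req is still available here and still holds the client's original path.
  // X-Original-Path is a hypothetical debugging header.
  set resp.http.X-Original-Path = req.url.path;
}
```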

If you need more guidance regarding the various VCL subroutines (why they exist, and what you can modify within each of them), then Fastly have them all documented here (along with a nice 'request flow' diagram): https://developer.fastly.com/learning/vcl/using/