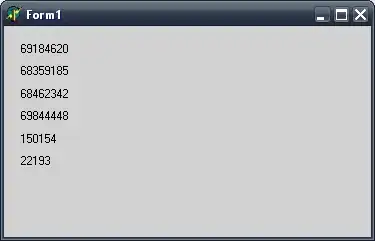

Good day. I am trying to plot a time series with a large amount of data (about 2 billion points) and embed the plot in a PyQt5 application. The HoloViews manual has a whole section on this, "Working with large data using datashader". I am using the time series example from that chapter, but when I run it the plot does not show. I tried running it with Python from VS Code and from a Jupyter notebook. What am I missing?

    import datashader as ds
    import numpy as np
    import holoviews as hv
    import pandas as pd
    from holoviews import opts
    from holoviews.operation.datashader import datashade, shade, dynspread, spread, rasterize
    from holoviews.operation import decimate

    hv.extension('bokeh', 'matplotlib')

    def time_series(T=1, N=100, mu=0.1, sigma=0.1, S0=20):
        """Parameterized noisy time series"""
        dt = float(T) / N
        t = np.linspace(0, T, N)
        W = np.random.standard_normal(size=N)
        W = np.cumsum(W) * np.sqrt(dt)  # standard Brownian motion
        X = (mu - 0.5 * sigma**2) * t + sigma * W
        S = S0 * np.exp(X)  # geometric Brownian motion
        return S

    points = [(0.1 * i, np.sin(0.1 * i)) for i in range(100)]
    hv.Curve(points)

    hv.HoloMap({i: hv.Curve([1, 2, 3 - i], group='Group', label='Label') for i in range(3)}, 'Value')

    dates = pd.date_range(start="2014-01-01", end="2016-01-01", freq='1D')  # or '1min'
    curve = hv.Curve((dates, time_series(N=len(dates), sigma=1)))
    rasterize(curve, width=800).opts(width=800, cmap=['blue'])