I am trying to understand why this code starts working slower with large 1.8 GB file when parts variable is increasing, so more reads and writes are performed in a single iteration. The function is below. In and out files are created with CreateFile() with FILE_FLAG_OVERLAPPED and FILE_FLAG_NO_BUFFERING flags. Time is measured for 8 KB buffer. Completion routine for reading just increases global variable g_readers by one, and completion routine for writing does the same for g_writers.

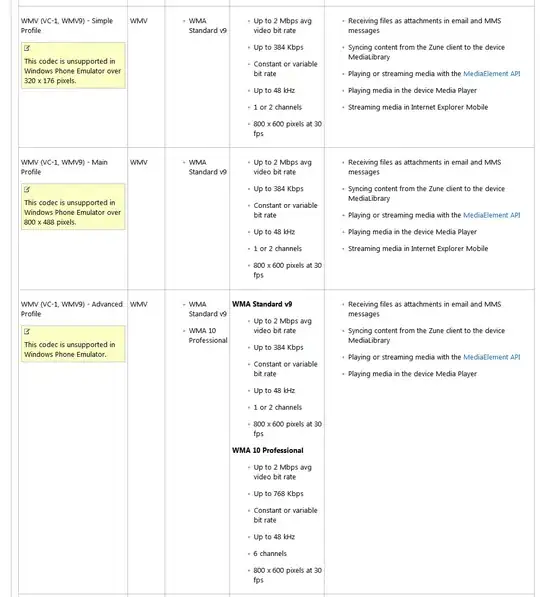

| 1 IO | 2 IOs | 4 IOs | 8 IOs | 12 IOs | 16 IOs | |

|---|---|---|---|---|---|---|

| 1.8 GB | 92 s | 92 s | 59s | 150 s | 268 s | 376 s |

| 121 MB | 7532 ms | 7484 ms | 4034 ms | 2975 ms | 2197 ms | 2178 ms |

| 4.7 MB | 289 ms | 274 ms | 171 ms | 121 ms | 100 ms | 78 ms |

I am using a SATA SSD Samsung 860 evo with NTFS for it. Results with exFAT HDD are just slower, otherwise all the same.

I've read on learn.microsoft.com, that asynchronous IO was intended to use when it is needed to make some IO while making some other calculations, but there is little information on how starting multiple IO operations works. As far as I understand, when making more reads and writes in a single iteration there shouldn't be dramatic changes, as overall amount of work is the same, as there is no parallelism. I've tried to start writing in reading completion routine, but there was no measurable changes for all three cases.

...

void _copyFileByParts(HANDLE existingFile, HANDLE newFile, DWORD nNumberOfBytesToRead, int parts) {

fileSize = GetFileSize(existingFile, NULL);

buff = new BYTE[fileSize];

newFile = newFile;

offsets = new DWORD[parts];

OVERLAPPED* overlapped = new OVERLAPPED[parts];

for (int i = 0; i < parts; i++) {

offsets[i] = i * fileSize / nNumberOfBytesToRead / parts * nNumberOfBytesToRead;

overlapped[i].Internal = NULL;

overlapped[i].InternalHigh = NULL;

overlapped[i].hEvent = (HANDLE) i;

overlapped[i].Offset = offsets[i];

overlapped[i].OffsetHigh = 0;

}

int leftToCopy = fileSize - offsets[parts - 1];

do {

g_readers = 0;

g_writers = 0;

for (int i = 0; i < parts; i++) {

ReadFileEx(

existingFile,

buff + offsets[i],

nNumberOfBytesToRead,

&overlapped[i],

(LPOVERLAPPED_COMPLETION_ROUTINE)LPCompletionReading

);

}

while (g_readers < parts)

SleepEx(INFINITE, TRUE);

for (int i = 0; i < parts; i++) {

WriteFileEx(

newFile,

buff + offsets[i],

nNumberOfBytesToRead,

&overlapped[i],

(LPOVERLAPPED_COMPLETION_ROUTINE)LPCompletionWriting);

}

while (g_writers < parts)

SleepEx(INFINITE, TRUE);

for (int i = 0; i < parts; i++) {

overlapped[i].Offset += nNumberOfBytesToRead;

}

leftToCopy -= nNumberOfBytesToRead;

} while (leftToCopy > 0);

SetFilePointer(newFile, fileSize, NULL, FILE_BEGIN);

SetEndOfFile(newFile);

delete buff;

delete overlapped;

}