I use this fragment shader (inspired by a tutorial I found on the NVIDIA site some time ago). It basically computes bilinear interpolation of a 2D texture.

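    uniform sampler2D myTexture;
    uniform vec2 textureDimension;

    // Size of one texel in normalized texture coordinates.
    // Parenthesized so the macros expand safely inside larger expressions.
    #define texel_size_x (1.0 / textureDimension[0])
    #define texel_size_y (1.0 / textureDimension[1])

    vec4 texture2D_bilinear( sampler2D texture, vec2 uv)
    {
        vec2 f;

        // Shift by half a texel so the weights are measured from texel centers.
        uv = uv + vec2( - texel_size_x / 2.0, - texel_size_y / 2.0);

        // Fractional position inside the texel, used as interpolation weights.
        f.x = fract( uv.x * textureDimension[0]);
        f.y = fract( uv.y * textureDimension[1]);

        // Top pair of neighbors, blended horizontally.
        vec4 t00 = texture2D( texture, vec2(uv));
        vec4 t10 = texture2D( texture, vec2(uv) + vec2( texel_size_x, 0.0));
        vec4 tA = mix( t00, t10, f.x);

        // Bottom pair of neighbors, blended horizontally.
        vec4 t01 = texture2D( texture, vec2(uv) + vec2( 0.0, texel_size_y));
        vec4 t11 = texture2D( texture, vec2(uv) + vec2( texel_size_x, texel_size_y));
        vec4 tB = mix( t01, t11, f.x);

        // Blend the two rows vertically.
        vec4 result = mix( tA, tB, f.y);
        return result;
    }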
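
For reference, the arithmetic the shader performs can be written out explicitly. This only restates the nested `mix` calls, using the GLSL definition `mix(x, y, a) = x * (1 - a) + y * a`; the symbols $f_x$, $f_y$ and $t_{ij}$ stand for `f.x`, `f.y` and the four samples `t00` … `t11`.

$$
\begin{aligned}
t_A &= (1 - f_x)\, t_{00} + f_x\, t_{10} \\
t_B &= (1 - f_x)\, t_{01} + f_x\, t_{11} \\
\text{result} &= (1 - f_y)\, t_A + f_y\, t_B
\end{aligned}
$$

As an illustration with made-up weights, $f = (0.25, 0.5)$ gives contributions of $0.375$ for $t_{00}$, $0.125$ for $t_{10}$, $0.375$ for $t_{01}$ and $0.125$ for $t_{11}$, which sum to $1$.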

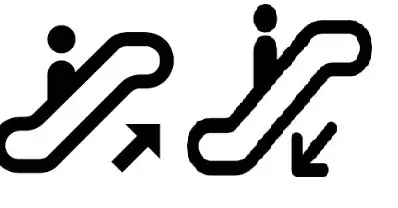

It looks quite simple and straightforward. I recently tested it on several ATI cards (latest drivers ...) and I get the following result:

(Left: nearest neighbor) (Right: shader in use)

As you can see, some horizontal and vertical lines appear. It's important to mention that these are fixed neither in viewport coordinates nor in texture coordinates.
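
One hypothesis that could be tested here (it is an assumption, not something confirmed in this post) is that the weights from `fract()` and the texel the hardware actually selects disagree when a coordinate falls very close to a texel boundary. The sketch below is a diagnostic variant under that assumption: it computes the texel index and the weights from the same value and samples at exact texel centers. The function name `texture2D_bilinear_snapped` and the parameter name `tex` are hypothetical, and the sketch assumes the texture is bound with `GL_NEAREST` filtering.

```glsl
// Diagnostic sketch only; uses the same uniforms as the original shader.
uniform sampler2D myTexture;
uniform vec2 textureDimension;

// Hypothetical variant of texture2D_bilinear that snaps to texel centers.
vec4 texture2D_bilinear_snapped(sampler2D tex, vec2 uv)
{
    vec2 texelSize = 1.0 / textureDimension;

    // Position in texel units, shifted by half a texel as in the original.
    vec2 pos = uv * textureDimension - 0.5;

    // Split once into an integer texel index and a fractional weight, so the
    // weights and the fetched texels are derived from the same computation.
    vec2 base = floor(pos);
    vec2 f = pos - base;

    // Coordinate of the center of the texel with index `base`.
    vec2 uv00 = (base + 0.5) * texelSize;

    vec4 t00 = texture2D(tex, uv00);
    vec4 t10 = texture2D(tex, uv00 + vec2(texelSize.x, 0.0));
    vec4 t01 = texture2D(tex, uv00 + vec2(0.0, texelSize.y));
    vec4 t11 = texture2D(tex, uv00 + texelSize);

    // Same blend as the original: horizontal first, then vertical.
    return mix(mix(t00, t10, f.x), mix(t01, t11, f.x), f.y);
}
```

If the lines disappear with this variant, that would point toward boundary precision rather than a compiler difference; if they remain, the cause lies elsewhere.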

I had to port several shaders to make them work correctly on ATI cards; it seems the NVIDIA implementation is a little more permissive regarding the bad code I wrote some time ago. But in this case I don't see what I should change!

Is there anything general I should know about differences between the NVIDIA and ATI GLSL implementations to overcome this?