I am using Text Mining with R: A Tidy Approach by Julia Silge & David Robinson to try to bind and graph two books, the first by Jane Austen (Persuasion, for which read "persua"), the second by Charlotte Bronte (for which read "janeyre"), in order to compare them and graph them, according to word frequency. Afterwards, I would like to add the complete corpus of 6 books by Austen and 4 books by Charlotte Bronte because I am trying to understand the CONSISTENCY OVER TIME OF AUTHORIAL IDIOLECT.

I have tried to modify some of the code found in the first chapter of Silge & Robinson's book in order to do this, from the section on word frequency.

library(tidyverse)

library(dplyr)

library(gutenbergr)

library(tidytext)

library(stringr)

library(ggplot2)

persua <- gutenberg_download(c(105))

tidy_persua <- persua %>%

unnest_tokens(word, text) %>%

count(word, sort = TRUE)

library(dplyr)

library(gutenbergr)

library(tidytext)

janeyre<- gutenberg_download(c(1260))

tidy_janeyre <- janeyre%>%

unnest_tokens(word, text) %>%

count(word, sort = TRUE)

library(tidyr)

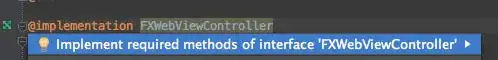

frequency <- bind_rows(mutate(tidy_persua, author = "Jane Austen"),

(mutate(tidy_janeyre, author = "Charlotte Bronte")) %>%

mutate(word = str_extract(word, "[a-z']+")) %>%

count(author, word) %>%

group_by(author) %>%

mutate(proportion = n / sum(n)) %>%

select(-n) %>%

spread(author, proportion) %>%

gather(author, proportion, Charlotte Bronte : Jane Austen)

library(scales)

ggplot(frequency, aes(x = proportion, y = `Jane Austen`,

color = abs(`Jane Austen` - proportion))) +

geom_abline(color = "gray40", lty = 2) +

geom_jitter(alpha = 0.1, size = 2.5, width = 0.3, height = 0.3) +

geom_text(aes(label = word), check_overlap = TRUE, vjust = 1.5) +

scale_x_log10(labels = percent_format()) +

scale_y_log10(labels = percent_format()) +

scale_color_gradient(limits = c(0, 0.001),

low = "darkslategray4", high = "gray75") +

facet_wrap(~author, ncol = 2) +

theme(legend.position="none") +

labs(y = "Jane Austen", x = NULL)

However, in the last sequence of codes I get a number of error messages that simply confuse me because I don't have enough experience of working with code in R.