UPDATE:

Thank you all very much for your answers. As Jesse Hall suggested, it looks like it is a driver (or hardware) problem. I tried the same app on other configurations and it worked as expected.

I tested the app on other computers which share the same GPU (ATI 4800 HD) but have different versions of the driver, and they all showed the same erroneous behavior (what seems to be a double gamma correction on write). On these computers, I have to set D3DRS_SRGBWRITEENABLE to false to fix the display. Does anyone know whether this is a known bug on this hardware?

Even stranger, I get the same end result with these two configurations:

- D3DRS_SRGBWRITEENABLE = FALSE and D3DSAMP_SRGBTEXTURE = TRUE

- D3DRS_SRGBWRITEENABLE = FALSE and D3DSAMP_SRGBTEXTURE = FALSE

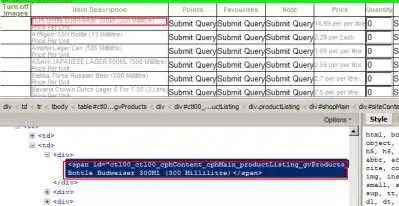

In the pixel debugger, I see that linearization is applied properly in case 1, but the (automatic) correction on write then gives the same output as case 2 (which performs no conversion at all)...

// -- END OF UPDATE

I'm having some trouble fixing the gamma correction of a DirectX9 application.

When I enable texture linearization in the samplers (D3DSAMP_SRGBTEXTURE) and sRGB write for output (D3DRS_SRGBWRITEENABLE), it looks like gamma correction is applied twice.

Here is my setup. I used the following texture (from here) to draw a fullscreen quad:

The results were visually too bright on the right side of the picture. I used PIX to debug one of those grey pixels and, if everything had been set up properly, I would have expected an output value of 0.73 (=0.5^(1.0/2.2)). Unfortunately, the output of the pixel shader was 0.871 (which looks like it could be a gamma correction applied twice?). I stepped inside the pixel shader with the debugger and the texture fetch returned a value of (0.491, 0.491, 0.491), which should mean linearization on read worked properly.

When I disable D3DRS_SRGBWRITEENABLE, the output of the pixel shader is 0.729 which looks much more correct to me.
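To sanity-check the "applied twice" guess against the numbers above, here is a minimal sketch of the arithmetic. It assumes the hardware uses the standard piecewise sRGB curve (not a pure 2.2 power), and the function names `srgb_encode` and `srgb_decode` are my own, not part of the Direct3D API:

```cpp
#include <cmath>

// Linear -> sRGB, using the standard piecewise sRGB transfer function.
// Assumption: this approximates what D3DRS_SRGBWRITEENABLE does on write.
double srgb_encode(double linear) {
    return (linear <= 0.0031308)
               ? 12.92 * linear
               : 1.055 * std::pow(linear, 1.0 / 2.4) - 0.055;
}

// sRGB -> linear, the inverse of srgb_encode.
// Assumption: this approximates what D3DSAMP_SRGBTEXTURE does on read.
double srgb_decode(double encoded) {
    return (encoded <= 0.04045)
               ? encoded / 12.92
               : std::pow((encoded + 0.055) / 1.055, 2.4);
}
```

With the fetched value of 0.491, `srgb_encode(0.491)` gives about 0.729, and `srgb_encode(srgb_encode(0.491))` gives about 0.870. That is within rounding of the 0.729 and 0.871 I see in PIX, so the numbers are at least consistent with one encode when sRGB write is disabled and two encodes when it is enabled.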

Any idea where this conversion comes from (in the debugger the pixel shader output was 0.491)? What other flags/render states should I check?

Thank you very much for your help!